How To Write Tests For External (3rd Party) API Calls with Pytest

Have you ever found yourself trying to test code that integrates with an external 3rd party API, unsure how to move forward?

You’re mocking away but stuck with constant errors, wondering if you’re just testing the mocks instead of the integration.

While unit testing your code is simple, things get trickier when dealing with third-party dependencies.

External APIs are integral to applications, allowing them to exchange data and offer enhanced functionality.

But this comes with the responsibility of ensuring that your code can handle responses reliably — even when the data comes from a service outside your control.

How do you test these interactions to catch issues before they impact users?

Should you connect to the real API or create a controlled environment? Is mocking the right approach, or would a sandbox or fake be better?

In this article, we’ll dive into the core principles of testing external API integrations and explore 10 powerful design patterns along with their pros and cons.

You’ll learn about the trade-offs of different techniques and explore practical methods, like integration testing, mocking, fakes, dependency injection, and tools such as VCR.py and WireMock.

With this, you’ll be equipped to choose the right strategy for your project’s needs, balancing factors like skills, constraints, deadlines, and team capacity.

Software Architecture and Testing Are All About Tradeoffs

Before diving into the concepts, you need to understand something very important.

In software architecture, each decision involves tradeoffs, and testing external APIs is no exception.

You balance reliability, maintainability, and test speed.

Directly testing a live API provides real-world accuracy, but it’s slow, requires network stability, and can be unreliable if the external API changes unexpectedly.

Not to mention quite expensive in terms of time, money, and resources.

Conversely, mocking or creating adapters simplifies tests and makes them faster and isolated but may miss subtle real-world behaviors, especially with evolving APIs.

Mocking also risks coupling tests tightly to implementation details, making refactors complex.

Ultimately, choosing the right strategy depends on your company’s constraints and on the API’s complexity and importance.

The goal is to strike a balance: design tests that are resilient to API changes while ensuring they reflect critical aspects of real integrations without excessive maintenance.

In this article, we’ll cover several design patterns and anti-patterns. I encourage you to take the time to read through the pros and cons so you can make the best decision for your situation. There is no one right way to do it.

First, let’s set up your local environment.

Local Environment Setup

Set up your local environment to follow along.

Clone the repo.

```shell
$ git clone <REPO_URL>
```

Create Virtual Environment

Start by creating a virtual environment and activating it.

```shell
$ python3 -m venv venv
$ source venv/bin/activate
```

Install Dependencies

```shell
$ pip install -r requirements.txt
```

Make sure your IDE points to the virtual environment’s interpreter so it picks up the installed packages and provides better type hinting.

Source Code

In this article, we’ll integrate with the file.io API.

It has a few endpoints, but we’ll keep things super simple and focus on testing a single upload_file function, which uploads a file to the external API server and returns the response.

If you wish, you can create an account to use authentication and save or view your files in the dashboard, but we won’t do that here.

Using the requests library, we have:

src/file_uploader.py

```python
import requests


def upload_file(file_name):
    with open(file_name, "rb") as file:
        response = requests.post("https://file.io", files={"file": file})

    response.raise_for_status()
    upload_data = response.json()

    if response.status_code == 200:
        print(f"File uploaded successfully. Upload data: {upload_data}")
        return upload_data
    else:
        raise Exception("File upload failed.")
```

This simple function opens the file named file_name, sends it to the REST API in a POST request, and returns the parsed JSON response.

Let’s see what the test looks like.

Pattern #1 — Test The Real API

The simplest way to test this is to use the real API.

tests/unit/test_pattern1.py

```python
"""Pattern 1 - Full Integration Test"""
from src.file_uploader import upload_file


def test_upload_file():
    file_name = "sample.txt"
    response = upload_file(file_name)
    assert response["success"] is True
    assert response["name"] == file_name
    assert response["key"] is not None
```

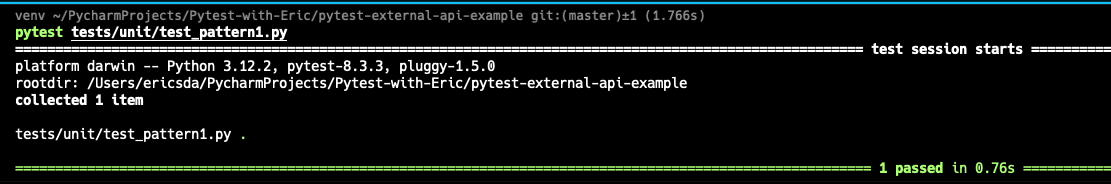

Simple. Let’s run it.

First, create a simple text file called sample.txt in the directory you run pytest from (the test opens it by a relative path).

```shell
$ pytest tests/unit/test_pattern1.py
```

Looks good, but let’s understand the tradeoffs.

Pros

- Realistic Testing: Provides a true representation of how your application will function in production, including response times, data structures, and error handling.

- Full Integration Confidence: Ensures your application is compatible with any updates or changes on the provider’s end, which is particularly useful for mission-critical integrations.

- No Mocks Required: No mocks, meaning tests are simpler, and you avoid the risk of creating brittle tests based on implementation details or incorrect assumptions about the API’s behavior.

Cons

- Slow and Unreliable: Subject to network latency and availability issues, meaning slower tests and potential flakiness.

- Rate Limits and Costs: Many APIs enforce rate limits or charge fees based on usage, which can quickly add up if you’re running tests frequently.

- Non-Deterministic: Changing data or states between requests can make tests non-repeatable and harder to debug.
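One way to soften these cons, if it suits your workflow, is to make live-API tests opt-in so they only run when an environment variable is set. A minimal sketch; the RUN_LIVE_API_TESTS variable name and the live_api_enabled helper are hypothetical:

```python
import os

# Hypothetical environment variable name; pick whatever fits your CI setup.
LIVE_API_ENV_VAR = "RUN_LIVE_API_TESTS"


def live_api_enabled(environ=None) -> bool:
    """Return True only when live (real network) API tests were explicitly enabled."""
    env = os.environ if environ is None else environ
    return env.get(LIVE_API_ENV_VAR, "").strip().lower() in {"1", "true", "yes"}
```

You could then decorate the Pattern 1 test with @pytest.mark.skipif(not live_api_enabled(), reason="live API tests disabled"), keeping the default run fast while a scheduled CI job opts in with RUN_LIVE_API_TESTS=1.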

Let’s move on to Mocking, which in my opinion is an Anti-Pattern for testing 3rd party API integrations.

Let’s learn what NOT to do.

Pattern #2- Mocking/Patching The Requests Library

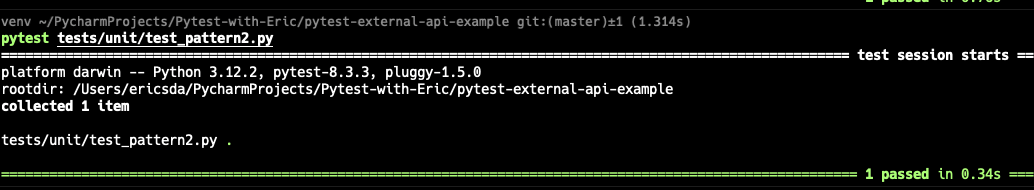

The most straightforward way is to mock the requests library.

This ensures that your tests don’t call the real API and run into any of the issues we highlighted above.

tests/unit/test_pattern2.py

```python
"""Pattern 2 - Mock the Request Library"""
from unittest.mock import patch, Mock, ANY

from src.file_uploader import upload_file


def test_upload_file():
    file_name = "sample.txt"
    stub_upload_response = {
        "success": True,
        "link": "https://file.io/TEST",
        "key": "TEST",
        "name": file_name,
    }

    with patch("src.file_uploader.requests.post") as mock_post:
        mock_post_response = Mock()
        mock_post_response.status_code = 200
        mock_post_response.json.return_value = stub_upload_response
        mock_post.return_value = mock_post_response

        response = upload_file(file_name)

    assert response["success"] is True
    assert response["link"] == "https://file.io/TEST"
    assert response["name"] == file_name
    mock_post.assert_called_once_with("https://file.io", files={"file": ANY})
```

In this code, we’re testing the upload_file function without making an actual API request by using a mock for requests.post.

- First, we define stub_upload_response, an expected response that mimics what the real API would return when upload_file is called.

- We use patch on requests.post within src.file_uploader. This temporary patch means any calls to requests.post inside this context return a controlled mock instead of making a real HTTP request.

- We create mock_post_response, a Mock object that simulates the real response from requests. We set its status_code and make its json method return our stub_upload_response.

- When upload_file runs, it now receives our mock response instead of a real one. The test then asserts that the function behaves as expected, checking the success status and other key values.

So, what’s wrong with this, and why is it undesirable?

Let’s say your boss asks you to add more functionality, perhaps download_file, update_file, or delete_file.

Imagine mocking requests.post (and whichever other requests calls these use) for each of these functions. Think about setup and teardown too: you’ll need to upload files before downloading or deleting them.

Mocking in each of these contexts can get super complex, lose touch with reality, and be extremely hard to maintain or debug.

Let’s review the pros and cons.

Pros

- No Change to Client Code: Mocking allows you to test API interactions without modifying the actual client code, making it straightforward to implement and maintain within the function being tested.

- Low Effort: Mocking requests is relatively quick and easy, especially for simple functions, saving time and effort in setting up test infrastructure.

- Familiar to Many Developers: Most developers are comfortable with mocking libraries, making this approach accessible without a steep learning curve.

Cons

- Tightly Coupled to Implementation: Tests depend on specific details of the requests library. Any change to how requests are made (e.g., switching from requests.post to requests.Session().post) can break the test, even if the functionality remains the same.

- Brittle and Hard to Maintain: As the requirements grow in complexity, mock setup becomes increasingly cumbersome and hard to manage, especially if many tests rely on similar mocks.

- Extra Effort to Patch Every Test: You need to remember to apply @patch or use a context manager in every test that may trigger API calls, increasing test setup requirements.

- Potential for Mixing Business Logic and I/O Concerns: This approach makes it easy to unintentionally mix business logic with I/O behavior, complicating the test’s purpose and leading to confusing test setups.

- Likely Need for Integration and E2E Tests: Since mocks don’t guarantee that the code will work with the actual API, you often need separate integration and end-to-end tests to validate real API behavior.

As you can see, I’m not a big fan of this approach for anything other than very simple code.

So what’s the solution?

Let’s look at other ideas to find one that’s more substantial.

Pattern #3 — Build an Adaptor/Wrapper Around The 3rd Party API

An interesting idea I got from Harry Percival’s YouTube video — Stop Using Mocks (for a while) — was the concept of an adaptor.

Harry describes it as a wrapper around the 3rd party API or around the I/O.

The wrapper is built to support your interactions with the 3rd party API and is written in your own terms (not based directly on the 3rd party’s Swagger spec).

It abstracts the low-level details (like requests.post or requests.get) away from the rest of your code, making it easy to fake, mock, or even replace the API if desired.

Let’s see how we could write our wrapper.

src/file_uploader_adaptor.py

```python
import requests


class FileIOAdapter:
    API_URL = "https://file.io"

    def upload_file(self, file_path):
        with open(file_path, "rb") as file:
            response = requests.post(self.API_URL, files={"file": file})
        return response.json()
```

In this very simple adaptor, we have a FileIOAdapter class that contains the upload_file method. This method opens a file and uploads its contents to the 3rd party API.

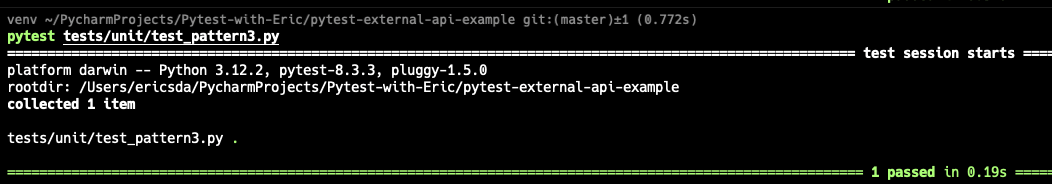

How could we test it?

We’ll use mock/patch here as well, but instead of mocking requests.post we’ll patch the upload_file method of the FileIOAdapter class.

tests/unit/test_pattern3.py

```python
"""Pattern 3 - Create an Adaptor Class and Mock It"""
from unittest import mock

from src.file_uploader_adaptor import FileIOAdapter


def test_upload_file():
    with mock.patch(
        "src.file_uploader_adaptor.FileIOAdapter.upload_file"
    ) as mock_upload_file:
        mock_upload_file.return_value = {"success": True}

        adapter = FileIOAdapter()
        response = adapter.upload_file("sample.txt")

        assert response == {"success": True}
        mock_upload_file.assert_called_once_with("sample.txt")
```

Notice that the mocking above is much simpler and needs fewer mocks.

We only mock the desired method and use a stub response — {"success": True} .

Then we create an instance of the FileIOAdapter class and call its upload_file method inside this context. Because the method is patched, the call returns the stub from mock_upload_file instead of hitting the API.

We then assert its interaction.

You can see how much easier this is compared to mocking low-level interactions with the requests library.
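Note that the test above patches the same method it calls, so on its own it mostly exercises the mock. The adapter really pays off when you test code that uses it. A minimal sketch, where share_file is a hypothetical piece of business logic and FileIOAdapter is a stand-in with the same interface as the adapter above (it raises instead of calling the network, so nothing leaks out of the tests):

```python
from unittest import mock


class FileIOAdapter:
    """Stand-in mirroring the interface of the FileIOAdapter above."""

    API_URL = "https://file.io"

    def upload_file(self, file_path):
        # The real adapter POSTs to the API; this stand-in refuses so no test hits the network
        raise RuntimeError("Real network call: patch this method in unit tests")


def share_file(adapter, file_path):
    """Hypothetical business logic: upload a file and return its share link."""
    result = adapter.upload_file(file_path)
    if not result.get("success"):
        raise ValueError(f"Upload of {file_path} failed")
    return result["link"]


def test_share_file_returns_link():
    with mock.patch.object(FileIOAdapter, "upload_file") as mock_upload_file:
        mock_upload_file.return_value = {"success": True, "link": "https://file.io/abc123"}
        link = share_file(FileIOAdapter(), "sample.txt")

    assert link == "https://file.io/abc123"
    mock_upload_file.assert_called_once_with("sample.txt")


def test_share_file_raises_when_upload_fails():
    with mock.patch.object(FileIOAdapter, "upload_file", return_value={"success": False}):
        # pytest.raises would be more idiomatic; try/except keeps the sketch stdlib-only
        try:
            share_file(FileIOAdapter(), "sample.txt")
        except ValueError:
            return
    raise AssertionError("expected share_file to raise ValueError")
```

Here the mock stands in for the I/O boundary while the assertions target your own logic (the link extraction and the failure check), which is what the adapter is meant to make easy.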

Pros

- Control Over Mocking: By mocking only the adapter, you avoid mocking external dependencies like requests directly. This approach reduces risk and simplifies test setup.

- Decouples Business Logic from External API Details: The adapter abstracts low-level API calls, so if the API changes or a new provider is used, you only update the adapter, leaving business logic untouched.

- Enhanced Maintainability and Confidence: Testing against your own API terms rather than external specifics makes tests less brittle and more future-proof, allowing for safer refactoring.

Cons

- Additional Code and Layer: Introducing an adapter adds an extra layer and increases code complexity, especially for simple integrations.

- Potential Overhead for Simple Cases: This pattern may feel like overkill for straightforward API interactions, adding unnecessary abstraction where direct calls could suffice.

Pattern #4 — Dependency Injection

Next, let’s look at another design pattern — dependency injection.

Instead of baking the upload functionality into a specific class that our business logic calls directly, how about we pass the uploader in as a dependency?

src/file_uploader_dep_injection.py

```python
from abc import ABC, abstractmethod

import requests


class FileUploader(ABC):
    @abstractmethod
    def upload_file(self, file_path: str) -> dict:
        raise NotImplementedError("Method not implemented")


class FileIOUploader(FileUploader):
    API_URL = "https://file.io"

    def upload_file(self, file_path: str) -> dict:
        with open(file_path, "rb") as file:
            response = requests.post(self.API_URL, files={"file": file})
        return response.json()


def process_file_upload(file_path: str, uploader: FileUploader):
    response = uploader.upload_file(file_path)
    return response
```

Here we define an abstract base class, FileUploader, which declares an upload_file method that concrete uploaders such as FileIOUploader must implement.

Our business logic, process_file_upload, is simple: it just takes a file path and an uploader object and delegates the upload to the uploader.

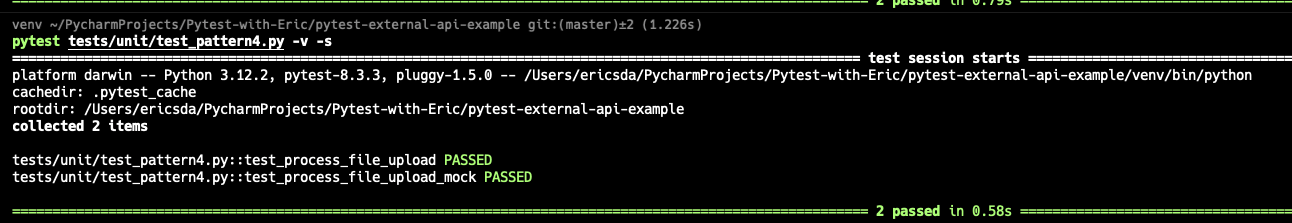

What effect does this have on our tests? Let’s see.

tests/test_pattern4.py

```python
"""Pattern 4: Dependency Injection"""
from src.file_uploader_dep_injection import process_file_upload, FileIOUploader


def test_process_file_upload():
    file_name = "sample.txt"
    file_io_uploader = FileIOUploader()
    response = process_file_upload(file_path=file_name, uploader=file_io_uploader)
    assert response["success"] is True
    assert response["name"] == file_name
    assert response["key"] is not None
```

Fairly clean, but it’s still using the real API. What should we do?

We can now easily pass a Mock object as a dependency.

tests/unit/test_pattern4.py

```python
from unittest import mock

from src.file_uploader_dep_injection import process_file_upload


def test_process_file_upload_mock():
    mock_uploader = mock.Mock()
    mock_uploader.upload_file.return_value = {
        "success": True,
        "link": "https://file.io/abc123",
    }

    result = process_file_upload("test.txt", uploader=mock_uploader)

    assert result["success"] is True
    assert result["link"] == "https://file.io/abc123"
    mock_uploader.upload_file.assert_called_once_with("test.txt")
```

We create a Mock object and specify that it has a method upload_file that returns a stubbed response.

We then pass this mock object as a dependency to our process_file_upload function.

Because we “inject” the dependency, we can decide at test time or at runtime whether to use the real FileIOUploader or a Mock object, which makes our tests very flexible.
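One caveat: a bare mock.Mock() accepts any attribute and any arguments, so if the real FileUploader interface changes (say, upload_file gains a required parameter), tests built on it can keep passing. The standard library’s mock.create_autospec builds a mock that follows the real class’s method signatures, so mismatched calls raise TypeError and unknown attributes raise AttributeError. A minimal sketch, using local copies of FileUploader and process_file_upload from the Pattern 4 module so it runs on its own:

```python
from abc import ABC, abstractmethod
from unittest import mock


class FileUploader(ABC):
    """Local copy of the abstract base class from the Pattern 4 module."""

    @abstractmethod
    def upload_file(self, file_path: str) -> dict:
        raise NotImplementedError


def process_file_upload(file_path: str, uploader: FileUploader) -> dict:
    return uploader.upload_file(file_path)


def test_process_file_upload_autospec():
    # instance=True mocks an instance, so `self` is not part of the recorded call
    mock_uploader = mock.create_autospec(FileUploader, instance=True)
    mock_uploader.upload_file.return_value = {"success": True}

    assert process_file_upload("test.txt", uploader=mock_uploader) == {"success": True}
    mock_uploader.upload_file.assert_called_once_with("test.txt")

    # A call that doesn't match the real signature now fails loudly
    try:
        mock_uploader.upload_file("a.txt", "unexpected-extra-arg")
    except TypeError:
        pass
    else:
        raise AssertionError("autospec should reject the wrong signature")
```

The injection point stays the same; only the test double becomes stricter about matching the interface it replaces.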

Tradeoffs?

Pros

- Flexible Testing Setup: Dependency injection allows you to swap in mock objects easily, making it simple to test different scenarios without relying on the real API.

- Decouples Business Logic from Dependencies: Business logic in process_file_upload doesn’t depend on specific implementation details of FileIOUploader, making the code more modular and adaptable to changes.

- Encourages Reusability and Cleaner Code: This approach promotes reusable components and cleaner, testable code by separating logic and dependencies.

Cons

- Additional Complexity: Introducing abstract classes and interfaces adds layers, which can feel excessive for simpler use cases.

- More Setup for Simple Integrations: Dependency injection may seem overkill for straightforward functionality, especially when there’s no need to substitute dependencies.

- Potential Overhead in Small Projects: This pattern may add overhead to smaller projects that don’t require high test flexibility or interchangeable dependencies.

Pattern #5 — Use a Fake with Dependency Injection

The previous pattern (Dependency Injection) is really good, but it still uses mocks.

What if you wanted to avoid mocks completely?

You can use a Fake instead.

A fake is a different kind of test double from mocks and patches: an in-memory representation of the object you’re trying to replace. That’s my informal definition.

Mocks are simple objects that accept any method call and let you assert on the interactions.

Fakes are simplified but working versions of the real thing you’re replacing. This could be a class, a database, an external API, or anything else.

Let’s see how to implement fakes.

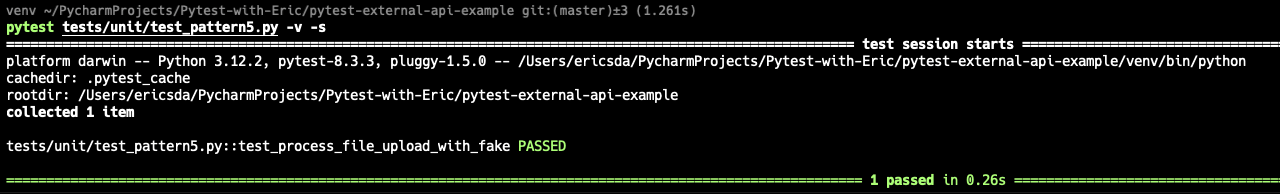

tests/unit/test_pattern5.py

```python
"""Pattern 5: Dependency Injection + Fake - Create a Fake Object and Inject It"""
from src.file_uploader_dep_injection import process_file_upload


class FakeFileIOUploader:
    def __init__(self):
        self.uploaded_files = {}

    def upload_file(self, file_path: str) -> dict:
        # Simulate uploading by "saving" the file's path in a dictionary
        self.uploaded_files[file_path] = f"https://file.io/fake-{file_path}"
        return {"success": True, "link": self.uploaded_files[file_path]}


def test_process_file_upload_with_fake():
    fake_uploader = FakeFileIOUploader()

    result = process_file_upload("test.txt", uploader=fake_uploader)

    assert result["success"] is True
    assert result["link"] == "https://file.io/fake-test.txt"
    assert "test.txt" in fake_uploader.uploaded_files
```

Here we have a class, FakeFileIOUploader, which creates an in-memory dict of uploaded files upon initialization.

The upload_file method adds to the dict.

Using our dependency injection design, we can easily inject the fake into our test.

Fakes can use lists, dicts, or even in-memory SQLite databases for quick operations.
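As an illustration of the SQLite option, here is a minimal sketch of a fake that keeps its state in an in-memory database instead of a dict. SqliteFakeUploader, its fake keys, and its link format are hypothetical; it only mimics the upload_file interface used above and says nothing about how file.io stores files:

```python
import sqlite3


class SqliteFakeUploader:
    """Fake uploader that keeps 'uploaded' files in an in-memory SQLite database."""

    def __init__(self):
        self.conn = sqlite3.connect(":memory:")
        self.conn.execute(
            "CREATE TABLE uploads (upload_key TEXT PRIMARY KEY, name TEXT NOT NULL)"
        )

    def upload_file(self, file_path: str) -> dict:
        # Deterministic fake keys (fake1, fake2, ...) keep assertions simple
        count = self.conn.execute("SELECT COUNT(*) FROM uploads").fetchone()[0]
        upload_key = f"fake{count + 1}"
        with self.conn:  # commits the INSERT
            self.conn.execute(
                "INSERT INTO uploads (upload_key, name) VALUES (?, ?)",
                (upload_key, file_path),
            )
        return {
            "success": True,
            "key": upload_key,
            "name": file_path,
            "link": f"https://file.io/{upload_key}",
        }

    def uploaded_names(self) -> list:
        rows = self.conn.execute("SELECT name FROM uploads ORDER BY rowid")
        return [name for (name,) in rows]
```

Because it exposes the same upload_file method, it can be injected into process_file_upload exactly like FakeFileIOUploader; the extra uploaded_names helper lets tests inspect state without reaching into internals.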

So what’s the catch?

Pros

- More Readable and Realistic Tests: Fakes simulate the behavior of the real object, making tests more intuitive and closer to real-world scenarios than mocks.

- Encourages Better Design: Building a fake forces you to think carefully about how your classes and dependencies are structured, often leading to cleaner, modular code.

- No Dependency on External APIs: Since fakes operate in memory (e.g., using dictionaries or lists), you avoid the need for real API calls, improving test reliability and speed.

Cons

- Increased Code for Tests: Creating a good functional fake requires more initial setup and maintenance, especially for complex APIs, which can lead to larger, more involved test code.

- Syncing Complexity: The fake must stay up-to-date with the real API’s behavior, so any changes to the API may require updates to the fake, adding maintenance overhead.

- Potential Overhead for Small Tests: For simple API interactions, a full-featured fake may feel like overkill, introducing unnecessary complexity where a mock might suffice.

With dependency injection, we can easily run our code with different options (the real API, mocks, and even fakes) to test our system, all without changing our process_file_upload function.

Neat, isn’t it?

Pattern #6 — Use a Sandbox API

Using a Sandbox API is a realistic approach for testing external API interactions.

Many third-party providers, like Stripe, offer sandbox environments that mimic production settings, allowing you to test against the actual API behavior without impacting real data or incurring charges.

This setup provides a more reliable integration test compared to using live APIs, as it’s designed specifically for testing.

However, while sandbox testing is safer than hitting production, it’s generally slower than using fakes or mocks, and you have to remember to clean up to avoid accumulating tons of messy data in your sandbox.
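One way to make that cleanup hard to forget is to record every resource a test creates and delete it in a finally block, so cleanup runs even when an assertion fails. A minimal sketch; sandbox_cleanup is hypothetical, and delete stands in for whatever delete call your provider’s sandbox offers:

```python
from contextlib import contextmanager
from typing import Callable, Iterator, List


@contextmanager
def sandbox_cleanup(delete: Callable[[str], None]) -> Iterator[List[str]]:
    """Yield a list for recording created resource keys; delete them all on exit."""
    created: List[str] = []
    try:
        yield created
    finally:
        # Runs whether the test body passed or raised; newest resources first
        for key in reversed(created):
            delete(key)
```

In pytest, the same idea is usually written as a fixture that yields the list and runs the deletions after the yield.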

Pros

- Realistic Testing: Sandbox APIs closely resemble production, allowing you to test with real API structures, error handling, and response times without impacting live data.

- Fewer Maintenance Burdens: Since you’re not creating test doubles or mocks, there’s less setup and no need to update test objects whenever the API changes.

- Accurate Data Validation: Sandboxes enable end-to-end testing of data validation and response handling in a safe environment, improving confidence in integration accuracy.

Cons

- Slower Test Execution: Sandbox environments involve real network calls, which are inherently slower than tests with fakes or mocks.

- Limited Access or Features: Some providers restrict sandbox functionality or rate-limit usage, which can constrain testing, especially for edge cases.

- Reliability Concerns: Although more stable than live APIs, sandbox environments can still experience downtime or occasional inconsistencies, especially if they are not kept up to date with the real API.

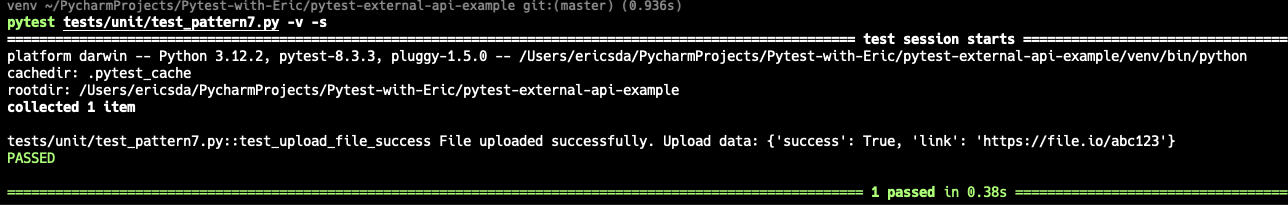

BONUS Pattern #7 — Use the responses Library

While the above 6 patterns give you plenty to play with, I thought I’d include a few BONUS test patterns as well.

The responses library from Sentry is a neat utility for mocking out the requests library, so you can test code that uses requests without making real HTTP calls.

Here’s how you could implement it.

tests/unit/test_pattern7.py

```python
"""Pattern 7: Mocking external API calls using responses library"""
import responses

from src.file_uploader import upload_file


@responses.activate  # without this, the registered mock is not active
def test_upload_file_success():
    # Mock the successful response from file.io
    responses.add(
        responses.POST,
        "https://file.io",
        json={"success": True, "link": "https://file.io/abc123"},
        status=200,
    )

    result = upload_file("sample.txt")

    assert result == {"success": True, "link": "https://file.io/abc123"}
    assert responses.calls[0].response.status_code == 200
```

You can test all kinds of interactions this way, which is quite cool.

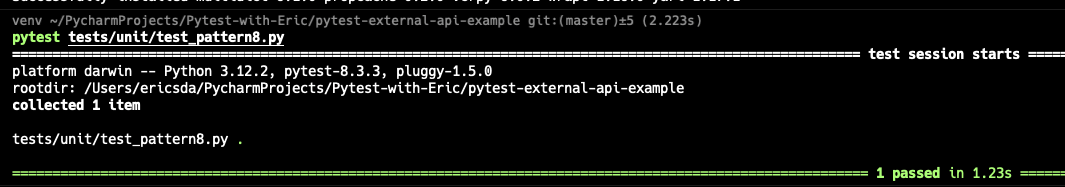

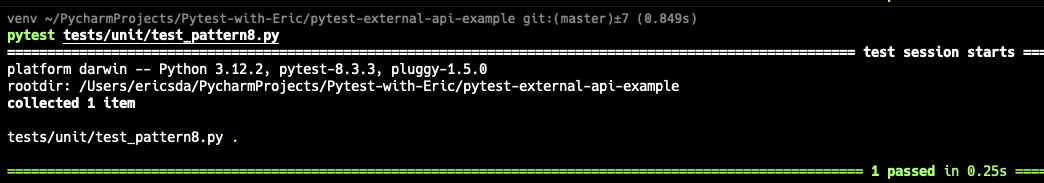

BONUS Pattern #8 — Use VCR.py

A very interesting tool I recently discovered (though it has probably existed for a long time) is VCR.py.

It records HTTP interactions in a cassette file (YAML format) and replays them during future test runs.

This allows you to test your function’s behavior without making repeated real API calls.

tests/unit/test_pattern8.py

```python
import vcr

from src.file_uploader import upload_file


@vcr.use_cassette("upload_file.yaml")
def test_upload_file():
    file_name = "sample.txt"
    response = upload_file(file_name)
    assert response["success"] is True
    assert response["name"] == file_name
    assert response["key"] is not None
```

With this setup, the test calls the real API only once and then relies on the recorded response, making subsequent runs faster and more reliable.

Initial Run — 1.23 secs

2nd Run — 0.25 secs

The @vcr.use_cassette decorator applies vcr.py to the test function and specifies upload_file.yaml as the cassette file.

The first time this test runs, vcr.py will save the HTTP request and response in this file.

On subsequent runs, it will replay the saved response without making a real API call.
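To make the record-then-replay idea concrete, here is a toy sketch of the concept using only the standard library. It is not how VCR.py works internally (VCR.py intercepts the HTTP layer and stores full request/response pairs in YAML); record_or_replay and its JSON cassette format are hypothetical:

```python
import json
from pathlib import Path
from typing import Callable


def record_or_replay(cassette: Path, fetch: Callable[[], dict]) -> dict:
    """Replay the recorded response if the cassette exists; otherwise call fetch() and record it."""
    if cassette.exists():
        # Replay: no call to fetch(), so no network traffic
        return json.loads(cassette.read_text())
    response = fetch()  # Record: the only "real" call
    cassette.parent.mkdir(parents=True, exist_ok=True)
    cassette.write_text(json.dumps(response, indent=2))
    return response
```

The trade-off matches real cassettes: deleting the file forces a fresh recording, while a stale file keeps replaying old data. In VCR.py, this behavior is controlled by the record_mode option.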

Pros

- Realistic Testing Without Network Dependence: vcr.py captures real API responses, providing realistic data while avoiding the need for repeated network calls.

- Improved Test Speed and Reliability: Once the request is recorded in a cassette, subsequent tests are faster and less prone to flakiness, as they don’t rely on external API availability.

- Reduced API Costs and Rate Limit Issues: vcr.py minimizes the need for live API calls, which can reduce costs and prevent hitting rate limits.

- Easily Shareable Test Data: Cassettes store API responses in a readable YAML format, making it easy to inspect or share data with team members for consistency.

Cons

- Outdated Cassettes with API Changes: If the API’s behavior or response format changes, existing cassettes can become outdated.

- Initial Setup Complexity: Configuring and managing cassette files adds complexity, especially for larger projects with many endpoints, where cassette organization can become challenging.

- Limited Coverage for Dynamic Scenarios: For highly dynamic data, vcr.py may not capture all possible response variations, which can lead to incomplete test coverage or require frequent cassette updates.

- Possible Overhead in Managing Cassette Files: Accumulating many cassettes can add overhead, especially if updates are frequent.

Nevertheless, I found the concept interesting.

BONUS Pattern #9 — Contract Testing

Contract testing is a testing strategy that verifies interactions between services or components meet predefined “contracts.”

By ensuring each side adheres to an agreed-upon structure (or contract), you reduce integration issues and increase confidence in complex, distributed systems.

For our upload_file function, the contract would define that a POST request is sent to https://file.io with the file data, and that the API responds with a JSON object containing success, link, key, and name keys.

You would then use a contract testing tool like Pact, which lets you define a contract, verify that your code produces requests that meet this contract, and validate responses against it.

Covering contract testing is outside the scope of this article but if you’re interested let me know and I’ll cover it in detail.
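Short of adopting Pact, you can at least pin down the response side of this contract in a plain test. A minimal, hand-rolled sketch; the expected keys come from the contract described above, while the field types are assumptions based on the sample responses in this article:

```python
from typing import Any, Dict, List

# Consumer-side expectations for a successful upload response (types are assumed)
UPLOAD_RESPONSE_CONTRACT: Dict[str, type] = {
    "success": bool,
    "link": str,
    "key": str,
    "name": str,
}


def contract_violations(payload: Dict[str, Any], contract: Dict[str, type]) -> List[str]:
    """Return human-readable violations; an empty list means the payload conforms."""
    problems = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif not isinstance(payload[field], expected_type):
            actual = type(payload[field]).__name__
            problems.append(f"{field}: expected {expected_type.__name__}, got {actual}")
    return problems
```

You could run the same check against your fakes’ stub payloads and against a recorded cassette or sandbox response, so fakes and reality are held to the same expectations. Unlike Pact, nothing here is verified by the provider.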

Pros

- Ensures Request and Response Consistency: Validates that upload_file conforms to the expected API structure.

- Early Detection of API Changes: If the provider changes the API, this test will catch it, preventing runtime errors.

- Documentation: Acts as documentation for expected API interactions, useful for both your team and the API provider.

- Reduces Flakiness: Less reliance on brittle mocks, especially for API changes.

Cons

- Setup Complexity or Overhead: Requires installing and setting up a contract testing tool.

- Initial Investment: More setup than simple mocks but provides longer-term stability.

- Provider Coordination: For the full benefit, the API provider should verify the contract, which may require extra coordination.

BONUS Pattern #10 — Use WireMock

Another interesting tool I came across in Szymon Miks’ blog was WireMock.

A lot of people have recommended it on Reddit so I thought I’d give it a shot.

From the website,

WireMock frees you from dependency on unstable APIs and allows you to develop with confidence. It’s easy to launch a mock API server and simulate a host of real-world scenarios and APIs — including REST, SOAP, OAuth2 and more.

Let’s see how to use it. There’s also a Python library, python-wiremock, which offers a Python interface to WireMock.

To use it you’ll need to install Docker and have it running.

You’ll also need 2 dependencies — wiremock and testcontainers which are included in the requirements.txt file of the repo.

Before we write our test, we’ll make a small change to our application code. We’ll pass the API URL as an argument instead of hardcoding it.

This lets the test point our application at the WireMock server instead of the real API, without mocking or patching the URL.

src/file_uploader.py

```python
import requests


def upload_file_param(file_name, base_url):
    with open(file_name, "rb") as file:
        response = requests.post(base_url, files={"file": file})

    response.raise_for_status()
    upload_data = response.json()

    if response.status_code == 200:
        print(f"File uploaded successfully. Upload data: {upload_data}")
        return upload_data
    else:
        raise Exception("File upload failed.")
```

Let’s write our tests.

tests/unit/test_pattern10.py1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41from src.file_uploader import upload_file_param

import pytest

from wiremock.testing.testcontainer import wiremock_container

from wiremock.constants import Config

from wiremock.client import *

def wiremock_server():

# Set up WireMock server with mappings

with wiremock_container(secure=False) as wm:

# Set base URL for WireMock admin (only for WireMock setup purposes)

Config.base_url = wm.get_url("__admin")

# Map the upload endpoint for a successful response

Mappings.create_mapping(

Mapping(

request=MappingRequest(method=HttpMethods.POST, url="/"),

response=MappingResponse(

status=200,

json_body={

"success": True,

"link": "https://file.io/TEST",

"key": "TEST",

"name": "sample.txt",

},

),

persistent=False,

)

)

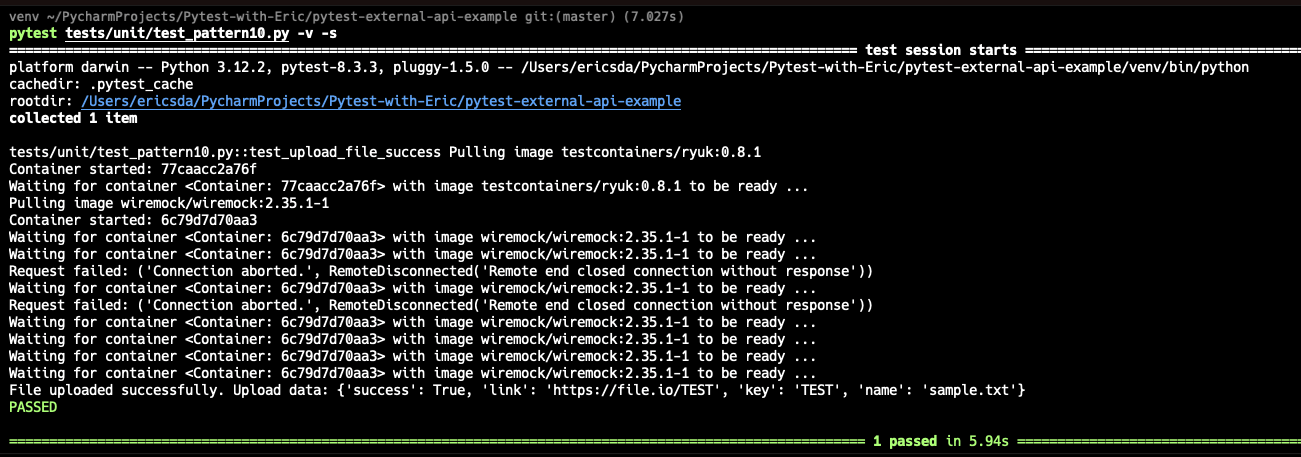

yield wm # Yield the WireMock instance for the tests

def test_upload_file_success(wiremock_server):

response = upload_file_param("sample.txt", wiremock_server.get_url("/"))

assert response["success"] is True

assert response["link"] == "https://file.io/TEST"

assert response["name"] == "sample.txt"

assert response["key"] == "TEST"

- The wiremock_server fixture starts a WireMock container to act as a mock server for the API.

- It configures Config.base_url to point to the WireMock admin endpoint, enabling setup of endpoint mappings.

- A mapping is created for a POST request to the / URL, simulating a successful response.

- This fixture yields the running WireMock server, making it available to the test.

- The test function test_upload_file_success uses wiremock_server to call upload_file_param with the mock server’s URL.

The test runs like a normal call; the only difference is that we inject the WireMock server’s URL instead of the real one.

It’s fairly bulky, but it’s a decent way to simulate real-world scenarios.
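If Docker isn’t available, the underlying idea (inject a base URL that points at a local stub server) can be sketched with the standard library alone. This is not WireMock and has none of its request matching or fault simulation; StubUploadHandler and the canned payload are hypothetical, and urllib stands in for requests to keep the sketch dependency-free:

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical canned payload, mirroring the WireMock mapping above
CANNED_RESPONSE = {
    "success": True,
    "link": "https://file.io/TEST",
    "key": "TEST",
    "name": "sample.txt",
}


class StubUploadHandler(BaseHTTPRequestHandler):
    """Answers every POST with the same canned JSON, like a single stub mapping."""

    def do_POST(self):
        # Read (and discard) the request body so the connection stays clean
        length = int(self.headers.get("Content-Length", 0))
        self.rfile.read(length)
        body = json.dumps(CANNED_RESPONSE).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence the default per-request logging to stderr


def start_stub_server():
    """Start the stub on a free localhost port; return (server, base_url)."""
    server = HTTPServer(("127.0.0.1", 0), StubUploadHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    host, port = server.server_address
    return server, f"http://{host}:{port}/"


def post_bytes(base_url, data):
    """Minimal client: POST raw bytes to base_url and decode the JSON reply."""
    # An empty ProxyHandler ignores any HTTP proxy environment variables
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    request = urllib.request.Request(base_url, data=data, method="POST")
    with opener.open(request, timeout=5) as response:
        return json.loads(response.read())
```

Because upload_file_param already takes base_url as an argument, it should be possible to point it at the same URL; the stub ignores the multipart body and always returns the canned JSON. WireMock layers request matching, delays, and faults on top of this basic idea.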

Tradeoffs?

Pros

- Realistic API Simulation: WireMock closely simulates actual API responses, allowing for precise control over scenarios like errors or timeouts.

- Consistency: It provides stable responses across test runs, avoiding flaky tests and dependency on live API availability.

- Complex Scenario Handling: Supports conditional responses, delays, and faults, helping test edge cases and error handling.

- Automated and Isolated Testing: Easily integrates into CI pipelines for isolated, automated testing without affecting the live API.

Cons

- Setup Complexity: Requires configuration for each endpoint and response scenario, which can be time-consuming to maintain.

- Fixed Scenarios: Simulations may become outdated if the real API changes, and they miss any unplanned behaviors.

- Resource Intensive: Running WireMock servers, especially in CI, consumes more resources than lighter mocking options.

- Not Fully End-to-End: Although it simulates the API, WireMock doesn’t cover the actual live API integration, which may still need testing.

Conclusion

In this quite long but important article, you learned many ways to test 3rd party integrations.

You explored several techniques, starting with real API tests, then mocking, adaptors, dependency injection, fakes, and sandbox testing.

As a bonus, you also learned to use the responses library, VCR.py, and WireMock for simulating external APIs locally, and got a brief introduction to contract testing.

All these techniques are solid (except pure mocking of the requests library) and offer a strong foundation for testing external API integrations.

The most important concept in this entire article is that of tradeoffs (pros and cons) — for every decision you make, there are pros and cons.

Choose the one with the pros you need and the cons you can manage.

Happy Testing!

If you have any questions or would like to share your experience, please get in touch. I’d love to hear how it’s working for you!

Additional Reading

This article was heavily inspired by Harry Percival’s PyCon talk, Stop Using Mocks (for a while), and the corresponding blog post, Writing tests for external API calls.

Special thanks to Szymon Miks (personal blog) for his interesting ideas too.

- GitHub Repo Used in this Article

- File.IO API Documentation

- How Pytest Fixtures Can Help You Write More Readable And Efficient Tests

- Pytest Conftest With Best Practices And Real Examples

- What is Setup and Teardown in Pytest? (Importance of a Clean Test Environment)

- Common Mocking Problems & How To Avoid Them (+ Best Practices)

- How To Mock In Pytest? (A Comprehensive Guide)

- Mocking Vs. Patching (A Quick Guide For Beginners)