How To Overwrite Dynaconf Variables For Testing In Python

Debugging tests is frustrating.

It’s even worse when you find out that your environment variables or config settings were wrong, causing tests to fail.

Worse still, you might accidentally run those tests against your production database!

Managing configurations across multiple environments — development, testing, staging, and production — can quickly become a headache.

That is why robust configuration management is essential, especially in frameworks like Flask or Django.

But how do you separate test settings from production?

The solution? Dynaconf — a powerful tool for Python configuration management.

This article will show you how to configure Dynaconf for testing, ensuring your test environment uses different settings from development or production.

You’ll learn how to set up Dynaconf with Pytest Fixtures to ensure isolation and manage different environment variables between tests.

We’ll also briefly touch on how to seamlessly integrate Dynaconf with Flask and Django.

By the end, you’ll be well equipped to confidently handle configuration in your tests and application, debug common issues, and focus more on writing application code than debugging configuration problems.

Let’s dive in!

What is Dynaconf and Why use it?

If you’re already well acquainted with Dynaconf, feel free to move on to the next section.

Dynaconf is a flexible, multi-environment configuration management tool for Python applications.

Traditionally, developers handled configurations using separate files for each environment (like settings.py or .env files).

While this works, it often requires manual changes at runtime or hardcoding values for different environments.

This approach is prone to errors and difficult to scale as the number of environments grows.

Dynaconf simplifies this process by allowing centralized configuration management across multiple environments (development, testing, production) with a single settings file.

It supports environment variables, .env files, and even hierarchical settings stored in JSON, YAML, or TOML.

With its ability to switch between environments seamlessly, handle secrets, and validate settings, Dynaconf makes configuration management easy, especially when using web frameworks like Flask or Django.

Most importantly, it follows a good design principle: keep config separate from code.

How To Use Dynaconf — Quick Example

Let’s see a simple example of how to use Dynaconf.

Then we’ll talk about how to configure it with Pytest so that tests always use the test settings instead of your development or production ones. That means less risk of contaminating real data or, worse, wiping it out entirely.

Let’s set up your environment first.

Setup Local Environment

Clone the repo, or feel free to set up your own.

    $ git clone <REPO_URL>

Create Virtual Environment

Start by creating a virtual environment and activating it.

    $ python3 -m venv venv
    $ source venv/bin/activate

Install Dependencies

    $ pip install -r requirements.txt

Make sure to reference your virtual environment interpreter in your IDE so it picks it up and provides better type hinting.

Alternatively, if you don’t want to use a requirements file, just install the packages directly.

    $ pip install dynaconf
    $ pip install pytest

Now that you have it set up, let’s see the example code.

Initialize Dynaconf

To initialize Dynaconf, run:

    $ dynaconf init -f toml

It will create the following files:

    .
    ├── config.py       # Where you import your settings object (required)
    ├── .secrets.toml   # Sensitive data like passwords and tokens (optional)
    └── settings.toml   # Application settings (optional)

You can read more about the CLI commands in the Dynaconf documentation.

You can manually create the files too.

After this, your repo should look something like this.

    .
    ├── .gitignore
    ├── .python-version
    ├── pytest.ini
    ├── requirements.txt
    ├── settings.toml
    ├── src
    │   ├── __init__.py
    │   ├── app.py
    │   └── config.py
    └── tests
        ├── __init__.py
        ├── conftest.py
        └── test_app.py

Dynaconf Configuration

Place the settings.toml file at the root of the repo and define your settings.

For example, in this case:

    [development]
    DEBUG = true
    DATABASE_URL = "sqlite:///development.db"

    [testing]
    DEBUG = true
    DATABASE_URL = "sqlite:///testing.db"

    [production]
    DEBUG = false
    DATABASE_URL = "postgresql://user:pass@localhost/db"

We have 3 environments: development, testing, and production.

Development and testing use a simple SQLite database URL, while production uses a Postgres database URL.

I’ve kept the code simple so we can focus on how to use Dynaconf.

Next, in src/config.py:

    from pathlib import Path

    from dynaconf import Dynaconf  # type: ignore

    settings = Dynaconf(
        root_path=Path(__file__).parent,  # where Dynaconf starts looking for the files below
        envvar_prefix="DYNACONF",  # DYNACONF_* environment variables override file values
        settings_files=["settings.toml", ".secrets.toml"],
    )

This creates a Dynaconf settings object. The complete list of accepted configuration arguments can be found in the Dynaconf documentation.

Application Code

Now that we’ve defined our config settings, let’s see how to use it in our application.

src/app.py

    from dynaconf import settings


    def get_database_url():
        """Returns the current DATABASE_URL based on the active environment."""
        return settings.DATABASE_URL


    def is_debug_mode():
        """Returns whether DEBUG mode is enabled based on the active environment."""
        return settings.DEBUG


    if __name__ == "__main__":
        print(get_database_url())
        print(is_debug_mode())

This simple application prints the config.

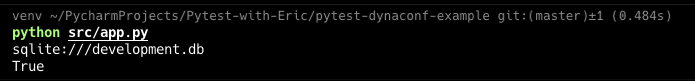

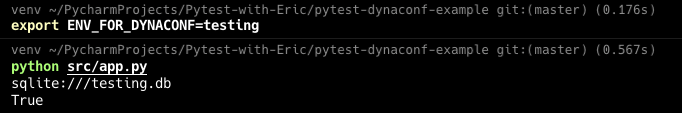

Running Application Code

Let’s run the application code first to make sure it works before running tests.

This is contrary to my preferred style of TDD but that’s not important here.

Run the app:

    $ python src/app.py

You can see it’s using the development environment.

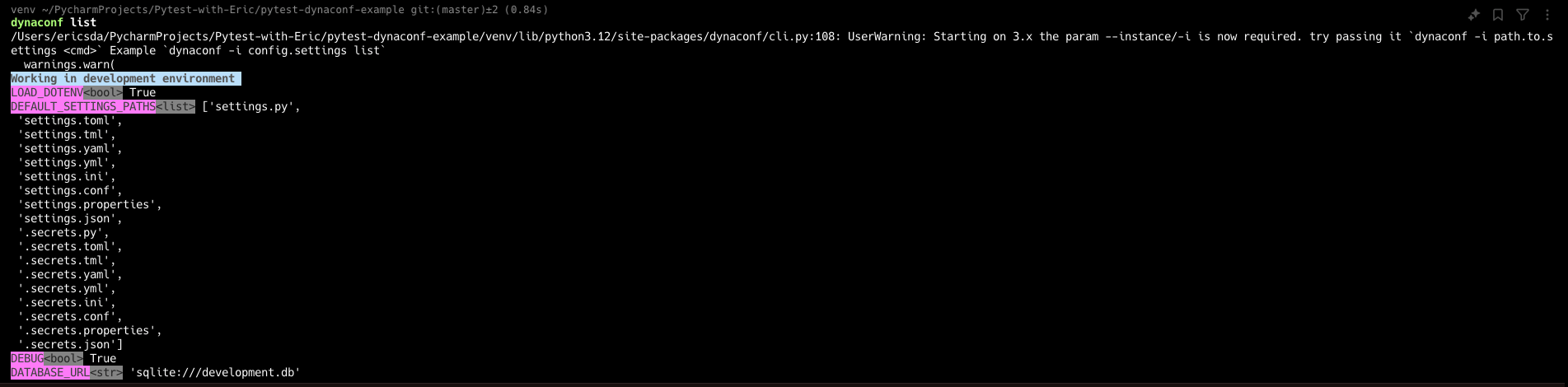

We can also check the current active environment using the Dynaconf CLI:

    $ dynaconf list

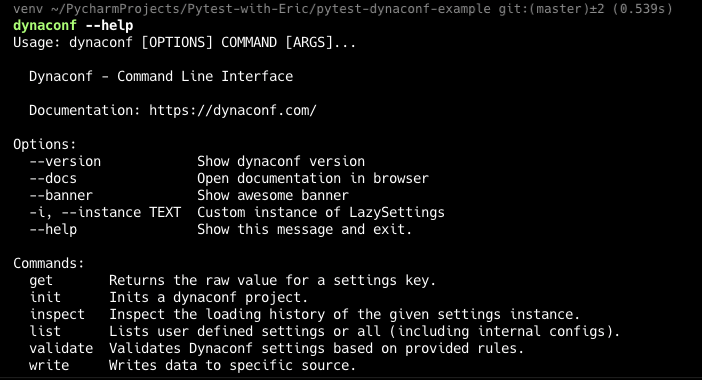

For a complete list of commands, run:

    $ dynaconf --help

Important Behavior Note:

When no environment is specified, Dynaconf uses the development profile rather than stopping at default. This follows from how its environment management is designed:

- Default values: Settings under [default] are always loaded first and act as the base for every environment.

- Development environment: If the environment is not explicitly set, Dynaconf uses development as the active environment.

- Priority of development: If you have both [default] and [development] in your settings.toml and you don’t explicitly specify an environment, the values in [development] override the ones in [default].
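To make the layering concrete, here is a minimal sketch in plain Python. It does not use Dynaconf and is not how Dynaconf is implemented. The SETTINGS_BY_ENV dictionary and the resolve_settings function are hypothetical names used only to show default values acting as a base, with the active environment (development when nothing is specified) overriding them.

```python
from typing import Any, Dict, Optional

# Hypothetical in-memory stand-in for a parsed settings.toml.
SETTINGS_BY_ENV: Dict[str, Dict[str, Any]] = {
    "default": {"DEBUG": False, "DATABASE_URL": "sqlite:///default.db"},
    "development": {"DEBUG": True, "DATABASE_URL": "sqlite:///development.db"},
    "testing": {"DATABASE_URL": "sqlite:///testing.db"},
}


def resolve_settings(
    settings_by_env: Dict[str, Dict[str, Any]], env: Optional[str] = None
) -> Dict[str, Any]:
    """Merge the default section with the active environment; the environment wins."""
    active = env or "development"  # no explicit environment -> development
    merged = dict(settings_by_env.get("default", {}))  # base layer
    merged.update(settings_by_env.get(active, {}))  # environment overrides
    return merged
```

In this sketch, resolve_settings(SETTINGS_BY_ENV, "testing") keeps DEBUG from the default section because the testing section does not redefine it.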

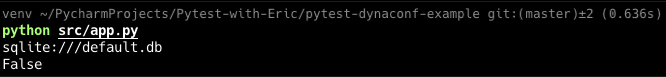

If you comment out the other 3 environments in the settings.toml file, you’ll see the below.

Switching Environment Settings In Dynaconf

To switch environments (profiles), simply set the ENV_FOR_DYNACONF environment variable.

    $ export ENV_FOR_DYNACONF=testing

You can also pass environments=True when creating a Dynaconf instance so that the top-level sections of settings.toml are treated as environments. See the Dynaconf documentation for details.
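The selection step can be pictured with a small stand-alone sketch. It only mimics the idea of reading ENV_FOR_DYNACONF and falling back to development. The active_environment function is a hypothetical helper, and the lower-casing and whitespace trimming are assumptions of this sketch rather than documented Dynaconf behavior.

```python
import os
from typing import Mapping


def active_environment(environ: Mapping[str, str] = os.environ) -> str:
    """Return the environment name selected via ENV_FOR_DYNACONF."""
    # Assumption for this sketch: an unset or empty variable means "development".
    chosen = environ.get("ENV_FOR_DYNACONF", "").strip().lower()
    return chosen or "development"
```

If you only want to switch for a single command, most shells let you prefix it instead of exporting, for example ENV_FOR_DYNACONF=testing python src/app.py.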

Now that you understand the basics of how to use Dynaconf, let’s learn how to use test settings while running tests, so we avoid accidentally running tests against the production database.

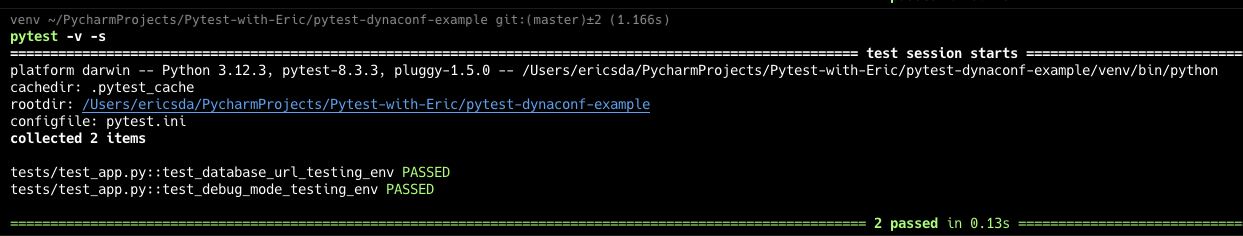

Using Dynaconf With Pytest (Running Tests)

Dynaconf makes this really easy as well.

Let’s define a simple fixture in our conftest.py file.

tests/conftest.py

    import pytest
    from dynaconf import settings


    @pytest.fixture(scope="session", autouse=True)
    def set_test_settings():
        """Fixture to force the use of the 'testing' environment for all tests."""
        settings.configure(FORCE_ENV_FOR_DYNACONF="testing")

Dynaconf offers the .configure method to which you can pass the FORCE_ENV_FOR_DYNACONF to automatically default to the testing profile for your entire test session.

This acts as a guardrail to ensure that you don’t accidentally write to a production database when running tests.
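As an extra, purely illustrative layer on top of that guardrail, a test suite could also refuse to start when the resolved database URL does not look like a test database. The sketch below is not something Dynaconf provides. The names SAFE_TEST_SCHEMES, is_test_database, and assert_test_database are hypothetical, and the rule itself (SQLite scheme plus the word "test" in the URL) is an assumption you would adapt to your own naming.

```python
from urllib.parse import urlparse

# Hypothetical allow-list: URL schemes this sketch treats as safe for tests.
SAFE_TEST_SCHEMES = {"sqlite"}


def is_test_database(url: str) -> bool:
    """Return True when the URL uses a safe scheme and mentions 'test'."""
    scheme = urlparse(url).scheme
    return scheme in SAFE_TEST_SCHEMES and "test" in url.lower()


def assert_test_database(url: str) -> None:
    """Raise RuntimeError if the URL does not look like a test database."""
    if not is_test_database(url):
        # Report only the scheme so credentials in the URL are never echoed.
        scheme = urlparse(url).scheme or "unknown"
        raise RuntimeError(f"Refusing to run tests against a {scheme!r} database URL")
```

One possible use is to call assert_test_database(settings.DATABASE_URL) as the last line of the session fixture, so a misconfigured run fails before any test touches the database.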

Let’s write a couple of tests and verify that this works.

tests/test_app.py

    from src.app import get_database_url, is_debug_mode


    def test_database_url_testing_env():
        assert get_database_url() == "sqlite:///testing.db"


    def test_debug_mode_testing_env():
        assert is_debug_mode() is True

Voila!

Overwriting Dynaconf Variables for Testing in Python

With Dynaconf, you can easily overwrite configuration settings for testing by using the settings.configure() method.

This allows you to force the use of the “testing” environment during your test session, ensuring that all settings are loaded from the testing profile.

For example, in your Pytest setup, you can define a fixture to configure the environment:

    import pytest
    from dynaconf import settings


    @pytest.fixture(scope="session", autouse=True)
    def set_test_settings():
        """Force the use of 'testing' environment for all tests."""
        settings.configure(FORCE_ENV_FOR_DYNACONF="testing")

To override a specific environment variable, you can modify the configuration dynamically using the settings.set() method or environment variables.

Here’s an example.

    import pytest
    from dynaconf import settings


    @pytest.fixture(scope="session", autouse=True)
    def set_test_settings():
        # Force the use of the 'testing' environment
        settings.configure(FORCE_ENV_FOR_DYNACONF="testing")
        # Override a specific variable
        settings.set("DATABASE_URL", "sqlite:///custom_test.db")


    def test_database_url():
        assert settings.DATABASE_URL == "sqlite:///custom_test.db"

In this example, we override the DATABASE_URL for the “testing” environment during a test.
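An override that is never undone can leak into later tests. One way to keep overrides local is a context manager that restores the previous values on exit. The sketch below is a generic pattern demonstrated on a plain SimpleNamespace stand-in, not on a real Dynaconf object. The override helper and fake_settings are hypothetical names, and whether attribute assignment behaves the same on your Dynaconf settings object is something to verify in your project.

```python
from contextlib import contextmanager
from types import SimpleNamespace
from typing import Any, Iterator

_MISSING = object()  # marks attributes that did not exist before the override


@contextmanager
def override(settings: Any, **values: Any) -> Iterator[Any]:
    """Temporarily set attributes on `settings`, restoring them on exit."""
    previous = {key: getattr(settings, key, _MISSING) for key in values}
    for key, value in values.items():
        setattr(settings, key, value)
    try:
        yield settings
    finally:
        for key, old in previous.items():
            if old is _MISSING:
                delattr(settings, key)  # attribute was new, so remove it
            else:
                setattr(settings, key, old)  # put the original value back


# Stand-in object for the demo; not a Dynaconf instance.
fake_settings = SimpleNamespace(DATABASE_URL="sqlite:///testing.db")
with override(fake_settings, DATABASE_URL="sqlite:///custom_test.db"):
    inside = fake_settings.DATABASE_URL
after = fake_settings.DATABASE_URL
```

Because the restore happens in a finally block, the original value comes back even when the test body raises.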

Now that you’re comfortable with the basics, let’s move on to some big boy stuff — How to use Dynaconf with Flask and Django.

Using Dynaconf with Flask

Let’s see how to use Dynaconf with one of the most popular Python web frameworks — Flask!

Set up a Flask app in your src folder. Folder structure is a subjective topic, but for simplicity let’s work with this.

So our repo structure looks like this.

    .
    ├── src
    │   ├── flask_app
    │   │   ├── __init__.py
    │   │   ├── config.py
    │   │   ├── routes.py
    │   │   ├── run.py
    │   │   └── settings.toml

src/flask_app/settings.toml

    [default]
    DEBUG = true
    DATABASE_URL = "sqlite:///default.db"
    SECRET_KEY = "default-secret-key"

    [development]
    DEBUG = true
    DATABASE_URL = "sqlite:///development.db"
    SECRET_KEY = "dev-secret-key"

    [testing]
    DEBUG = false
    DATABASE_URL = "sqlite:///testing.db"
    SECRET_KEY = "test-secret-key"

    [production]
    DEBUG = false
    DATABASE_URL = "postgresql://user:password@localhost/prod_db"
    SECRET_KEY = "prod-secret-key"

Next let’s set up the config file that reads these settings.

src/flask_app/config.py

    from dynaconf import FlaskDynaconf


    def init_app(app):
        """Initialize Dynaconf with Flask app."""
        FlaskDynaconf(app, settings_files=["src/flask_app/settings.toml"])

Note that the FlaskDynaconf class takes a Flask app and a list of settings files.

Let’s set up a simple route.

src/flask_app/routes.py

    from flask import Blueprint, jsonify, current_app

    main = Blueprint("main", __name__)


    @main.route("/")
    def index():
        """Basic route to show the current environment and database URL."""
        return jsonify(
            {
                "environment": current_app.config["ENV_FOR_DYNACONF"],
                "debug": current_app.config["DEBUG"],
                "database_url": current_app.config["DATABASE_URL"],
            }
        )

The root route simply prints the config.

Now let’s create a factory app.

src/flask_app/__init__.py

    from flask import Flask
    from flask_app.routes import main
    from dynaconf import FlaskDynaconf


    def create_app():
        """Create and configure the Flask app."""
        app = Flask(__name__)

        FlaskDynaconf(app)

        app.register_blueprint(main)

        return app

Last but not least let’s create an instance of the app and run it.

src/flask_app/run.py

    from flask_app import create_app

    app = create_app()

    if __name__ == "__main__":
        app.run(host="0.0.0.0", port=5001)

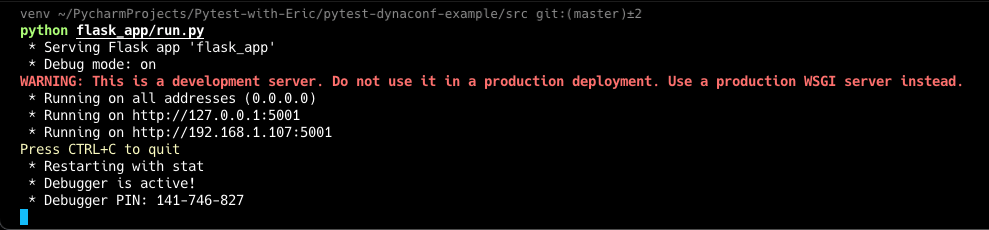

OK, time to fire up the Flask app and see if this works.

I’m running this from the src folder, so make sure to add it to your PYTHONPATH if you haven’t already.

    $ export PYTHONPATH="$PYTHONPATH:."

Now run the app.

    $ python flask_app/run.py

You should see it start up like this.

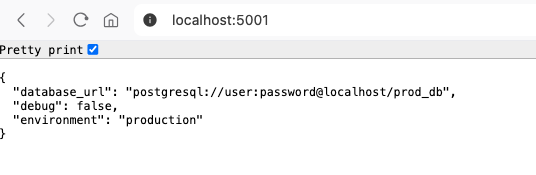

Open http://localhost:5001/ in your browser.

You should see the response.

Let’s change the environment and see how this works.

Press Ctrl+C to stop the app, then set a different value for ENV_FOR_DYNACONF.

    $ export ENV_FOR_DYNACONF=production

Run the app again.

    $ python flask_app/run.py

And that’s it. As simple as that.

Let’s move on to something not as simple, doing the same with Django.

Using Dynaconf with Django

Make sure to install Django.

You can use the Django admin command to generate boilerplate code for you or just copy it from below.

The folder structure is similar to Flask.

    .
    ├── src
    │   ├── django_app
    │   │   ├── __init__.py
    │   │   ├── asgi.py
    │   │   ├── settings.py
    │   │   ├── urls.py
    │   │   ├── views.py
    │   │   ├── wsgi.py
    │   │   ├── manage.py
    │   │   └── settings.toml

I’m running these from the src folder again.

    $ django-admin startproject django_app

Here are the code files.

src/django_app/settings.toml

    [default]
    DEBUG = true
    SECRET_KEY = "default-secret-key"
    ALLOWED_HOSTS = ["localhost", "127.0.0.1"]  # Default allowed hosts
    DATABASES.default.ENGINE = "django.db.backends.sqlite3"
    DATABASES.default.NAME = "db.sqlite3"

    [development]
    DEBUG = true
    ALLOWED_HOSTS = ["localhost", "127.0.0.1"]

    [testing]
    DEBUG = false
    ALLOWED_HOSTS = ["localhost", "127.0.0.1"]

    [production]
    DEBUG = false
    ALLOWED_HOSTS = [
        "your-production-domain.com",
        "www.your-production-domain.com",
    ]  # Add your production domains
    DATABASES.default.ENGINE = "django.db.backends.postgresql"
    DATABASES.default.NAME = "prod_db"
    DATABASES.default.USER = "user"
    DATABASES.default.PASSWORD = "password"
    DATABASES.default.HOST = "localhost"

I’ve included more settings here. For example, production uses Postgres while default uses SQLite.

Here is the next file, which reads in our Dynaconf settings.

src/django_app/settings.py

    import dynaconf  # noqa

    ROOT_URLCONF = "django_app.urls"

    # Hand the settings over to Dynaconf; keep this as the last statement in the file
    settings = dynaconf.DjangoDynaconf(__name__)  # noqa

Then we have some boilerplate files.

src/django_app/asgi.py

    """
    ASGI config for django_app project.

    It exposes the ASGI callable as a module-level variable named ``application``.

    For more information on this file, see
    https://docs.djangoproject.com/en/5.1/howto/deployment/asgi/
    """

    import os

    from django.core.asgi import get_asgi_application

    os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'django_app.settings')

    application = get_asgi_application()

Next, our manage.py.

src/django_app/manage.py

    #!/usr/bin/env python
    """Django's command-line utility for administrative tasks."""
    import os
    import sys


    def main():
        """Run administrative tasks."""
        os.environ.setdefault("DJANGO_SETTINGS_MODULE", "django_app.settings")
        try:
            from django.core.management import execute_from_command_line
        except ImportError as exc:
            raise ImportError(
                "Couldn't import Django. Are you sure it's installed and "
                "available on your PYTHONPATH environment variable? Did you "
                "forget to activate a virtual environment?"
            ) from exc
        execute_from_command_line(sys.argv)


    if __name__ == "__main__":
        main()

Let’s define our view.

src/django_app/views.py

    from django.http import JsonResponse
    from dynaconf import settings as dynaconf_settings


    def index(request):
        """Display the current configuration values."""
        return JsonResponse(
            {
                "environment": dynaconf_settings.current_env,
                "debug": dynaconf_settings.DEBUG,
                "database_url": dynaconf_settings.DATABASES["default"]["NAME"],
            }
        )

Our URL patterns:

src/django_app/urls.py

    from django.urls import path

    from .views import index

    urlpatterns = [
        path("", index),  # Route for the index view
    ]

And lastly, the WSGI file.

src/django_app/wsgi.py

    """
    WSGI config for django_app project.

    It exposes the WSGI callable as a module-level variable named ``application``.

    For more information on this file, see
    https://docs.djangoproject.com/en/5.1/howto/deployment/wsgi/
    """

    import os

    from django.core.wsgi import get_wsgi_application

    os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'django_app.settings')

    application = get_wsgi_application()

Make sure to unset the Dynaconf env variables.1

2$ unset ENV_FOR_DYNACONF

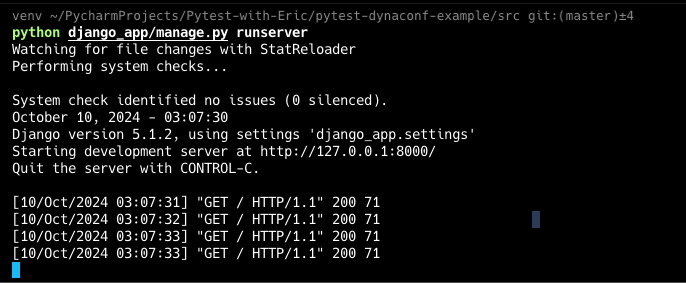

$ python django_app/manage.py runserver

You should see the app start up.

Navigate to http://127.0.0.1:8000/ and you’ll see the response.

Alternatively to ENV_FOR_DYNACONF , you can also set it using the DJANGO_ENV environment variable.

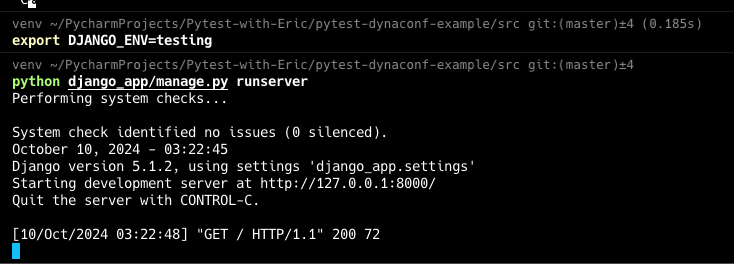

Let’s try that.1

$ export DJANGO_ENV=testing

Looks good.

If you decide to run it with the production settings, make sure you have a Postgres instance running, or switch to SQLite.

So that’s how you can use Django with Dynaconf.

Environment Variables with Dynaconf

Dynaconf simplifies config management by prioritizing environment variables, as recommended by the 12-factor app methodology.

This allows you to manage settings without relying on hardcoded values or configuration files.

To override any setting, simply export an environment variable with the DYNACONF_ prefix. For example:

    $ export DYNACONF_NAME="Eric"
    $ export DYNACONF_DEBUG=false

Dynaconf will automatically parse and convert these values into the appropriate data types (e.g., strings, booleans, lists, or dictionaries).

Additionally, you can customize prefixes or even load environment variables from a .env file using the load_dotenv option.

For custom prefixes:

    from dynaconf import Dynaconf

    settings = Dynaconf(envvar_prefix="ERIC")

You can now define your environment variables like this:

    $ export ERIC_USER=admin
    $ export ERIC_PASSWD=1234

and so on.
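To picture what prefix filtering and type conversion involve, here is a simplified, stand-alone sketch. It is not Dynaconf's parser, which handles more types such as lists and dictionaries. The cast_value and load_prefixed functions are hypothetical helpers, and the conversion rules (booleans, then integers, then floats, otherwise a string) are an assumption of this sketch.

```python
from typing import Any, Dict, Mapping


def cast_value(raw: str) -> Any:
    """Best-effort conversion of an environment string to bool, int, or float."""
    lowered = raw.strip().lower()
    if lowered in ("true", "false"):
        return lowered == "true"
    for convert in (int, float):
        try:
            return convert(raw)
        except ValueError:
            continue  # not this numeric type, try the next one
    return raw  # anything else stays a string


def load_prefixed(environ: Mapping[str, str], prefix: str = "DYNACONF") -> Dict[str, Any]:
    """Collect PREFIX_* variables, strip the prefix, and cast the values."""
    marker = f"{prefix}_"
    return {
        name[len(marker):]: cast_value(value)
        for name, value in environ.items()
        if name.startswith(marker)
    }
```

Note one consequence of automatic casting in this sketch: a numeric-looking value such as 1234 comes back as an integer, which matters if the setting is meant to be a password string.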

For advanced use cases, Dynaconf supports lazy values, custom data types, and integration with external services like Redis or Vault for secure configuration management.

This flexibility makes it a powerful tool for handling environment-specific configurations effortlessly.

Managing Secrets with Dynaconf

Perhaps you’re wondering: environment variables work great, but what about security?

What if you need to pass API keys or database credentials to the app, which is an extremely common requirement? Do you expose those as plain environment variables?

Of course not.

One way is to store secrets in an external secrets manager like AWS Secrets Manager, Azure Key Vault, or HashiCorp Vault and access them via API calls.

Unfortunately this adds a bit of latency which may not be acceptable to some applications.

Dynaconf offers several secure and flexible ways to manage secrets across environments.

Below are some key methods:

1. Using .secrets Files

Dynaconf supports storing sensitive data in a .secrets file (e.g., .secrets.toml, .secrets.yaml, etc.).

This file can contain secret values like passwords and API keys, and can be excluded from version control (e.g., by adding .secrets.* to .gitignore) to prevent accidental exposure in public repositories.

Example .secrets.toml:

    password = "super-secret-password"
    api_key = "1234567890abcdef"

This method is ideal for local development, as it keeps sensitive data separate from your main configuration files.
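Since the whole point of a .secrets file is that it never reaches version control, it can be worth checking that your ignore rules actually cover it. The sketch below is a deliberately simplified check, assuming plain glob patterns only. Real .gitignore syntax also supports negation, directory rules, and more, which this ignores. The unignored_secret_files function is a hypothetical helper.

```python
from fnmatch import fnmatch
from typing import Iterable, List


def unignored_secret_files(gitignore_text: str, secret_files: Iterable[str]) -> List[str]:
    """Return the secret file names that no .gitignore pattern matches."""
    patterns = [
        line.strip()
        for line in gitignore_text.splitlines()
        if line.strip() and not line.lstrip().startswith("#")  # skip blanks and comments
    ]
    return [
        name
        for name in secret_files
        if not any(fnmatch(name, pattern) for pattern in patterns)
    ]
```

For a definitive answer on a real repository, git check-ignore is the authoritative tool; this sketch only illustrates the idea.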

2. Vault Integration

For more secure storage, Dynaconf can load secrets from a Vault server (e.g., HashiCorp Vault).

By using Vault, secrets are stored securely and can be dynamically retrieved by your application without hardcoding them.

To use Vault with Dynaconf:

- Set up a Vault server (locally or via Docker).

- Install Dynaconf with Vault support: pip install dynaconf[vault]

- Configure Vault in your .env or environment variables:

      VAULT_ENABLED_FOR_DYNACONF=true
      VAULT_URL_FOR_DYNACONF="http://localhost:8200"
      VAULT_TOKEN_FOR_DYNACONF="your-root-token"

Once configured, Dynaconf will read secrets directly from Vault, keeping your sensitive data secure and easy to manage across environments.

3. Redis for Secrets Storage

In scenarios where you need to distribute secrets across multiple instances or update them without redeploying the application, Redis can be used as a centralized store for settings and secrets.

To use Redis:

- Set up Redis via Docker: docker run -d -p 6379:6379 redis

- Install Dynaconf with Redis support: pip install dynaconf[redis]

- Configure Redis in your .env or environment variables:

      REDIS_ENABLED_FOR_DYNACONF=true

Secrets can now be stored and accessed from Redis, ensuring they are centrally managed and securely distributed across all instances of your application.

Storing sensitive data in Redis should be done carefully with proper access controls to avoid unauthorized access.

4. Using .env Files

For simple use cases, Dynaconf can load environment variables from a .env file.

This file can hold your secrets in plain text, which is then loaded by Dynaconf when the application starts. To enable .env file loading, use:

    from dynaconf import Dynaconf

    settings = Dynaconf(load_dotenv=True)

Example .env file:

    DYNACONF_API_KEY=12345abcdef
    DYNACONF_DB_PASSWORD=supersecurepassword

Make sure to exclude .env or secret files from version control.
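If you are curious what loading a .env file amounts to, the following sketch parses the simple KEY=VALUE format shown above. It is a minimal illustration and not the loader Dynaconf uses. The parse_dotenv function is a hypothetical helper that skips blank lines and comments and strips surrounding quotes; features such as variable expansion or multi-line values are out of scope.

```python
from typing import Dict


def parse_dotenv(text: str) -> Dict[str, str]:
    """Parse KEY=VALUE lines, skipping blank lines and # comments."""
    values: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")  # split on the first "=" only
        values[key.strip()] = value.strip().strip("\"'")  # drop surrounding quotes
    return values
```

Every value stays a string here; any type conversion would be a separate step.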

Armed with all this Dynaconf knowledge, let’s look at some commonly asked questions.

Frequently Asked Questions about Dynaconf

To save you time, here are responses to some common questions, taken from the official Dynaconf documentation.

How can I dynamically set the path for settings files across multiple environments?

If you need to set different settings files for different environments, you can do it in two ways:

- Directly in your Dynaconf instance: Dynaconf(settings_file="path/to/settings.toml")

- Via environment variables: export SETTINGS_FILE_FOR_DYNACONF="path/to/settings"

Environment-specific settings will override instance-level settings.

Why does running tests in my IDE throw an “OSError: Starting path not found,” but it works in CLI?

This issue arises because Dynaconf relies on the current working directory (CWD).

Some IDEs, like PyCharm, may change the CWD when running tests, leading to this error.

Fix it by ensuring the working directory in your IDE’s test configuration points to your project’s root.
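If you would rather not depend on the working directory at all, another option is to locate the settings file relative to a known file and pass the resulting path to Dynaconf explicitly. The sketch below shows only the lookup, using the standard library. The find_upwards function is a hypothetical helper, and the temporary directory layout exists purely for the demo.

```python
import tempfile
from pathlib import Path
from typing import Optional


def find_upwards(start: Path, filename: str) -> Optional[Path]:
    """Search `start` and each of its parent directories for `filename`."""
    start = start.resolve()
    for directory in (start, *start.parents):
        candidate = directory / filename
        if candidate.is_file():
            return candidate
    return None


# Demo on a throwaway layout: settings.toml at the root, search from tests/unit.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "settings.toml").write_text("[default]\n")
    nested = root / "tests" / "unit"
    nested.mkdir(parents=True)
    found = find_upwards(nested, "settings.toml")
    found_in_root = found is not None and found.parent == root.resolve()
```

In a real project you might start the search from Path(__file__).parent, which mirrors the root_path argument used in src/config.py earlier.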

How do I force Dynaconf to use a specific environment for all tests in Pytest?

You can create a Pytest fixture to configure Dynaconf to use a specific environment, such as “testing,” for all tests.

This ensures that every test runs with the same environment settings.

    import pytest
    from dynaconf import settings


    @pytest.fixture(scope="session", autouse=True)
    def set_test_settings():
        """Force the use of the 'testing' environment for all tests."""
        settings.configure(FORCE_ENV_FOR_DYNACONF="testing")

How do I test different configurations in Dynaconf without monkeypatch?

You can configure Dynaconf settings within the test itself or use Pytest fixtures to manage different configurations.

Use settings.configure() to dynamically set different configurations in each test.

    from dynaconf import settings


    def test_different_env():
        settings.configure(FORCE_ENV_FOR_DYNACONF="development")
        assert settings.current_env == "development"


    def test_production_env():
        settings.configure(FORCE_ENV_FOR_DYNACONF="production")
        assert settings.current_env == "production"

How can I validate Dynaconf settings across all tests?

You can create a fixture that sets the environment and uses validators to ensure your settings are properly configured.

Validators can be checked after settings.configure() is called in your tests.

    import pytest
    from dynaconf import Validator, settings


    @pytest.fixture(scope="session", autouse=True)
    def validate_test_settings():
        settings.validators.register(Validator("DB_HOST", must_exist=True))
        settings.configure(FORCE_ENV_FOR_DYNACONF="testing")
        settings.validators.validate()


    def test_db_host():
        assert settings.DB_HOST == "localhost"
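Conceptually, a must_exist check boils down to asking which required keys are absent. The tiny sketch below shows that idea on a plain dictionary. It is not how Dynaconf validators are implemented. The missing_settings function is a hypothetical helper, and treating a None value as missing is an assumption of this sketch.

```python
from typing import Any, Iterable, List, Mapping


def missing_settings(settings: Mapping[str, Any], required: Iterable[str]) -> List[str]:
    """Return the required keys that are absent or set to None."""
    return [key for key in required if settings.get(key) is None]
```

Reporting every missing key at once, rather than failing on the first, tends to make a broken test configuration quicker to fix.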

Can I change Dynaconf settings dynamically between different tests?

Yes, you can change Dynaconf settings between tests by calling settings.configure() within each test.

This allows you to run tests under different environments or configurations without using monkeypatch.

    from dynaconf import settings


    def test_with_testing_env():
        settings.configure(FORCE_ENV_FOR_DYNACONF="testing")
        assert settings.DB_HOST == "localhost"


    def test_with_production_env():
        settings.configure(FORCE_ENV_FOR_DYNACONF="production")
        assert settings.DB_HOST == "prod-db-host"

How do I configure Dynaconf settings globally across all tests in a session?

You can create a session-scoped fixture that applies the required settings across all tests in the suite. This avoids having to configure the environment in each test manually.

    import pytest
    from dynaconf import settings


    @pytest.fixture(scope="session", autouse=True)
    def configure_global_settings():
        """Globally configure settings for the entire test session."""
        settings.configure(FORCE_ENV_FOR_DYNACONF="testing")

By using settings.configure() within your tests and Pytest fixtures, you can efficiently manage Dynaconf settings without relying on monkeypatch, ensuring isolation and consistency across different environments.
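When a test needs to change real environment variables rather than settings, the same concern about isolation applies: whatever is set must be put back. A small standard-library context manager can do that without monkeypatch. This is a generic sketch, with temporary_env as a hypothetical helper name. Whether Dynaconf notices the change depends on when your settings object is loaded or reloaded, which this sketch does not address.

```python
import os
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def temporary_env(**variables: str) -> Iterator[None]:
    """Set environment variables for the duration of the block, then restore them."""
    saved = {name: os.environ.get(name) for name in variables}
    os.environ.update(variables)
    try:
        yield
    finally:
        for name, old in saved.items():
            if old is None:
                os.environ.pop(name, None)  # variable did not exist before
            else:
                os.environ[name] = old  # restore the previous value


# Demo: the variable is visible inside the block and restored afterwards.
before = os.environ.get("ENV_FOR_DYNACONF")
with temporary_env(ENV_FOR_DYNACONF="testing"):
    during = os.environ["ENV_FOR_DYNACONF"]
restored = os.environ.get("ENV_FOR_DYNACONF") == before
```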

Best Practices for Using and Testing Config Files in Python

Here is a compilation of best practices from my experience and The Twelve-Factor App.

- Separate Config from Code: Store all configurations, especially sensitive data like API keys or database credentials, in environment variables or external configuration files (not in code). This ensures your app can be safely deployed across multiple environments without exposing sensitive details.

- Use Environment-Specific Configurations: For each environment (development, testing, production), maintain isolated configuration files or settings, leveraging tools like Dynaconf for easy management. This keeps settings consistent and prevents configuration conflicts.

- Leverage Environment Variables: Store key settings in environment variables to make switching between environments seamless. This method is platform-agnostic and ensures flexibility across deployments.

- Utilize .secrets Files: Keep sensitive information like passwords and API tokens in .secrets files or secure vaults, and exclude them from version control to protect against accidental exposure.

- Test with Isolated Configurations: Ensure your tests use environment-specific configurations by using fixtures or configuring settings dynamically. This isolation prevents cross-test interference and ensures accurate test results.

- Validate and Reload Configurations: Use validators to ensure the presence and correctness of critical settings, and always reload configurations after making dynamic changes in tests to apply updates correctly.

- Version Control Best Practices: Keep configuration templates (e.g., .env.example) in version control for easier setup but exclude sensitive files. This ensures a smooth onboarding process while maintaining security.

- Use a Consistent Configuration Format: Stick to a single format like TOML, YAML, or JSON across your project to simplify parsing and validation.

Conclusion

In this article, you learned how to effectively manage configurations in Python using Dynaconf including overwriting settings for tests.

We explored why Dynaconf is a powerful tool for handling environment-specific settings to maintain isolation and security.

You now know how to configure Dynaconf for your tests with Pytest fixtures, dynamically switch environments, and handle sensitive secrets securely.

Whether you’re working with Flask, Django, or any other Python framework, you can now confidently manage configurations without risking accidental production contamination.

Now it’s time to take action!

Try using Dynaconf in your own projects and experience how it simplifies your configuration management.

If you have any questions or would like to share your experience, feel free to get in touch. I’d love to hear how it’s working for you!

Cheers!