How To Measure And Improve Test Coverage With Poetry And Pytest

Are you using Poetry to manage your Python projects but unsure how to generate coverage reports?

Or perhaps you’re wondering about the difference between line coverage and branch coverage, and how each affects code and test quality.

What about configuring coverage reports to run seamlessly in your CI/CD pipeline?

This article will answer all your coverage questions and more.

You’ll learn how to effectively run and generate coverage reports using Poetry, a powerful package and dependency manager.

We’ll dive into the differences between line coverage and branch coverage, explore various report formats like HTML, terminal, and JSON, and show you how to tailor your coverage setup to meet your specific needs.

Finally, we’ll walk through integrating coverage reporting with GitHub Actions to automate report generation and display coverage badges in your repository. We’ll also introduce pytest-cov, a popular Pytest plugin.

By the end, you’ll be fully equipped to incorporate test coverage into your development workflow. Let’s get started!

What is Poetry?

Poetry is a powerful package and dependency manager for Python, offering significant advantages over traditional tools like Pip, Pipenv, or even Conda.

Unlike these tools, Poetry makes dependency management easy by using the pyproject.toml file, which neatly handles project configuration, dependencies, and build settings in one place.

One of its best features is its lock file (poetry.lock), which records the exact version resolved for every dependency, so upgrades within the allowed patch or minor range only happen when you explicitly update.

This guarantees a more predictable and stable environment for your projects.

If you’re new to Poetry, I recommend checking out our detailed guide on How to Run Pytest with Poetry.

Now that we’ve got the basics of Poetry, let’s dive into coverage reports — what they are and why they play a crucial role in maintaining code quality.

What are Coverage Reports?

Coverage reports provide insight into how much of your codebase is executed by tests, expressed as a percentage.

They measure the portion of your code that is run during tests, but it’s important to note that this is not an indicator of code quality or how bug-free it is.
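As a rough illustration of the arithmetic behind that percentage, here is a minimal sketch. The function name and the statement counts are hypothetical and chosen only for the example; coverage.py’s own accounting (for instance around excluded lines and branches) is more involved.

```python
def coverage_percent(total_statements: int, missed_statements: int) -> float:
    """Return the share of statements that ran, as a percentage."""
    if total_statements == 0:
        # Nothing to measure; treating an empty file as fully covered is an assumption.
        return 100.0
    executed = total_statements - missed_statements
    return 100.0 * executed / total_statements


# Hypothetical numbers: 50 statements, 3 of them never executed by any test.
print(f"{coverage_percent(50, 3):.0f}%")  # prints "94%"
```

Note what the number does not say: the 47 executed statements ran at least once, but nothing here checks that the tests asserted anything useful about them.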

While some may view coverage as a vanity metric, I believe it’s valuable.

Coverage reports help you think critically about potential test cases and edge scenarios — particularly when it comes to branch coverage, which we’ll explore later.

Pairing coverage reports with tools like Hypothesis for property-based testing can further strengthen your testing strategy.

We’ve touched on coverage reporting in past articles, but here we’ll dive deeper to highlight which aspects are truly beneficial and worth your focus.

The most widely-used tool for Python is the coverage.py library, with plugins like pytest-cov that seamlessly integrate with Pytest to extend its capabilities.

For those interested in how the coverage library works, I highly recommend checking this out.
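To build some intuition first, here is a toy sketch of line tracing using Python’s standard sys.settrace hook. It is not coverage.py’s implementation, which is far more sophisticated; the trace_lines helper is a hypothetical name, and the divide function simply mirrors the kind of code we will test below.

```python
import sys


def trace_lines(func, *args):
    """Call func(*args) and return the line offsets (relative to its def line) that ran."""
    executed = set()
    target = func.__code__

    def tracer(frame, event, arg):
        # Only follow frames that belong to the function under observation.
        if frame.f_code is not target:
            return None
        if event == "line":
            executed.add(frame.f_lineno - target.co_firstlineno)
        return tracer

    sys.settrace(tracer)
    try:
        func(*args)
    finally:
        sys.settrace(None)  # always switch tracing off again
    return executed


def divide(a, b):
    if b == 0:
        raise ValueError("Cannot divide by zero.")
    return a / b


# Offsets 1 and 3 ran (the `if` and the `return`); offset 2 (the `raise`) did not.
print(sorted(trace_lines(divide, 10, 2)))  # prints [1, 3]
```

A coverage report is, in essence, the difference between the lines that could run and the lines recorded this way.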

There’s a lot more to talk about but for now, let’s cut to the chase and learn how to generate coverage reports with Poetry and coverage.py.

How To Generate Test Coverage Reports With Poetry

Let’s start with some example code to get a feel for the application.

I’ve kept it very simple so we can focus on the coverage side of things.

Step 1: Install Poetry if you haven’t already.

Step 2: Set your environment to a recent version of Python. This example uses 3.12.3 (set using Pyenv).

Step 3: Clone the example code repo here, or create your own and run poetry init to generate your pyproject.toml file.

Step 4: Run poetry install to create a virtual environment and install dependencies. In this case, the only dependency is pytest, which we’ve added under the dev dependencies group.

Step 5: You can add new packages with poetry add, for example poetry add requests, and constrain them with a version specifier such as poetry add "requests@^2.31".

Example Code

Our example code is a simple MathOperations class.

src/math_operations.py

    class MathOperations:
        def add(self, a, b):
            return a + b

        def subtract(self, a, b):
            return a - b

        def multiply(self, a, b):
            return a * b

        def divide(self, a, b):
            if b == 0:
                raise ValueError("Cannot divide by zero.")
            return a / b

        def power(self, a, b):
            return a**b

        def square_root(self, a):
            if a < 0:
                raise ValueError("Cannot calculate square root of negative number.")
            return a**0.5

        def factorial(self, a):
            if a < 0:
                raise ValueError("Cannot calculate factorial of negative number.")
            if a == 0:
                return 1
            return a * self.factorial(a - 1)

Let’s write some tests.

Tests

tests/test_math_operation.py

    import pytest

    from src.math_operations import MathOperations


    # Shared fixture: each test receives a fresh MathOperations instance.
    @pytest.fixture
    def math_ops():
        return MathOperations()


    def test_add(math_ops):
        assert math_ops.add(10, 5) == 15
        assert math_ops.add(-1, 1) == 0


    def test_subtract(math_ops):
        assert math_ops.subtract(10, 5) == 5
        assert math_ops.subtract(5, 10) == -5


    def test_multiply(math_ops):
        assert math_ops.multiply(3, 4) == 12
        assert math_ops.multiply(0, 5) == 0


    def test_divide(math_ops):
        assert math_ops.divide(10, 2) == 5


    def test_power(math_ops):
        assert math_ops.power(2, 3) == 8
        assert math_ops.power(5, 0) == 1


    def test_square_root(math_ops):
        assert math_ops.square_root(9) == 3


    def test_factorial(math_ops):
        assert math_ops.factorial(5) == 120
        assert math_ops.factorial(0) == 1

Now we think our application and tests are good enough.

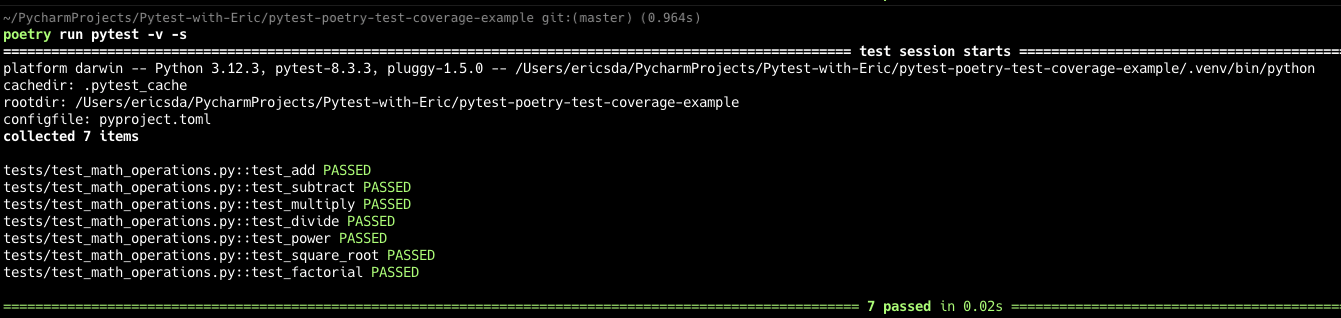

Before checking any coverage, let’s run our tests to make sure they pass.

    poetry run pytest -v -s

Note — I prefer to run commands with poetry from outside the shell but you can also run $ poetry shell and then run pytest like you normally would.

Great, now let’s check the coverage.

Install Coverage.py

Let’s go ahead and install coverage.py.

It’s a simple pip install, or with Poetry you can run:

    poetry add -G dev coverage

This adds the coverage package to the dev group and installs it into your virtual environment.

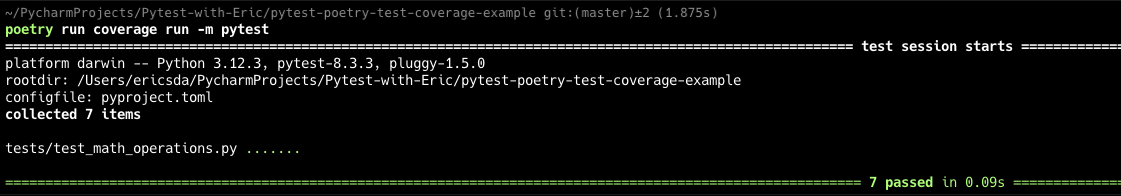

Run Coverage Report

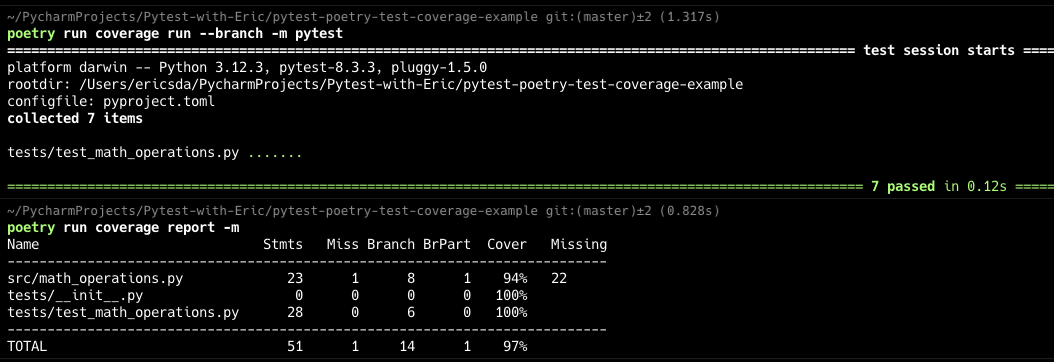

You can run the command below to run your test suite and gather coverage data. Here arg1 arg2 arg3 stand in for whatever arguments you would normally pass to pytest.

    poetry run coverage run -m pytest arg1 arg2 arg3

If you’re within the poetry shell you can simply run:

    coverage run -m pytest arg1 arg2 arg3

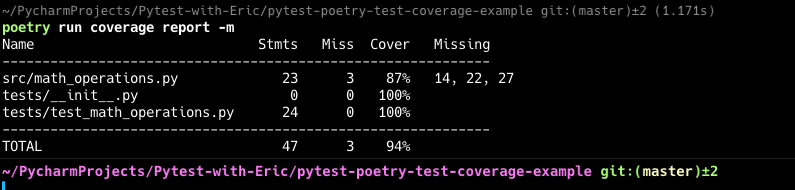

You can report on the results within the terminal using the command:

    poetry run coverage report -m

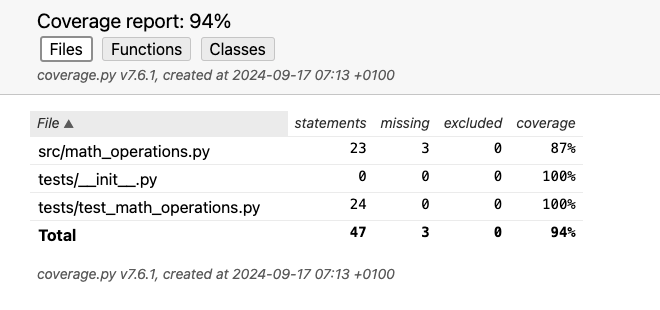

94%!

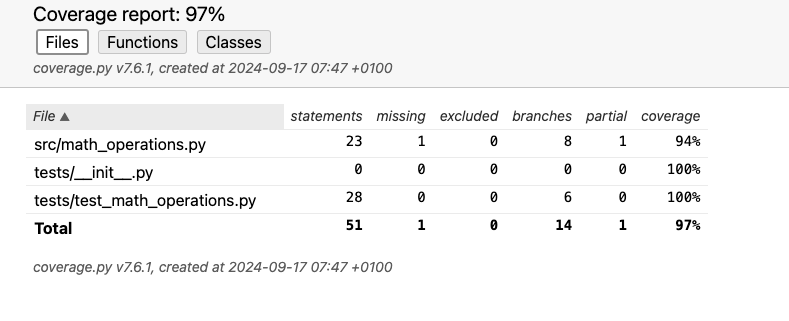

Decent but what’s not tested? Let’s take a look at the HTML report.

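To generate an HTML report you can run:

    poetry run coverage html

This writes the report to the default directory, htmlcov, with htmlcov/index.html as the entry point. You can override the location with the --directory option or the directory setting under [tool.coverage.html].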

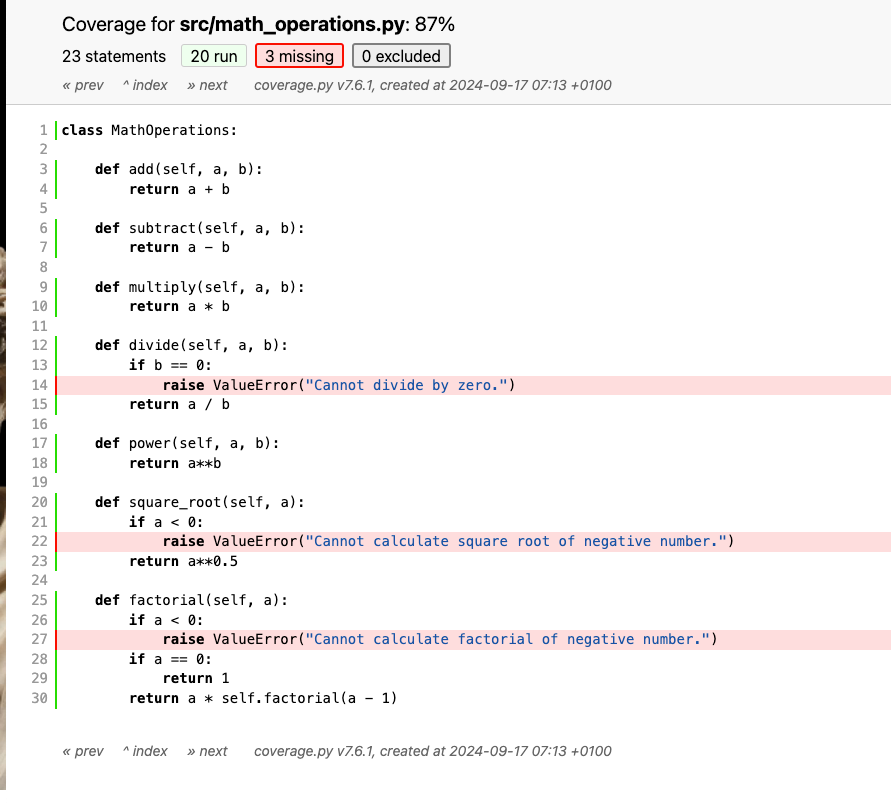

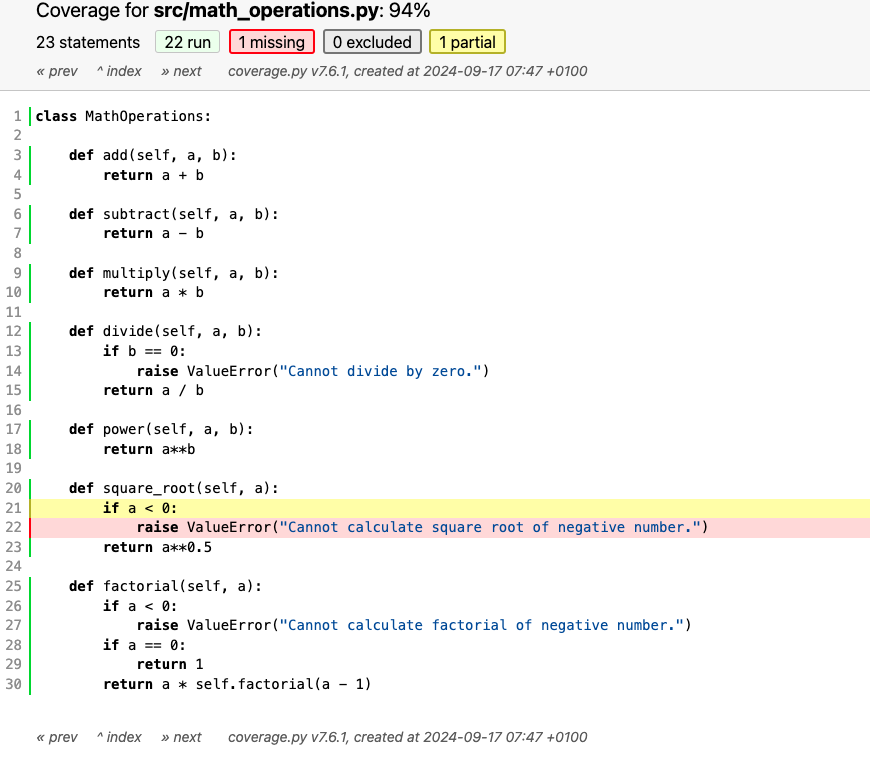

You can see that we haven’t tested the exceptions.

So let’s write some tests for those. Refactoring our tests,

tests/test_math_operation.py

    def test_divide(math_ops):
        assert math_ops.divide(10, 2) == 5
        with pytest.raises(ValueError, match="Cannot divide by zero."):
            math_ops.divide(10, 0)


    def test_square_root(math_ops):
        assert math_ops.square_root(9) == 3
        with pytest.raises(
            ValueError, match="Cannot calculate square root of negative number."
        ):
            math_ops.square_root(-1)


    def test_factorial(math_ops):
        assert math_ops.factorial(5) == 120
        assert math_ops.factorial(0) == 1
        with pytest.raises(
            ValueError, match="Cannot calculate factorial of negative number."
        ):
            math_ops.factorial(-1)

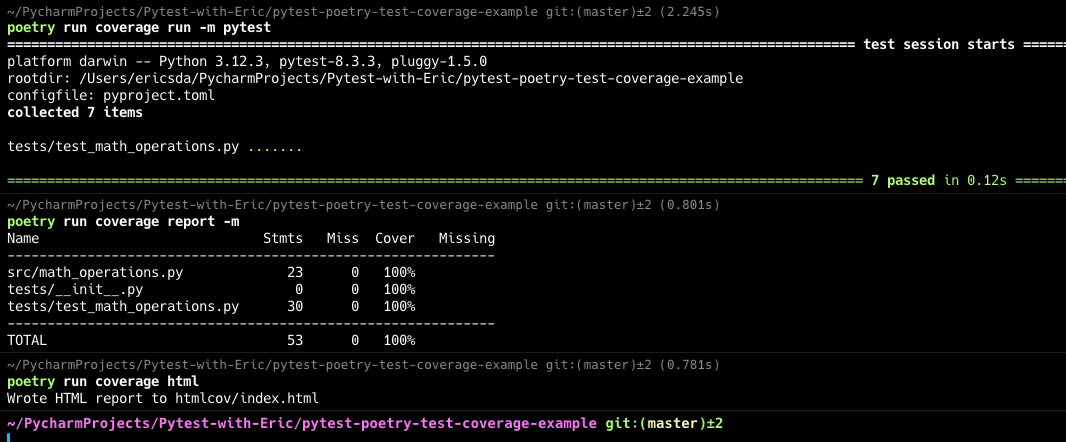

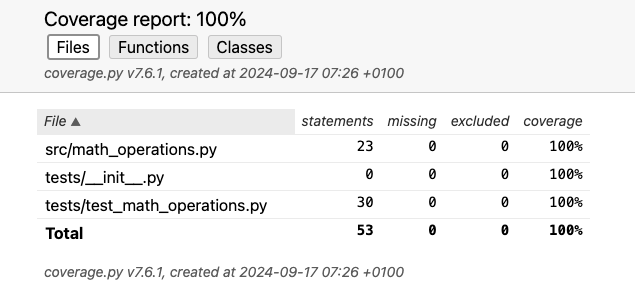

Great — 100% coverage.

This is the bare bones of how to use a coverage report.

Let’s look at some more advanced concepts.

Line Coverage vs Branch Coverage

Line coverage measures the percentage of code lines executed by your tests, while branch coverage tracks whether all possible paths (branches) of conditional statements are tested.

Line coverage alone can miss critical paths, which is why branch coverage provides a more comprehensive view of your tests.

    def is_positive(n):
        if n > 0:
            return True
        else:
            return False

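In this example each outcome sits on its own line, so a test suite that reaches 100% line coverage has necessarily exercised both n > 0 and n <= 0. The two metrics diverge when a condition has no else: a single test can execute every line while the path that skips the if body is never taken, and only branch coverage reports that gap.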
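A small sketch makes the gap between the two metrics concrete. The clamp_to_zero helper is hypothetical and not part of the example repo; it is just the simplest shape of an if without an else.

```python
def clamp_to_zero(n):
    # Hypothetical helper: negative inputs become 0, everything else passes through.
    if n < 0:
        n = 0
    return n


# This single check executes every line above, so line coverage reports 100%...
assert clamp_to_zero(-5) == 0

# ...yet the path where `n < 0` is False (skipping the `if` body) was never taken.
# Branch coverage would flag that until a second check like this one is added:
assert clamp_to_zero(3) == 3
```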

To see how this works, let’s modify one of the tests and remove the check for the negative square root.

    def test_square_root(math_ops):
        assert math_ops.square_root(9) == 3

You can measure branch coverage with the --branch flag, which makes coverage.py record which outcomes of each conditional were actually taken.

    poetry run coverage run --branch -m pytest

The HTML report shows us which branch of the source code was not tested.

This is valuable information and allows you to visualize branches of your code that haven’t been well-tested.

Including and Excluding Files From Coverage

While coverage is great, it’s important to understand its design.

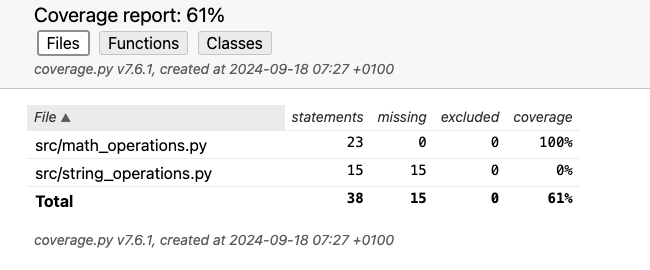

By default, coverage.py only reports on files that are imported or executed during testing, so any unimported files will not be included in the report unless you specify them using the --source option.

So if you or your colleague has bits of code that aren’t tested, that’s not going to show up in your coverage report (by default).

The good news is you can change this behavior.

By specifying the source directory, you can tell coverage.py to look at all files in that directory and check whether they are being tested.

Let’s see how this works in practice.

Here’s a second module — StringOperations.

src/string_operations.py

    class StringOperations:
        def capitalize(self, text: str) -> str:
            return text.capitalize()

        def title(self, text: str) -> str:
            return text.title()

        def upper(self, text: str) -> str:
            return text.upper()

        def lower(self, text: str) -> str:
            return text.lower()

        def swapcase(self, text: str) -> str:
            return text.swapcase()

        def reverse(self, text: str) -> str:
            return text[::-1]

        def strip(self, text: str) -> str:
            return text.strip()

Perhaps your new colleague wrote this module but didn’t write tests yet. How would you know that this module doesn’t have tests?

You could check by hand, of course, but that gets hard in a large code base with dozens of modules.

Solution: use the --source flag to tell coverage.py which directory to treat as the source.

    poetry run coverage run --source=./src -m pytest
    poetry run coverage html

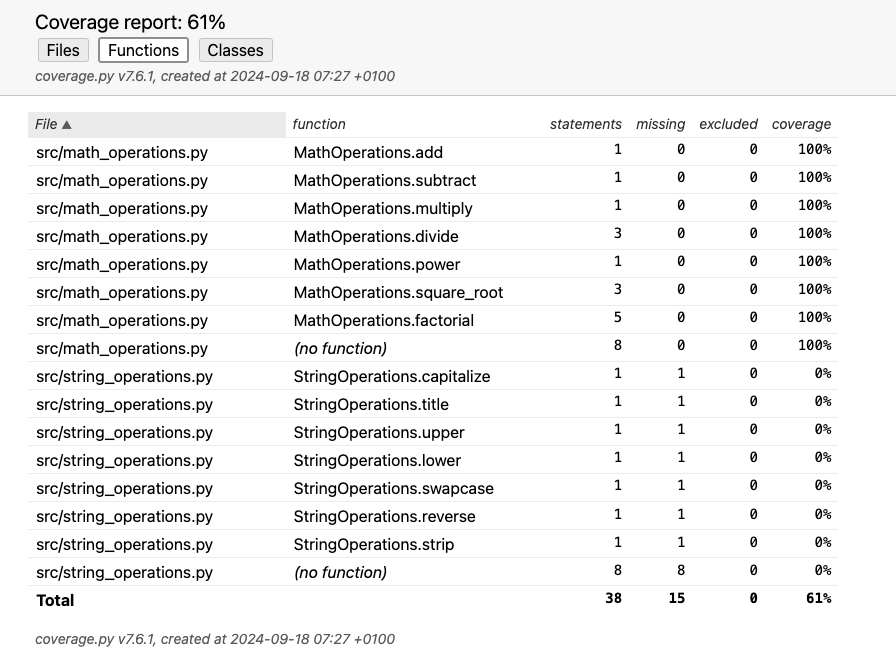

The report now shows a coverage of 61%, with a clear indication that src/string_operations.py is not tested.

This allows you to ensure all modules in a source code folder have been tested.
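Conceptually, the source setting closes the gap between the files that exist on disk and the files that were actually imported during the test run. The sketch below shows only that comparison; the unmeasured_files helper is a hypothetical name, and it builds a throwaway directory so it can run anywhere. It is not how coverage.py is implemented.

```python
import tempfile
from pathlib import Path


def unmeasured_files(source_dir, measured):
    """Return .py files under source_dir that are absent from the measured set."""
    on_disk = {p.resolve() for p in Path(source_dir).rglob("*.py")}
    seen = {Path(m).resolve() for m in measured}
    return sorted(on_disk - seen)


with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "src"
    src.mkdir()
    (src / "math_operations.py").touch()
    (src / "string_operations.py").touch()

    # Pretend the test run only ever imported math_operations.py.
    missing = unmeasured_files(src, [src / "math_operations.py"])
    print([p.name for p in missing])  # prints ['string_operations.py']
```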

Excluding Files From Coverage

On the other side of the spectrum is exclusion.

Maybe you have legacy code that’s no longer used or debugging code.

Whatever your reason, the makers of coverage.py have thought about this and included several ways to do this.

The easiest way to do this is to add a # pragma: no cover comment to the code.

You can use # pragma: no cover at various levels: individual lines, functions, or entire classes, depending on the scope of exclusion.

For example, let’s say the StringOperations module is legacy code and is no longer used so there’s no need to include it in coverage reports.

Adding the comment at a class level excludes the entire class from test coverage.

    class StringOperations:  # pragma: no cover

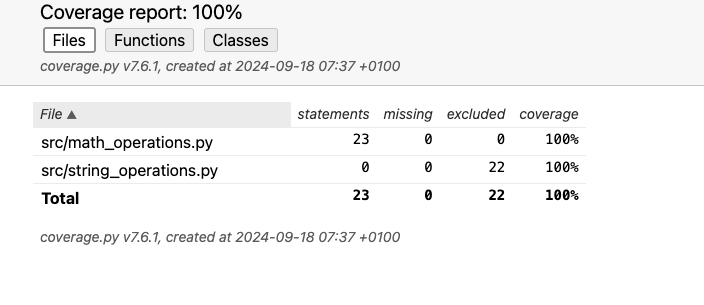

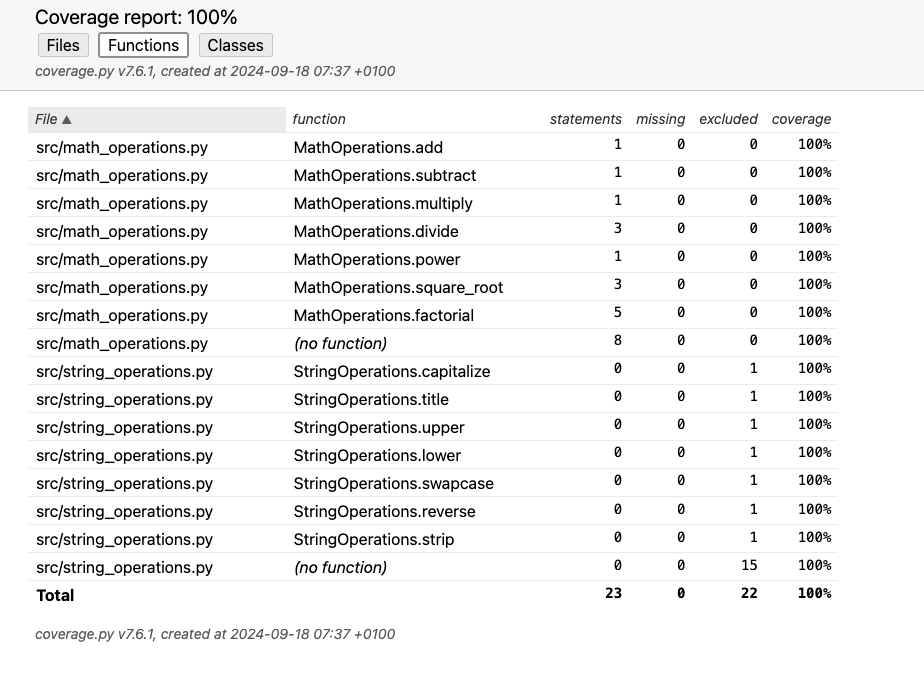

The coverage report now looks like this — the StringOperations module is marked as excluded.

You can read more about file exclusion and how to define regex patterns here.
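The same comment also works at a finer grain than a whole class. Here is a short sketch with two hypothetical functions (they are not part of the example repo) showing the comment on a single branch and on a whole function.

```python
import sys


def parse_port(value):
    port = int(value)
    # The marker on the `if` line excludes the `if` and its whole body.
    if port < 0:  # pragma: no cover
        raise ValueError("Port must not be negative.")
    return port


# The marker on the `def` line excludes the entire function.
def debug_dump(obj):  # pragma: no cover
    print(repr(obj), file=sys.stderr)


print(parse_port("8080"))  # prints 8080
```

Excluded lines are left out of the totals, so use the marker sparingly: it hides code from the report rather than testing it.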

Configuring Coverage Reports with Config Files

Like me, perhaps you don’t enjoy piling on CLI commands every time you want to run and generate coverage.

So how can you configure coverage.py to suit your needs?

The solution is to move those options into a config file.

Pytest reads its configuration from any of these files:

- pytest.ini

- pyproject.toml

- setup.cfg

- tox.ini

coverage.py accepts .coveragerc, setup.cfg, tox.ini, or pyproject.toml. Since this article is about Poetry and pyproject.toml, let’s add the coverage settings to that file.

pyproject.toml

    [tool.poetry]
    name = "pytest-poetry-test-coverage-example"
    version = "0.1.0"
    description = ""
    authors = ["Your Name <you@example.com>"]
    readme = "README.md"

    [tool.poetry.dependencies]
    python = "^3.12"

    [tool.poetry.group.dev.dependencies]
    pytest = "^8.3.3"
    coverage = "^7.6.1"

    [tool.coverage.run]
    branch = true
    source = ["src"]
    dynamic_context = "test_function"

    [tool.coverage.report]
    show_missing = true
    fail_under = 80

    [tool.coverage.html]
    directory = "htmlcov"

    [build-system]
    requires = ["poetry-core"]
    build-backend = "poetry.core.masonry.api"

We’ve included some settings under the [tool.coverage.*] groups.

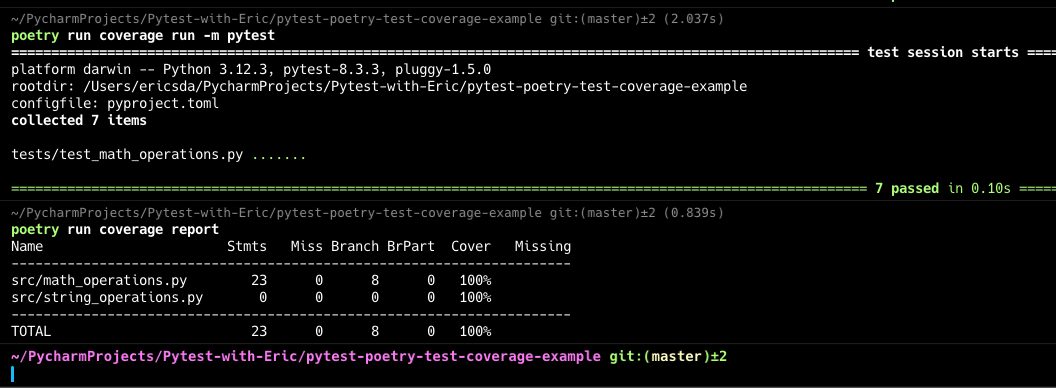

Now you only need to run:

    poetry run coverage run -m pytest
    poetry run coverage report

This gives you branch coverage and obeys all the settings you’ve defined.

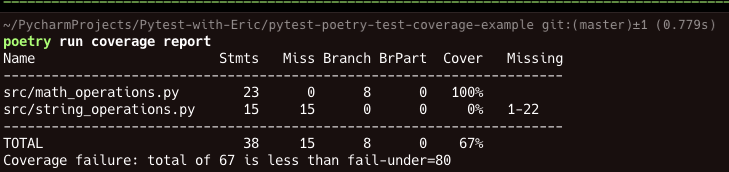

You may have noticed the fail_under setting. What’s that about?

How To Fail Coverage Below X%?

Coverage.py has a neat feature that reports a failure when total coverage falls below a chosen threshold.

Note that fail_under does not fail any individual test. It makes the coverage report command exit with a non-zero status (2) when the total falls below the threshold, and it doesn’t provide individual thresholds for specific files or modules.

In the above config, we asked for a failure if coverage drops below 80%.

Let’s try this out.

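To see it in action, remove the # pragma: no cover comment from the string_operations.py module and run the two commands again. The untested module now counts against the total, which drops below the 80% threshold.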

While some may argue this is vanity, it’s still a useful feature to have and important to know.
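Because fail_under only looks at the total, a per-file check has to live outside coverage.py. The sketch below assumes a dictionary shaped like the output of coverage json, that is, a "files" mapping whose entries carry a "summary" with "percent_covered". That shape, the helper name, and the percentages are assumptions for illustration, so compare them against a real report before relying on this.

```python
def files_below_threshold(report, threshold):
    """Return {path: percent} for every file whose coverage is under threshold."""
    return {
        path: data["summary"]["percent_covered"]
        for path, data in report["files"].items()
        if data["summary"]["percent_covered"] < threshold
    }


# Hypothetical report fragment with made-up percentages.
report = {
    "files": {
        "src/math_operations.py": {"summary": {"percent_covered": 100.0}},
        "src/string_operations.py": {"summary": {"percent_covered": 50.0}},
    }
}

print(files_below_threshold(report, 80))  # prints {'src/string_operations.py': 50.0}
```

A CI step could exit with a non-zero status whenever this dictionary is non-empty, mirroring what fail_under does for the total.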

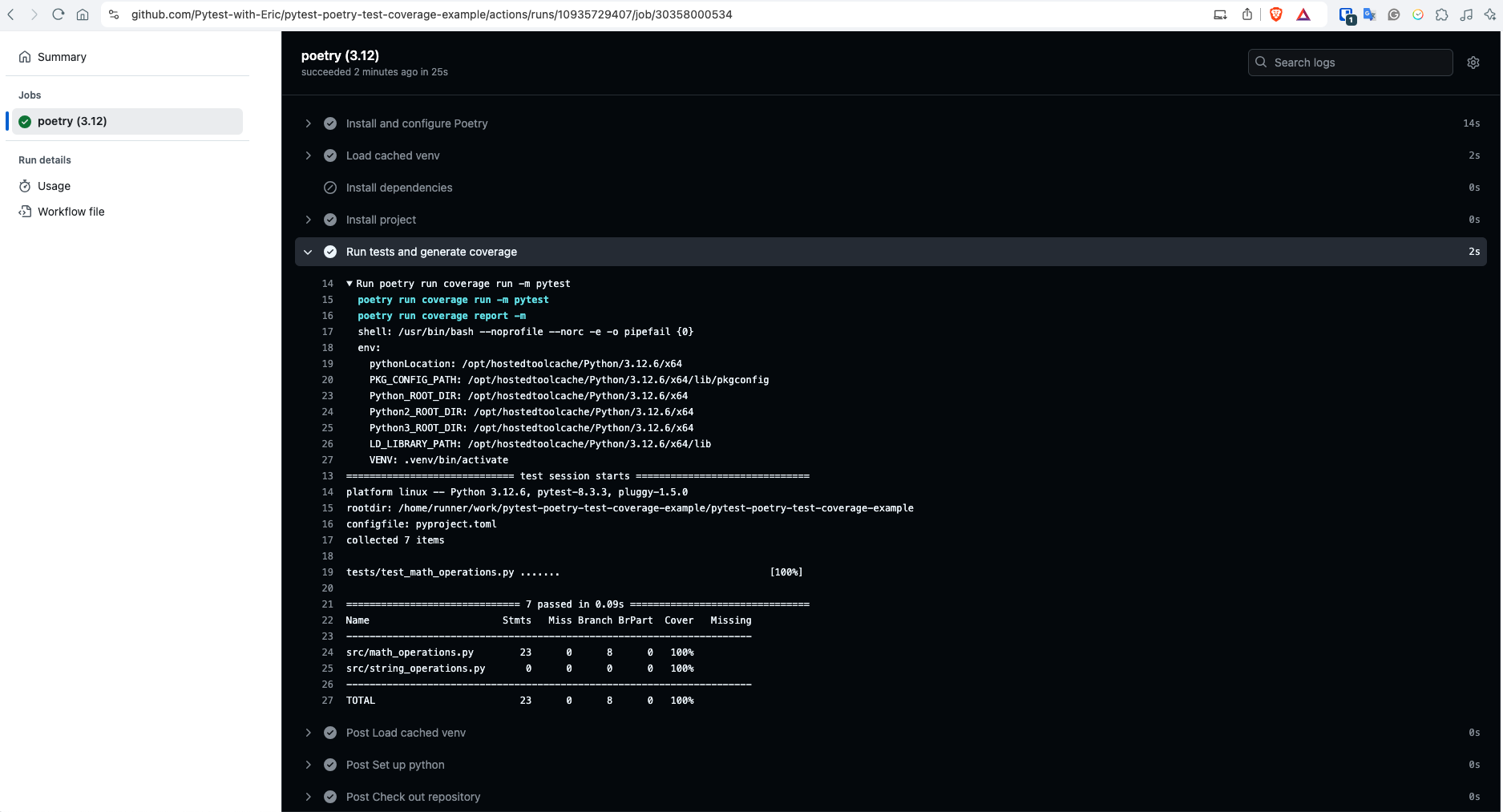

Generate Coverage Report via CI/CD (GitHub Actions)

Coverage reports are helpful, but you know what’s even more helpful?

Generating them automatically as part of your CI/CD pipeline.

You can do all sorts of complex automations but let’s see how to generate coverage reports automatically via GitHub Actions.

A simple workflow looks like this:

.github/workflows/run_test_coverage.yml

    name: Coverage CI

    on: [push, pull_request]

    jobs:
      poetry:
        runs-on: ubuntu-latest
        defaults:
          run:
            shell: bash
        strategy:
          max-parallel: 42 # specify the maximum number of jobs that can run concurrently
          fail-fast: false
          matrix:
            python-version: ["3.12"]
        steps:
          #----------------------------------------------
          # check-out repo and set-up python
          #----------------------------------------------
          - name: Check out repository
            uses: actions/checkout@v3
          - name: Set up python
            id: setup-python
            uses: actions/setup-python@v4
            with:
              python-version: ${{ matrix.python-version }}
          #----------------------------------------------
          # install & configure poetry
          #----------------------------------------------
          - name: Install and configure Poetry
            uses: snok/install-poetry@v1
            with:
              virtualenvs-create: true
              virtualenvs-in-project: true
          #----------------------------------------------
          # load cached venv if cache exists
          #----------------------------------------------
          - name: Load cached venv
            id: cached-poetry-dependencies
            uses: actions/cache@v3
            with:
              path: .venv
              key: venv-${{ runner.os }}-${{ steps.setup-python.outputs.python-version }}-${{ hashFiles('**/poetry.lock') }}
          #----------------------------------------------
          # install dependencies if cache does not exist
          #----------------------------------------------
          - name: Install dependencies
            if: steps.cached-poetry-dependencies.outputs.cache-hit != 'true'
            run: poetry install --verbose --no-interaction
          #----------------------------------------------
          # install your root project, if required
          #----------------------------------------------
          - name: Install project
            run: poetry install --verbose --no-interaction
          #----------------------------------------------
          # Test coverage
          #----------------------------------------------
          - name: Run tests and generate coverage
            run: |
              poetry run coverage run -m pytest
              poetry run coverage report -m

You could go further and upload the coverage report to Codecov, or host it as a static site in an S3 bucket or via GitHub Pages. The world is your oyster.

Showing Coverage % badges on GitHub

If you’re looking to generate one of those fancy green badges that show code coverage, I’d recommend a tool like Genbadge which is pretty awesome and easy to use.

You can generate cool badges for # of passing/failing tests, coverage %, and more.

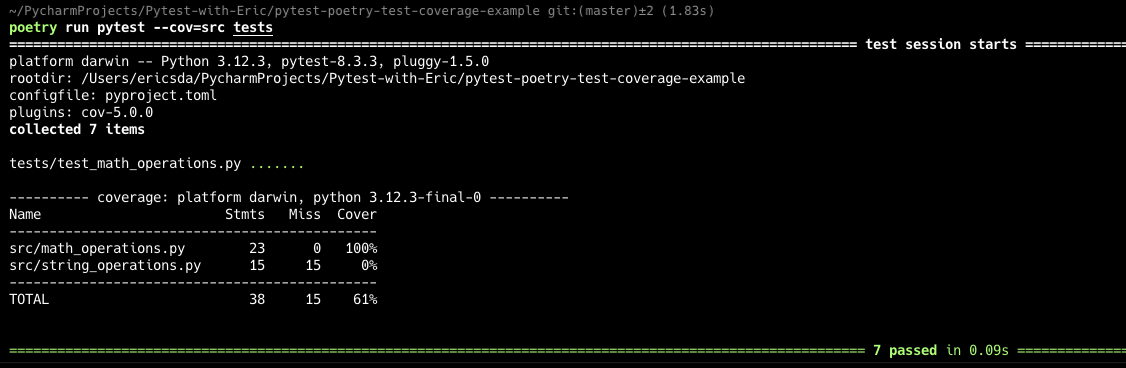

Plugins — Pytest-Cov

pytest-cov is a nice wrapper plugin that integrates the popular coverage.py tool directly into pytest, making it easier to measure test coverage.

While coverage.py requires manual configuration and separate commands, pytest-cov simplifies this by allowing you to use a single pytest command for both testing and coverage reporting.

Most if not all of the features of coverage.py are supported.

Some benefits include:

- Subprocess support: You can run code in a subprocess, which will still be included in the coverage report without any extra work.

- Xdist support: You can use pytest-xdist to run tests in parallel, and coverage will still work correctly. This is especially useful for projects that rely on multiprocessing, forking, or distributed test execution, ensuring all code paths are accounted for in the coverage report.

- Consistent pytest behavior: When using pytest-cov, the coverage results will be consistent with normal pytest runs, unlike using coverage run -m pytest, which may slightly change how the system paths are handled.

Here’s a quick start.

Install the pytest-cov package:

    poetry add -G dev pytest-cov

Comment out the coverage.py settings in pyproject.toml so they don’t interfere with pytest-cov.

Run:

    poetry run pytest --cov=src tests

You can also generate HTML reports or perform other tasks that the coverage.py library allows.

    poetry run pytest --cov=src tests --cov-report html

The report looks pretty much the same but the good thing is you don’t have to manage coverage.py settings separately.

You can simply manage all your Pytest config via the addopts parameter etc. in one Pytest config file.

Conclusion

I hope this article helped improve your knowledge of test coverage.

We first started with how to generate test coverage using Poetry as our dependency manager — with example code and the coverage.py package.

Then we looked at how to configure it: generating terminal or HTML reports, including or excluding code from coverage, line vs branch coverage, and even how to fail when coverage drops below a threshold.

Lastly, you learned how to generate coverage as part of a CI/CD pipeline (with GitHub Actions) and about a cool plugin — pytest-cov.

I highly recommend you play around with coverage — it will help you form your own opinion and decide whether it’s a useful tool for your team (or not).

If you have any ideas for improvement or would like me to cover any topics, please message me via Twitter, GitHub or Email.

Till the next time… Cheers!

Additional Reading

- Example Code GitHub Repo

- Coverage.py - Official Documentation

- Poetry - Official Documentation

- Pytest-Cov - Official Documentation

- Genbadge - Generate Badges for Your GitHub Repository

- Pytest Config Files - A Practical Guide To Good Config Management

- How to Use Hypothesis and Pytest for Robust Property-Based Testing in Python

- How To Run Pytest With Poetry (A Step-by-Step Guide)

- Parallel Testing Made Easy With pytest-xdist

- How To Use Pytest With Command Line Options (Easy To Follow Guide)

- How To Generate Beautiful & Comprehensive Pytest Code Coverage Reports (With Example)

- Demo of a Python Poetry project using the coverage tool

- FailedToFunction - Test Coverage with Pytest