A Beginner's Guide To `pytest_generate_tests` (Explained With 2 Examples)

As a software developer, you’re no stranger to the importance of effective testing strategies, especially Test-Driven Development (TDD).

As projects become complex, so do the testing requirements, leading to repetitive and time-consuming test case creation. Is there a way to simplify test generation?

Fortunately, a game-changing solution exists!

Enter `pytest_generate_tests`, a hook built into Pytest that can change the way you write parametrized tests.

This powerful tool allows you to streamline your testing efforts and eliminate redundant code while achieving comprehensive test coverage.

While parameterized testing can help produce tests with varying input data, it has its limitations. For example, it can be challenging to generate test cases with complex logic or extensive data manipulation.

This is where `pytest_generate_tests` steps in, offering a more flexible approach than static parameter markers alone.

In this guide, we’ll look closely at `pytest_generate_tests` and explore how it lets you parametrize tests beyond defining simple parameter markers.

You’ll learn about dynamic test generation and how `pytest_generate_tests` can be applied to solve common testing challenges.

Let’s dive right in!

What You’ll Learn

By the end of this tutorial, you will

- Learn how to set up a project to use `pytest_generate_tests`.
- Explore the basics of `pytest_generate_tests` and its role in Pytest.
- Discover real-world applications and use cases for dynamic test generation.
- Compare `pytest.mark.parametrize` and `pytest_generate_tests` to choose the right approach for your testing needs.
- Have the ability to make informed testing strategy choices.

Before diving into some code examples, let’s look at what parametrized testing is.

Parameterized Testing

Imagine effortlessly running the same tests with numerous input data sets, saving you precious time and ensuring that your code behaves consistently across various scenarios.

This wizardry is made possible through Pytest’s parameterized testing!

In fact, it’s like writing a single test and then cloning it to check every corner case of your code.

Pytest’s parameterized testing allows you to dynamically generate test cases based on a predefined set of input data.

This approach is especially useful when you need to test a function or method with multiple input values.

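An example may look like this:

    import pytest

    # Test Math Functions
    @pytest.mark.parametrize(
        "a, b, expected",
        [(1, 2, 3), (0, 0, 0), (-1, 1, 0)],  # illustrative values
    )
    def test_addition(a, b, expected):
        # addition is the function under test, assumed to be imported from your code
        assert addition(a, b) == expected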

In this case, a, b and expected are the parameters that are passed to the test function, taking on values from the list of tuples.

Let’s visit another fascinating topic, property-based testing.

What Is Property-Based Testing

Property-based testing is a complementary approach to traditional unit testing, where test cases are generated based on properties or constraints that the code should satisfy.

Example-based tests, including parametrized ones, only check the inputs you thought to write down. Hypothesis, a property-based testing library for Python, addresses this limitation by automatically generating test data based on specified strategies.

This approach opens the door to more exhaustive and creative testing, helping uncover obscure bugs that might otherwise remain hidden.

To dig deeper into the Pytest Hypothesis Library, give this article a read!
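To make the idea concrete, here is a hand-rolled sketch of a property check that uses only the standard library's `random` module. It is not Hypothesis itself, which adds much more (for example, shrinking failing inputs to a minimal case). The `add` function and the `check_property` helper are hypothetical names chosen for illustration.

```python
import random


def add(a, b):
    return a + b


def check_property(prop, runs=200, seed=0):
    """Call prop with random integer pairs; return the first failing pair, or None."""
    rng = random.Random(seed)  # a fixed seed keeps the run reproducible
    for _ in range(runs):
        a = rng.randint(-10_000, 10_000)
        b = rng.randint(-10_000, 10_000)
        if not prop(a, b):
            return (a, b)
    return None


def test_addition_is_commutative():
    # Property: the order of the operands must not matter.
    assert check_property(lambda a, b: add(a, b) == add(b, a)) is None


def test_zero_is_the_identity():
    # Property: adding zero leaves the other operand unchanged.
    assert check_property(lambda a, _b: add(a, 0) == a) is None
```

Instead of listing inputs and expected outputs, each test states a rule that must hold for every input the generator produces.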

Introduction to pytest_generate_tests

The pytest_generate_tests hook is a powerful feature provided by the Pytest testing framework that allows you to dynamically generate test cases.

This function allows you to programmatically create test cases based on a variety of conditions, inputs, or scenarios specific to your project’s requirements.

Unlike static parametrization, where test cases are predefined, pytest_generate_tests enables you to determine parameters on-the-fly, which is especially valuable when dealing with complex or evolving codebases.

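Pytest calls `pytest_generate_tests` once for each collected test function, giving you a chance to parametrize it. Defined in a test module, the hook applies to that module's tests; defined in a conftest.py file, it applies to every test in that directory and below.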

OK enough theory, now let’s look at some code.

Project Set Up

Getting Started

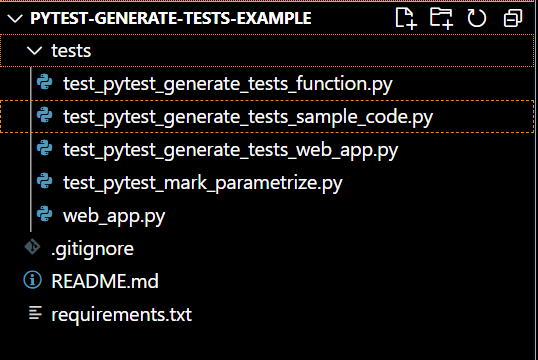

The project has the following structure:

Prerequisites

To achieve the above objectives, the following is recommended:

- Basic knowledge of the Python programming language

- Basics of Pytest and Parameterized Testing

To get started, clone the repo here, or you can create your own repo by creating a folder and running git init to initialise it.

In this project, we’ll be using Python 3.11.

Create a virtual environment and install the requirements (packages) using:

    pip install -r requirements.txt

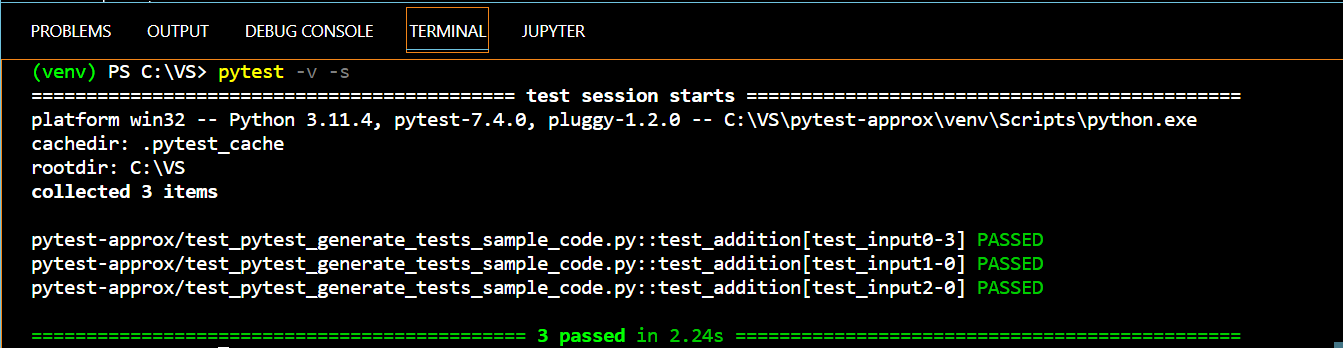

Example 1 - Pytest Generate Tests

To get a better understanding of pytest_generate_tests, let’s look at some example code.

tests/unit/test_pytest_generate_tests_sample_code.py

    # sample code to explain how pytest_generate_tests works
    import pytest


    def add(a, b):
        return a + b


    # Define the data for test case generation
    test_data = [
        ((1, 2), 3),  # Input: (1, 2) | Expected Output: 3
        ((0, 0), 0),  # Input: (0, 0) | Expected Output: 0
        ((-1, 1), 0),  # Input: (-1, 1) | Expected Output: 0
    ]


    # Define the pytest_generate_tests hook to generate test cases
    def pytest_generate_tests(metafunc):
        if 'test_input' in metafunc.fixturenames:
            # Generate test cases based on the test_data list
            metafunc.parametrize('test_input,expected_output', test_data)


    # Define the actual test function
    def test_addition(test_input, expected_output):
        result = add(*test_input)
        assert result == expected_output, f"Expected {expected_output}, but got {result}"

Explanation

Function Definition:

    def add(a, b):
        return a + b

This is a simple function named `add` that takes two arguments, `a` and `b`, and returns their sum.

Test Data:

    test_data = [
        ((1, 2), 3),
        ((0, 0), 0),
        ((-1, 1), 0),
    ]

This list, `test_data`, consists of pairs where the first element of each pair is a tuple of two numbers to be added and the second element is the expected sum of those numbers.

Generating Test Cases:

    def pytest_generate_tests(metafunc):
        if 'test_input' in metafunc.fixturenames:
            metafunc.parametrize('test_input,expected_output', test_data)

The `pytest_generate_tests` function is a special hook that pytest looks for. Pytest calls it during test collection, before any tests are executed, so that it can generate test cases.

If the name `test_input` is among the argument names a test function requests (`metafunc.fixturenames`), the hook tells pytest to generate test cases using the `test_data` list.

The `metafunc.parametrize()` method takes the names of the arguments to be filled (`test_input` and `expected_output` in this case) and the data to fill them with (`test_data`).

Test Function:

    def test_addition(test_input, expected_output):
        result = add(*test_input)
        assert result == expected_output, f"Expected {expected_output}, but got {result}"

This is the actual test function. When pytest runs:

- It runs this test function multiple times, once for each set of inputs and expected outputs in the `test_data` list.
- For each test case, it passes the inputs and expected output as arguments to the test function using the names `test_input` and `expected_output`.
- Inside the test function, `add` is called with the inputs, and its result is compared to the expected output. If they don’t match, the test fails, and the error message is displayed.

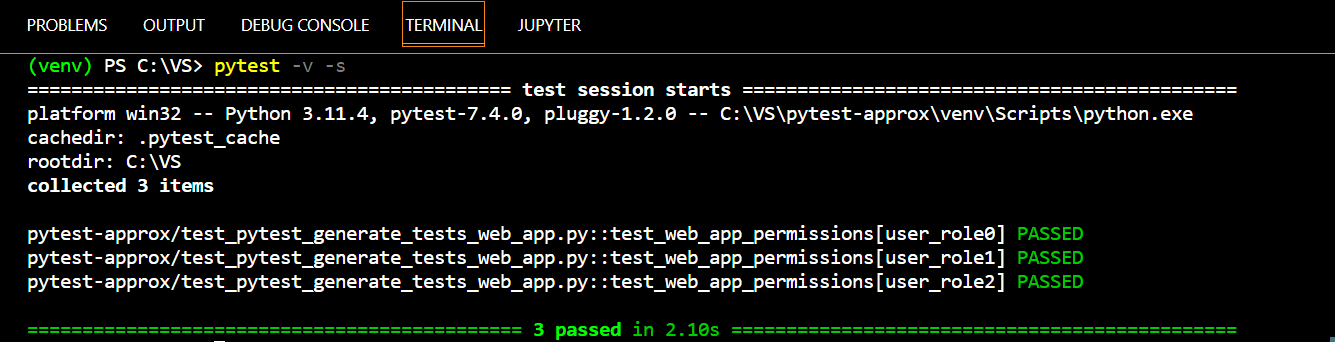
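Because the hook is ordinary Python, the test data does not have to be hard-coded. The sketch below is one possible variation of Example 1 that reads the same three cases from CSV text at collection time. The `CSV_CASES` string stands in for a file on disk, and the column names and the `load_cases` helper are assumptions made for this illustration.

```python
import csv
import io

# Stand-in for a CSV file on disk; the column names are hypothetical.
CSV_CASES = """a,b,expected
1,2,3
0,0,0
-1,1,0
"""


def add(a, b):
    return a + b


def load_cases(text):
    """Turn CSV rows into ((a, b), expected) pairs, the shape used by test_data."""
    rows = csv.DictReader(io.StringIO(text))
    return [((int(r["a"]), int(r["b"])), int(r["expected"])) for r in rows]


def pytest_generate_tests(metafunc):
    if "test_input" in metafunc.fixturenames:
        # The rows are read at collection time, before any test runs.
        metafunc.parametrize("test_input,expected_output", load_cases(CSV_CASES))


def test_addition(test_input, expected_output):
    assert add(*test_input) == expected_output
```

Adding a test case then means adding a row to the data, with no change to the test code.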

Example 2 - Pytest Generate Tests

Dynamic test case generation, made possible by hooks like `pytest_generate_tests`, finds valuable applications in various real-world scenarios.

One common use case is in the testing of software that handles data processing or transformation.

In web development, dynamic test generation can automate testing across multiple browsers and platforms, saving time and ensuring consistent functionality.

In essence, dynamic test generation serves as a versatile tool to streamline testing efforts, improve code coverage, and enhance software reliability across diverse application domains.
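The browser and platform case can be sketched as follows. `metafunc.parametrize` may be called more than once on different argument names, and each call multiplies the cases produced by the earlier ones. The browser list, the platform list, and the `build_user_agent` function are hypothetical stand-ins for whatever your project actually targets.

```python
# Hypothetical targets; replace with the browsers and platforms you support.
BROWSERS = ["chrome", "firefox", "safari"]
PLATFORMS = ["linux", "macos", "windows"]


def pytest_generate_tests(metafunc):
    # Each parametrize call multiplies the cases from earlier calls,
    # so a test requesting both names runs 3 x 3 = 9 times.
    if "browser" in metafunc.fixturenames:
        metafunc.parametrize("browser", BROWSERS)
    if "os_name" in metafunc.fixturenames:
        metafunc.parametrize("os_name", PLATFORMS)


def build_user_agent(browser, os_name):
    """Hypothetical function under test."""
    return f"{browser}/{os_name}"


def test_user_agent_mentions_both(browser, os_name):
    agent = build_user_agent(browser, os_name)
    assert browser in agent and os_name in agent
```

A test that requests only `browser` would run three times, while one that requests neither name is left untouched.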

Imagine you are a web developer and want to thoroughly test the web application’s behaviour for different user roles without writing individual test cases for each role.

There are 3 user roles: editor, viewer, and admin, and each has different access rights.

tests/unit/web_app.py

    class WebApp:
        def __init__(self, user_role):
            self.user_role = user_role

        def can_edit_content(self):
            return self.user_role in ('admin', 'editor')

        def can_view_content(self):
            return True

The WebApp class is designed to represent a web application and provides methods for checking whether a user with a specific user_role can edit content or view content.

The can_edit_content method restricts editing to users with admin or editor roles, while the can_view_content method allows all users to view content.

tests/unit/test_pytest_generate_tests_web_app.py

    import pytest
    from web_app import WebApp

    # Define user roles and expected permissions
    user_roles = [
        ('admin', True, True),
        ('editor', True, True),
        ('viewer', False, True),
    ]


    # Define the pytest_generate_tests hook to generate test cases
    def pytest_generate_tests(metafunc):
        if 'user_role' in metafunc.fixturenames:
            # Generate test cases based on the user_roles list
            metafunc.parametrize('user_role', user_roles)


    # Test function that uses the dynamically generated test cases
    def test_web_app_permissions(user_role):
        user_role, can_edit, can_view = user_role  # Unpack the fixture value
        app = WebApp(user_role)
        assert app.can_edit_content() == can_edit, f"Unexpected edit permission for {user_role}"
        assert app.can_view_content() == can_view, f"Unexpected view permission for {user_role}"

We define the pytest_generate_tests hook, which is responsible for generating the test cases dynamically.

We check whether the test function requests an argument named `user_role` (which it does in our case), and if so, we use `metafunc.parametrize` to generate test cases based on the `user_roles` list.

This means that for each user role defined in the list, a test case will be generated with the corresponding values.

You may be wondering why `user_role` is never defined with the `@pytest.fixture` decorator. It doesn’t need to be: when the hook calls `metafunc.parametrize`, pytest supplies the `user_role` argument directly from the parametrization.

This approach allows you to dynamically generate and provide values for the user_role fixture without needing a separate fixture function.
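The test above receives each tuple as a single value and unpacks it by hand. As an alternative sketch, you can name all three values in the `metafunc.parametrize` call and let pytest do the unpacking. The `WebApp` class is repeated here only so the snippet is self-contained.

```python
class WebApp:
    """Same behaviour as the WebApp class in web_app.py."""

    def __init__(self, user_role):
        self.user_role = user_role

    def can_edit_content(self):
        return self.user_role in ("admin", "editor")

    def can_view_content(self):
        return True


# (role, expected edit permission, expected view permission)
user_roles = [
    ("admin", True, True),
    ("editor", True, True),
    ("viewer", False, True),
]


def pytest_generate_tests(metafunc):
    if "user_role" in metafunc.fixturenames:
        # Three argument names, so each tuple is spread across three arguments.
        metafunc.parametrize("user_role,can_edit,can_view", user_roles)


def test_web_app_permissions(user_role, can_edit, can_view):
    app = WebApp(user_role)
    assert app.can_edit_content() == can_edit, f"Unexpected edit permission for {user_role}"
    assert app.can_view_content() == can_view, f"Unexpected view permission for {user_role}"
```

Which form you prefer is largely a matter of taste; separate arguments make the test signature say exactly what each case contains.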

Comparing pytest.mark.parametrize and pytest_generate_tests

Using pytest.mark.parametrize:

It’s the go-to choice when you have one test function and a set list of inputs.

For instance, if you’re testing a factorial function for just three numbers, you’d use pytest.mark.parametrize. It directly ties specific inputs to your test, making it straightforward and clean.

Using pytest_generate_tests:

This is your pick for more dynamic or complex test setups.

Imagine needing to test various routes to find the shortest path to a bakery. You’d use an algorithm, maybe Dijkstra’s, to determine this.

To test the algorithm’s accuracy across numerous routes and distances, you’d set up pytest_generate_tests. It lets you generate a multitude of test cases, each representing different routes and their respective lengths.

In short, for simple, fixed inputs, use pytest.mark.parametrize. For dynamic or intricate setups, pytest_generate_tests is the way to go.
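Here is a rough sketch of the bakery scenario. The road map, the node names, and both functions are hypothetical; the point is that the test cases are computed from the map rather than typed out by hand. Every ordered pair of places becomes one test, and each test compares Dijkstra's result with a slow brute-force answer.

```python
import heapq
import itertools

# Hypothetical road map: place -> {neighbour: distance}
GRAPH = {
    "home": {"park": 2, "school": 5},
    "park": {"home": 2, "school": 1, "bakery": 6},
    "school": {"home": 5, "park": 1, "bakery": 2},
    "bakery": {"park": 6, "school": 2},
}


def shortest_distance(graph, start, goal):
    """Dijkstra's algorithm; returns the length of the shortest path."""
    queue = [(0, start)]
    best = {start: 0}
    while queue:
        dist, node = heapq.heappop(queue)
        if node == goal:
            return dist
        if dist > best.get(node, float("inf")):
            continue  # stale queue entry
        for neighbour, weight in graph[node].items():
            candidate = dist + weight
            if candidate < best.get(neighbour, float("inf")):
                best[neighbour] = candidate
                heapq.heappush(queue, (candidate, neighbour))
    return float("inf")


def brute_force_distance(graph, start, goal):
    """Reference answer: try every simple path through the other places."""
    others = [n for n in graph if n not in (start, goal)]
    best = float("inf")
    for r in range(len(others) + 1):
        for middle in itertools.permutations(others, r):
            path = (start, *middle, goal)
            steps = list(zip(path, path[1:]))
            if all(b in graph[a] for a, b in steps):
                best = min(best, sum(graph[a][b] for a, b in steps))
    return best


def pytest_generate_tests(metafunc):
    if {"start", "goal"} <= set(metafunc.fixturenames):
        # One test per ordered pair of places, derived from the map itself.
        pairs = list(itertools.permutations(GRAPH, 2))
        metafunc.parametrize("start,goal", pairs, ids=[f"{a}->{b}" for a, b in pairs])


def test_shortest_distance(start, goal):
    assert shortest_distance(GRAPH, start, goal) == brute_force_distance(GRAPH, start, goal)
```

Adding a place to `GRAPH` automatically adds the corresponding test cases, which is hard to express with a fixed `pytest.mark.parametrize` list.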

Understanding Pytest Hooks & Fixtures

In Pytest, “hooks” are special functions or methods that let you modify or extend the testing process. These hooks provide a way to customize Pytest’s behavior, from setting up fixtures to defining custom markers, or executing actions before and after tests.

pytest_generate_tests is one such hook that allows you to dynamically generate test cases.

Power of Fixtures in Pytest

Fixtures are among the standout features in Pytest. They enable you to prepare and supply the necessary data or resources for your tests. When combined with pytest_generate_tests, you can craft and tailor test cases based on the fixtures you’ve set up.

How do you use fixtures? They’re functions marked with @pytest.fixture, which can then be passed into your test functions as parameters.

For a deep dive into fixtures, check out this comprehensive guide on the topic.
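As a small sketch of fixtures and generated parameters working side by side, the test below requests pytest's built-in `tmp_path` fixture together with a `filename` argument that the hook generates. The file names and the `save_text` function are hypothetical. Note that the hook runs at collection time, so it cannot see the value `tmp_path` will have; it only supplies `filename`.

```python
# Hypothetical file names to try; only 'filename' is generated by the hook.
FILENAMES = ["report.txt", "data.csv", "notes.md"]


def pytest_generate_tests(metafunc):
    if "filename" in metafunc.fixturenames:
        metafunc.parametrize("filename", FILENAMES)


def save_text(directory, filename, text):
    """Hypothetical function under test: write text and return the file path."""
    path = directory / filename
    path.write_text(text)
    return path


def test_save_text(tmp_path, filename):
    # tmp_path is pytest's built-in temporary-directory fixture (a pathlib.Path);
    # filename comes from pytest_generate_tests.
    path = save_text(tmp_path, filename, "hello")
    assert path.name == filename
    assert path.read_text() == "hello"
```

Pytest resolves `tmp_path` as a regular fixture for every generated case, so each of the three tests gets its own temporary directory.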

Performance Consideration

When deciding between parametrized tests defined with the `@pytest.mark.parametrize` decorator and dynamic test generation with `pytest_generate_tests`, several factors come into play, including speed and efficiency.

Defining parameterized tests may be simpler and sufficient for smaller test suites or straightforward scenarios.

However, as the size of your test suite grows, dynamically generating tests can enhance maintainability and scalability.

There is no one-size-fits-all answer: both approaches have their strengths and trade-offs, so consider the complexity of your test cases before choosing one.

To make an informed choice, assessing the performance impact of dynamic test generation is crucial, especially in larger projects.

The pytest-benchmark plugin is a helpful tool for comparing execution times between the two approaches.

Learn how to use Pytest Benchmark to gauge your code’s correctness and efficiency.

Ultimately, your decision should balance clarity, maintainability, and performance based on your project’s specific requirements.

Limitations of pytest_generate_tests

While pytest_generate_tests is a powerful feature for dynamic test case generation in pytest, it does have some limitations:

For example, when generating the parameters itself requires complex logic or extensive data manipulation, the hook can become hard to read and maintain.

It works best when the parametrization logic stays straightforward.

Moreover, the hook runs at collection time, before any fixture is set up, so generated parameters cannot depend on fixture values. Handling tests that mix generated parameters with other fixtures can be complex in some cases.

Every programmer’s worst nightmare is when they can’t debug a piece of code.

While using pytest_generate_tests, this nightmare may come true as debugging dynamically generated tests can be trickier, especially when dealing with a large number of test cases.

Identifying the source of a failure may require extra effort.

Depending on your specific requirements, you may therefore need to combine dynamically generated tests with explicitly defined test functions.
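One way to make failures in generated tests easier to trace is to give every case a readable ID through the `ids` argument of `metafunc.parametrize`. The sketch below reuses the data from Example 1; the `case_id` helper is a hypothetical name introduced for this illustration.

```python
def add(a, b):
    return a + b


test_data = [
    ((1, 2), 3),
    ((0, 0), 0),
    ((-1, 1), 0),
]


def case_id(case):
    """Build a label such as '1+2=3' from one ((a, b), expected) entry."""
    (a, b), expected = case
    return f"{a}+{b}={expected}"


def pytest_generate_tests(metafunc):
    if "test_input" in metafunc.fixturenames:
        metafunc.parametrize(
            "test_input,expected_output",
            test_data,
            ids=[case_id(case) for case in test_data],  # one ID per case
        )


def test_addition(test_input, expected_output):
    assert add(*test_input) == expected_output
```

With these IDs, a failing case shows up in the report under a name like `test_addition[1+2=3]`, which points straight at the data that caused it.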

Conclusion and Learnings

OK, that’s a wrap.

In this article, we’ve covered the basics of pytest_generate_tests and its role in Pytest using 2 examples.

We also compared it to the commonly used basic Pytest parameterization technique and how to decide which one to use.

Now you know that pytest_generate_tests is a remarkable tool for automating and simplifying test case generation in the pytest framework.

By harnessing its capabilities, you can streamline testing processes, reduce redundancy, and achieve comprehensive test coverage.

However, success with dynamic test generation also depends on adopting best practices, such as organizing test data, providing clear documentation, and maintaining a balance between automation and maintainability.

Ultimately, pytest_generate_tests empowers you to create more efficient and effective test suites while maintaining code quality.

If you have ideas for improvement or would like me to cover anything specific, please send me a message via Twitter, GitHub or Email.

Till the next time… Cheers!

Additional Learning

Link To Code

- https://medium.com/opsops/deepdive-into-pytest-parametrization-cb21665c05b9
- https://pytest-with-eric.com/introduction/pytest-parameterized-tests/#Getting-Started
- https://stackoverflow.com/questions/4923836/generating-py-test-tests-in-python
- https://docs.pytest.org/en/latest/how-to/parametrize.html#pytest-generate-tests