13 Proven Ways To Improve Test Runtime With Pytest

Imagine this: you’ve just joined a company, and your first task is to optimise the existing test suite and improve the CI/CD run time.

Or maybe you’re a veteran Python developer and, thanks to your success, your test suite has grown substantially to 1,000 or even 2,000 tests.

Now it’s getting out of control: each run takes 5 minutes or more, and your CI/CD pipelines cost a small fortune.

You’re testing backend APIs, data ETL processes, user authentication, disk writes and so on.

How do you optimize test run time? Where do you start?

Well, in this article you’ll learn 13 (or maybe 14) practical strategies to improve the runtime of your Pytest suite.

No matter how large or small your test suite, and whether you’re a Pytest expert or a novice, you’ll learn tips you can implement today.

I’ve also included a bonus tip from the folks over at Discord on how they were able to reduce the median test duration from 20 seconds down to 2 seconds! 😄

Whether it’s API testing, integration tests with an in-memory database, or data transformation tests, these strategies will help you tackle any slowdown and set a foundation for efficient testing.

You’ll learn how to identify slow tests, profile them, fix bottlenecks, and put in place best practices.

All this while leveraging the power of Pytest features and plugins to your advantage.

Are you ready? Let’s begin.

What You’ll Learn

In this article, you’ll learn:

- How to identify slow-running tests in your test suite
- How to improve the runtime of slow-running tests
- Best practices to leverage Pytest features: fixtures, parametrization and the pytest-xdist plugin for running tests in parallel
- How to efficiently handle the setup and teardown of databases
- Strategies to benchmark and maintain a large, growing test suite

Why Should You Worry about Slow Tests?

Let’s ask an important question: why should you worry or care about slow tests?

Is this even a problem? Can’t you just let the tests run for as long as it takes and go do something else?

Well, the answer is no, and here’s why.

Slow Feedback Loop

As developers practising test-driven development (TDD), we run tests constantly while writing code.

A slow test suite is incredibly frustrating: it lengthens the feedback loop, makes you less agile and leaves room for distractions.

While it’s true you can run a single test in Pytest, you also want to make sure your functionality hasn’t broken anything else.

This is also called Regression Testing.

Slow tests delay this feedback, making it harder for you to rapidly identify and fix issues.

Fast tests, on the other hand, enable a smoother and more efficient development cycle, allowing you to iterate quickly.

Continuous Integration Efficiency

Slow-running CI Pipelines can be a headache to deal with.

Some pipelines are set up to run on every Git commit, so slow tests make every round of feedback painfully slow.

This is especially frustrating when you need to push commits iteratively to fix a stubborn test that passes locally but fails on the CI server.

Fast running tests are a blessing.

Cost Implications

Slow tests also have cost implications beyond developer productivity, particularly in the cloud where resources are billed by usage.

Faster tests mean less resource consumption and, therefore, lower operational costs.

Test Reliability and Maintenance

Tests are often slow because they are doing too much or are poorly optimized.

This can lead to reliability issues, where tests fail intermittently or have flaky behaviour.

Ensuring tests run quickly goes hand in hand with making them more reliable and easier to maintain.

Hopefully you’re now convinced this is a problem worth addressing, so let’s move on to identifying the problem tests.

Identify The Problem Tests

What is the exact problem we’re trying to solve here?

Fix slow tests? Cool.

But how do you know which tests are slow? Are you sure those tests are responsible for the slowdown?

Measure — Before doing any optimization, I strongly advise you to measure test collection and execution time.

After all, you cannot improve what you don’t measure.

Optimize the right tests — While it’s tempting to optimise algorithms using tools like Pytest Benchmark, you need to understand where the low-hanging fruit is.

Which tests can you easily make faster? After all, you don’t have time to rewrite every single test.

Profiling Tests

In a large test suite, it’s not a good idea to try and optimize everything at once.

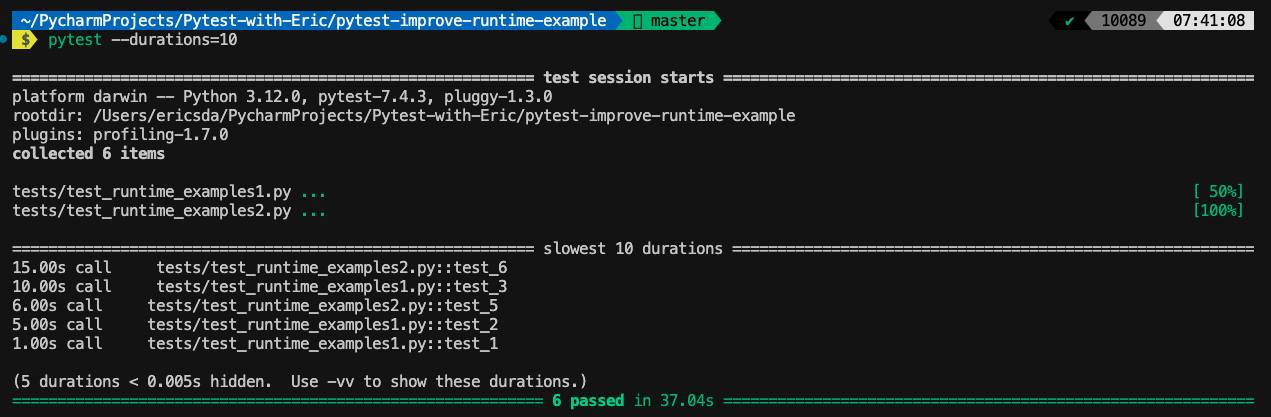

You can first identify the slow-running tests using the --durations=x flag.

To get a list of the 10 slowest tests that took longer than 1.0s:

```bash
pytest --durations=10 --durations-min=1.0
```

By default, Pytest will not show test durations that are too small (<0.005s) unless -vv is passed on the command line.

To quickly demo this, I’ve set up a simple project.

We have a simple repo:

```
.
├── .gitignore
├── README.md
├── requirements.txt
└── tests
    ├── test_runtime_examples1.py
    └── test_runtime_examples2.py
```

In the tests folder, we have some basic tests and I’ve stuck a timer in there to simulate slow-running tests.

tests/test_runtime_examples1.py

```python
import time


def test_1():
    time.sleep(1)
    assert True


def test_2():
    time.sleep(5)
    assert True


def test_3():
    time.sleep(10)
    assert True
```

The other file, test_runtime_examples2.py, is similar.

Let’s run the command:

```bash
pytest --durations=10
```

We can see we have a nice report showing which tests took the longest time.

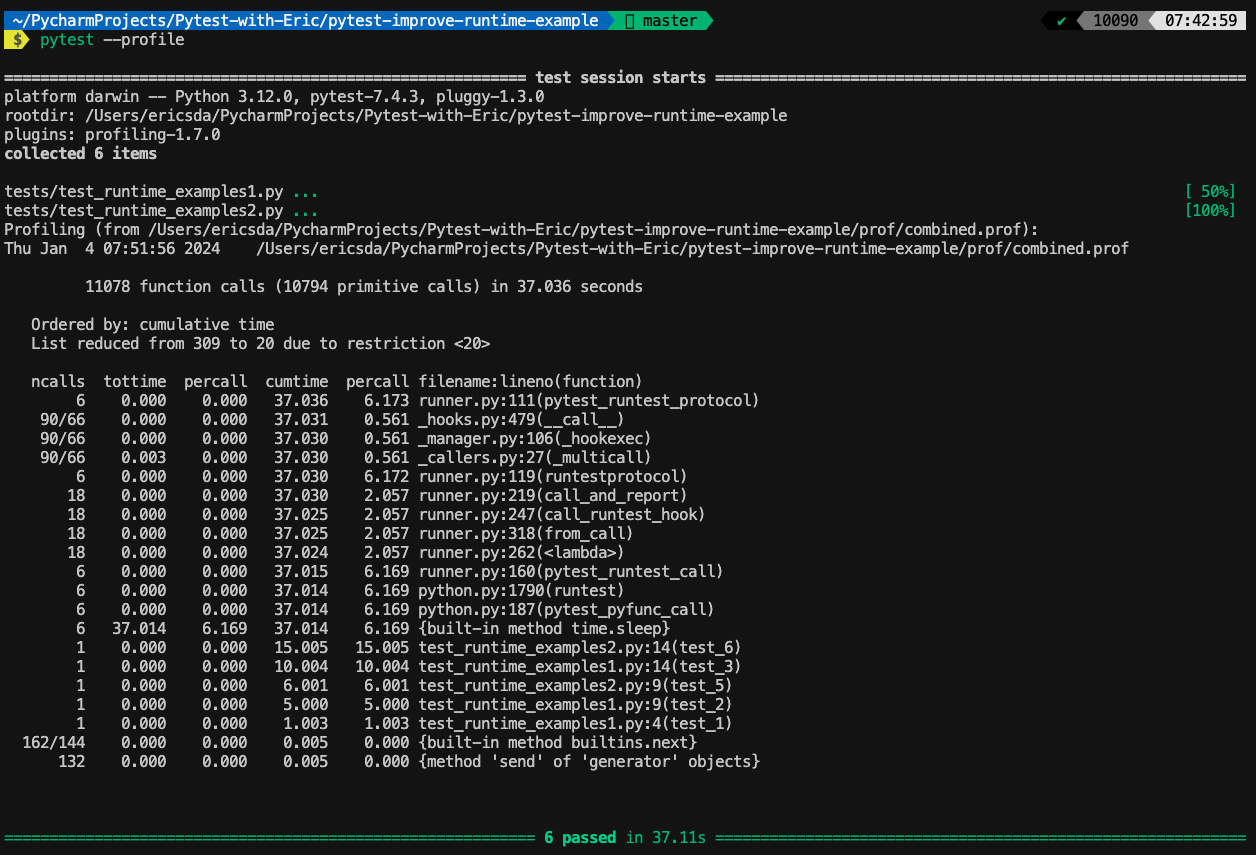

Next, you can also use the pytest-profiling plugin to generate tabular and heat graphs.

After you install the plugin, you can run Pytest with --profile or --profile-svg flags to generate a profiling report.

You can learn more about how to interpret these reports in our article on the 8 Useful Pytest Plugins.

Understanding Test Collection

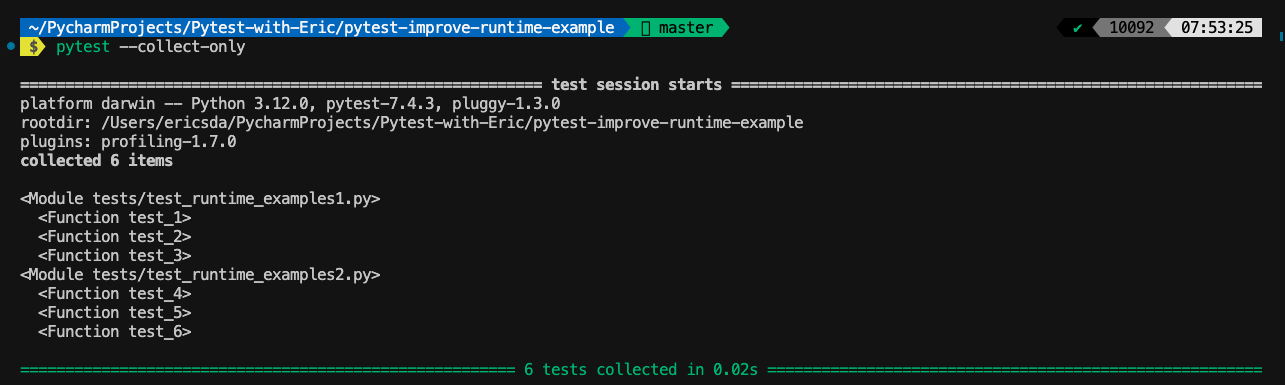

Alongside profiling, it’s also useful to understand how much time Pytest spends collecting your tests.

Thankfully, you can easily do this with the --collect-only flag. It only shows you what’s collected and doesn’t run any tests.

You can narrow down by directory level and do more as specified in the docs.

You may not notice long collection times with small test suites, but with larger ones involving thousands of tests, collection can easily take 30 seconds or more.

By the way, if you’re experiencing the Pytest Collected 0 Items error, we have a detailed guide on how to solve it here.

This article from Hugo Martins on the improvements to the Pytest Collection feature is also an interesting read.

Solutions Below

Now that you’ve identified the slow-running tests in your suite, it’s time to roll up your sleeves and take action.

Optimize The Test Collection Phase

In large projects, Pytest may take considerable time just to collect tests before even running them.

This collection phase involves identifying which files contain tests and which functions or methods are test cases.

By optimizing this, you can reduce the startup time of your test runs.

Keyword Expressions:

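Use -k to run only tests whose names match a given expression. For example, to run only the tests in a specific class:

```bash
pytest -k TestMyClass
```

Note that -k still collects every test and then deselects the ones that don’t match, so it saves execution time rather than collection time. Passing specific paths, as below, narrows the collection itself.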

File Name Patterns:

Specify particular test files or directories:

```bash
pytest tests/specific_directory/
```

Define testpaths in the Pytest Config File:

You can tell Pytest to look at the tests directory to collect unit tests.

This can shave off some time because Pytest doesn’t need to scan your source code and any doctests you may have. Note that testpaths is only used when you run pytest from the root directory without passing specific files or directories on the command line.

You can combine testpaths with other options like python_files to further refine how tests are collected:

```ini
# pytest.ini
[pytest]
testpaths = tests/unit tests/integration
python_files = test_*.py
```

By setting this config in pytest.ini, you gain more control over which tests are executed, keeping your testing workflow efficient.

If you’ve never used pytest.ini or are unfamiliar with Pytest config in general, here’s a good starting point.

Avoid External Calls (Use Mocking Instead)

An incredible way to speed up your tests is to mock external dependencies.

Let’s say your tests connect to a real database or get data from an external REST API.

Mocking these out significantly reduces test overhead.

By mocking (simulating) the behaviour of time-consuming external systems, such as databases or APIs, you can focus on the functionality you’re testing without the overhead of real system interactions.

This leads to faster, more efficient test execution.

A simple example can be found in the pytest-mock documentation:

```python
import os


class UnixFS:
    @staticmethod
    def rm(filename):
        os.remove(filename)


def test_unix_fs(mocker):
    # mocker is provided by the pytest-mock plugin
    mocker.patch('os.remove')
    UnixFS.rm('file')
    os.remove.assert_called_once_with('file')
```

The UnixFS class contains a static method rm that removes a file using the os.remove function from Python’s standard library.

In the test function test_unix_fs, the mocker.patch method from the pytest-mock plugin is used to replace the os.remove function with a mock.

This mock prevents the actual deletion of a file during the test and allows for the verification of the os.remove call.

This test ensures that UnixFS.rm interacts with the file system as expected, without affecting real files during testing, while being significantly faster than the real thing.
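The mocker fixture is a thin wrapper around the standard library’s unittest.mock, so the same idea applies to slow REST API calls. The sketch below assumes a hypothetical fetch_username function and a made-up api.example.com endpoint; it patches urllib.request.urlopen with unittest.mock.patch directly so it runs without any plugin. With pytest-mock installed, mocker.patch("urllib.request.urlopen", return_value=fake_response) plays the same role inside a test.

```python
import json
import urllib.request
from unittest import mock


def fetch_username(user_id):
    """Hypothetical function under test: looks up a user via a slow external REST API."""
    url = f"https://api.example.com/users/{user_id}"  # hypothetical endpoint
    with urllib.request.urlopen(url, timeout=10) as response:
        return json.load(response)["name"]


def test_fetch_username_without_network():
    # A stand-in for the HTTP response; MagicMock supports the "with" protocol.
    fake_response = mock.MagicMock()
    fake_response.__enter__.return_value = fake_response
    fake_response.read.return_value = b'{"name": "Ada"}'

    # Patch the attribute looked up at call time, so no real request is made.
    with mock.patch("urllib.request.urlopen", return_value=fake_response) as fake_urlopen:
        assert fetch_username(42) == "Ada"

    fake_urlopen.assert_called_once_with("https://api.example.com/users/42", timeout=10)
```

The test finishes in microseconds and never depends on the remote service being up.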

Run Tests In Parallel (Use pytest-xdist)

Another tool in your arsenal is Pytest’s parallel testing capability using the pytest-xdist plugin.

By distributing tests across multiple CPUs or even separate machines, pytest-xdist drastically cuts down the overall runtime of test suites.

This is especially beneficial for large codebases or projects with extensive test coverage.

With pytest-xdist, your tests no longer run sequentially; they run in parallel, utilising resources more effectively and providing faster feedback.

We wrote a whole post on using the pytest-xdist plugin to run tests in parallel so definitely check it out.

Use Setup Teardown Fixtures For Databases

A big optimisation becomes available once you learn how to use Pytest’s setup and teardown mechanism.

This functionality allows you to execute setup and teardown code at the start or end of a test function, class, module or the entire test session.

Fixtures can be controlled using the scope parameter explained at length in this article.

A lot of applications use databases for I/O purposes.

If you’re not mocking, you’ll need to set up and tear down the database for each test.

Some apps may require 50–60 or even more tables. Imagine the overhead.

Nothing stops you from using an in-memory database (like SQLite) and recreating the tables for each test. However, this is super inefficient.

It’s way more efficient to create the database and tables once per test session and just truncate them before each test to start with a fresh slate.

Below is a simplified example using FastAPI and SQLModel.

Database Engine Fixture (Session Scoped)

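This fixture creates the database engine, and it’s set to session scope to ensure it runs only once per test session.

```python
import pytest
from sqlalchemy.pool import StaticPool
from sqlmodel import SQLModel, create_engine

from your_application import get_app  # Import your FastAPI app creation function


@pytest.fixture(scope="session")
def db_engine():
    # Replace with your database URL. For in-memory SQLite, StaticPool and
    # check_same_thread=False make every connection (including those opened by
    # FastAPI's TestClient from another thread) share the same database.
    engine = create_engine(
        'sqlite:///:memory:',
        connect_args={"check_same_thread": False},
        poolclass=StaticPool,
        echo=True,
    )
    # Your table models must be imported before this so they are registered
    SQLModel.metadata.create_all(engine)  # Create all tables once
    yield engine
    engine.dispose()  # Release connections at the end of the session
```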

Application Fixture (Function Scoped)

This fixture initializes the FastAPI app for each test function, using the db_engine fixture.

```python
@pytest.fixture
def app(db_engine):
    # Modify your FastAPI app creation to accept an engine, if needed
    app = get_app(db_engine)
    yield app
    # Teardown logic, if needed
```

Table Clearing Fixture (Function Scoped, Auto-Use)

This fixture clears the database tables before each test. It runs automatically due to autouse=True.

```python
@pytest.fixture(autouse=True)
def clear_tables(db_engine):
    # Clear the tables before the test starts. engine.begin() commits on exit,
    # which SQLAlchemy 2.x requires for the DELETEs to persist.
    with db_engine.begin() as connection:
        # Delete child tables first so foreign-key constraints are not violated
        for table in reversed(SQLModel.metadata.sorted_tables):
            connection.execute(table.delete())
    yield  # Now yield control to the test
```

The code above shows how you can combine a session-scoped db_engine fixture with a function-scoped, autouse clear_tables fixture to create the tables once and empty them before each test.

This means we don’t have to start the database engine and create tables for each test, saving precious time.
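To see the create-once, empty-per-test pattern without FastAPI or SQLModel, here is a minimal sketch using only the standard library’s sqlite3 module. The two-table schema and the function names create_database and empty_all_tables are hypothetical. SQLite has no TRUNCATE statement, so the sketch uses DELETE without a WHERE clause.

```python
import sqlite3

# Hypothetical two-table schema, used only for this illustration.
SCHEMA = """
CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    user_id INTEGER NOT NULL REFERENCES users(id),
    total REAL NOT NULL
);
"""

# Child tables come first so rows are deleted before the rows they reference.
TABLES_IN_DELETE_ORDER = ["orders", "users"]


def create_database():
    """Expensive step, run once per session: open the connection and build the schema."""
    conn = sqlite3.connect(":memory:")
    conn.execute("PRAGMA foreign_keys = ON")
    conn.executescript(SCHEMA)
    return conn


def empty_all_tables(conn):
    """Cheap step, run before every test: delete all rows but keep the tables."""
    with conn:  # commits on success, rolls back if a DELETE fails
        for table in TABLES_IN_DELETE_ORDER:
            conn.execute(f"DELETE FROM {table}")
```

In a pytest suite, create_database would back a session-scoped fixture and empty_all_tables an autouse, function-scoped one, mirroring the SQLModel version.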

Do More With Less (Parametrized Testing)

A cool feature of Pytest is parametrized testing, which allows you to test multiple scenarios with a single test function.

By feeding different parameters into the same test logic, you can extensively cover various cases without writing separate tests for each.

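This not only reduces code duplication but also allows you to do more with less.

```python
import pytest


# Illustrative values: each (input, expected_output) tuple becomes a separate test case
@pytest.mark.parametrize("input, expected_output", [(1, 2), (2, 3), (3, 4)])
def test_increment(input, expected_output):
    assert input + 1 == expected_output
```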

In this example, the test_increment function is executed three times with different input and expected_output values, testing the increment operation comprehensively with minimal code.

Run Tests Selectively

A somewhat obvious but often overlooked improvement to run your suite faster is to run tests selectively.

This involves running only the subset of tests relevant to the changes in each commit, which can significantly speed up feedback.

```bash
# Command to run tests in a specific file
pytest tests/specific_feature_test.py

# Command to run a particular test class or function
pytest tests/test_module.py::TestClass
pytest tests/test_module.py::test_function
```

You can also set up pre-commit hooks that run the tests for the changed files as part of each Git commit.

Use Markers To Prioritise Tests

Running tests that are more likely to fail or that cover critical functionality first can provide faster overall feedback.

A neat way is to organize tests in a way that prioritizes key functionalities.

You can leverage Pytest markers to group tests by your own criteria, e.g. fast, slow, backend, API, database, UI, data, smoke, recursion and so on, and then run the most important groups first (for example, pytest -m smoke before the full suite).
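Markers on their own only label tests; they don’t change the order Pytest runs them in. One way to run the critical groups first is a pytest_collection_modifyitems hook in conftest.py that sorts the collected items. This is a minimal sketch: the marker names and their priority order are assumptions for illustration.

```python
# conftest.py (sketch): run higher-priority marker groups first.

# Hypothetical marker names, from highest to lowest priority.
MARKER_PRIORITY = ["smoke", "api", "database", "slow"]


def pytest_configure(config):
    # Register the markers so pytest doesn't warn about unknown marks.
    for name in MARKER_PRIORITY:
        config.addinivalue_line("markers", f"{name}: tests in the {name} group")


def _priority(item):
    """Rank of the first matching marker; unmarked tests run just before 'slow' ones."""
    for rank, name in enumerate(MARKER_PRIORITY):
        if item.get_closest_marker(name) is not None:
            return rank
    return MARKER_PRIORITY.index("slow") - 0.5


def pytest_collection_modifyitems(session, config, items):
    # Sort in place; list.sort is stable, so the original order is kept within a group.
    items.sort(key=_priority)
```

Because the sort is stable, tests within the same group still run in their collected order.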

Efficient Fixture Management

A fast and easy win, though one that must be carefully considered and implemented, is using Pytest fixtures with the correct scope.

We briefly touched on Pytest fixture scopes in our point on using setup teardown for databases including the autouse=True parameter.

Read more about fixture autouse here.

Proper use of fixtures, especially those with scope=session or scope=module, can prevent repeated setup and teardown.

Analyze your fixtures and refactor them for optimal reuse using the best scope.

Avoid Sleep or Delay in Tests

Using sleep in tests can significantly increase test time unnecessarily.

Replace sleep with more efficient waiting mechanisms, like polling for a condition to be met.
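A minimal sketch of such a polling helper, using only the standard library. wait_until is a hypothetical name, and the background "job" in the example test is simulated with threading.Timer. The point is that the test moves on as soon as the condition holds instead of always paying for a fixed sleep, and fails with a clear error if it never does.

```python
import threading
import time


def wait_until(condition, timeout=5.0, interval=0.05):
    """Call condition() repeatedly until it returns something truthy.

    Returns that truthy value, or raises TimeoutError once `timeout` seconds have passed.
    """
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(interval)


def test_background_job_finishes():
    # Simulated background work: a timer marks the job as done after 0.2 seconds.
    job_done = threading.Event()
    threading.Timer(0.2, job_done.set).start()

    # Instead of a fixed time.sleep(5), poll and move on as soon as the job is done.
    assert wait_until(job_done.is_set, timeout=5.0)
```

Using time.monotonic() rather than time.time() keeps the deadline correct even if the system clock changes during the test.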

Configure Pytest Config Files Correctly

Optimizing configuration files like pytest.ini and conftest.py can significantly enhance the runtime efficiency of your test suite.

You can fine-tune these files for better performance.

Optimizing pytest.ini

By default, Pytest looks for test files in the current directory and all its sub-directories. You can set the testpaths option to point only at your test folders:

```ini
[pytest]
testpaths =
    tests
    integration
```

- Disable or reduce Pytest verbosity.
- Avoid unnecessary captured logs (caplog) and outputs (capsys). If you’re unfamiliar, you can read more about how to capture logs using Pytest caplog and capturing stdout/stderr here. Disabling output capture where it isn’t needed can help optimise runtime.
- Define custom markers in pytest.ini to help you selectively run tests. Define markers for different categories (e.g. smoke, slow) and use them to include or exclude tests at runtime (pytest -m "smoke").
- Drop unnecessary environment variables.

Optimizing conftest.py

- Drop unused fixtures and avoid repeated setup/teardown using the scope parameter.
- Use the pytest_addoption and pytest_generate_tests hooks to add custom command-line options and dynamically alter test behaviour or parameters based on these options.
- If your tests rely on data files, the way you load them can impact performance. Consider lazy loading or caching strategies for test data in fixtures to avoid unnecessary I/O operations.

Plugin Housekeeping

Ditch unused plugins: Pytest loads every installed plugin each time it runs, and unnecessary ones can slow down your suite.

Perform regular cleanups of your requirements.txt, pyproject.toml or other config and dependency files to keep things lean.

Plugins like pytest-incremental can be useful to help detect and run changed test files.

This allows for a faster feedback loop.

Reduce or Limit Network Access

Network calls in tests, such as requests to web services, databases, or APIs, can significantly slow down your test suite.

These calls introduce latency, potential for network-related failures, and dependency on external systems, which might not always be desirable.

Consider selective mocking practices where it makes sense, such as in unit tests. Integration tests might still need to make real network calls.

Plugins like pytest-socket can prevent inadvertent internet access with the option to override when necessary.

Use Temporary Files and Directories

Relying on the filesystem introduces potential errors due to changes in file structure or content, and can slow down tests due to disk read/write operations and system constraints.

To address this, you can mock file systems using the mocker fixture provided by the pytest-mock plugin, built atop the unittest.mock library.

Other strategies include using in-memory filesystems like pyfakefs, which create a fake filesystem in memory that mimics real file interactions.

A useful strategy is to leverage Pytest built-in fixtures like tmp_path and tmpdir that provide temporary file and directory handling for test cases.

If you’re not familiar with how to use these, we’ve written a step-by-step guide on how to use the tmp_path fixture.
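As a quick illustration of tmp_path, here is a minimal sketch. write_report is a hypothetical function under test; tmp_path is the built-in Pytest fixture that hands each test a fresh pathlib.Path directory, so the test never touches real project files.

```python
import csv


def write_report(path, rows):
    """Hypothetical function under test: writes (name, score) rows to a CSV file."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["name", "score"])
        writer.writerows(rows)


def test_write_report(tmp_path):
    # tmp_path is a unique, per-test temporary directory provided by Pytest.
    report = tmp_path / "report.csv"
    write_report(report, [("ada", 10), ("bob", 7)])

    lines = report.read_text(encoding="utf-8").splitlines()
    assert lines == ["name,score", "ada,10", "bob,7"]
```

Pytest keeps only the most recent few temporary base directories and removes older ones automatically.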

Bonus - Consider Setting Up A Pytest Daemon (Advanced)

I came across an interesting read from Discord while writing this article.

It’s titled “PYTEST DAEMON: 10X LOCAL TEST ITERATION SPEED”, which piqued my curiosity.

The author goes on to describe how the folks at Discord were able to reduce the median test duration from 20 seconds down to 2 seconds!

That’s very impressive!

By setting up a “pytest daemon” and “hot reloading” the changed files using importlib.reload(module), they achieved better runtimes.

In the article, they also referred to this pytest-hot-reloading plugin from James Hutchinson.

It looks pretty cool and I may write an article in the future on how to use it so don’t forget to bookmark this website.
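The core mechanic behind hot reloading, re-executing a changed module inside an already-running process instead of starting Python from scratch, can be seen with importlib.reload on its own. This sketch is not Discord’s daemon or the pytest-hot-reloading plugin; it only demonstrates reload on a throwaway module, and hot_module and demo_hot_reload are hypothetical names.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def demo_hot_reload():
    """Import a throwaway module, edit its source on disk, then reload it in place."""
    with tempfile.TemporaryDirectory() as tmp:
        source = Path(tmp) / "hot_module.py"  # hypothetical module for the demo
        source.write_text("VALUE = 1\n")
        sys.path.insert(0, tmp)
        importlib.invalidate_caches()  # make sure the import system sees the new file
        try:
            module = importlib.import_module("hot_module")
            before = module.VALUE

            # Simulate an edit while the process (e.g. a long-lived daemon) keeps running.
            # The new source has a different size, so a cached .pyc is not reused.
            source.write_text("VALUE = 42\n")
            importlib.reload(module)  # re-executes the code in the same module object
            after = module.VALUE
        finally:
            sys.path.remove(tmp)
            sys.modules.pop("hot_module", None)
    return before, after
```

One caveat: reload updates the module object itself, but names copied elsewhere with from module import name still point at the old objects, which is part of why a real test daemon needs more machinery than a single reload call.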

Conclusion

OK, these are all the tips I have for you right now.

I’ll update with more strategies as I come across them in my own experience and learnings.

In this article, you learned several ways to profile, measure and optimise the runtime speed of your test suite.

From strategies like parallel testing to database setup teardown, mocking to cleaning up plugins, and fixtures to using custom daemons — there are many ways to optimise Pytest test runs.

It’s best to start with collection and profiling so you know exactly what is taking the most time — is it test collection, one particular test module, sleep, wait, or external network calls?

Whatever it is, there’s most likely an optimisation for it. It’s up to you to invest the time and save yourself some runtime and money.

With this knowledge in mind, you can now design your test strategy from the ground up, without the need to carry out massive refactors later.

If you have ideas for improvement or would like me to cover anything specific, please send me a message via Twitter, GitHub or Email.

Till the next time… Cheers!

Additional Reading

This article was inspired by interesting posts shared by some wonderful people who were happy to share their ideas and practices.

Profiling and improving the runtime of a large pytest test suite

GitHub - zupo/awesome-pytest-speedup: A collection of tips, tricks and links to help you speed up..

Day 3 - Track 2 - Fast tests

pytest daemon: 10X Local Test Iteration Speed

Improving pytest’s --collect-only Output

How To Run A Single Test In Pytest (Using CLI And Markers)

Maximizing Quality - A Comprehensive Look at Testing in Software Development

How To Measure And Improve Code Efficiency with Pytest Benchmark (The Ultimate Guide)

8 Useful Pytest Plugins To Make Your Python Unit Tests Easier, Faster and Prettier

A Simple Guide to Fixing The ‘Pytest Collected 0 Items’ Error

How to Effortlessly Generate Unit Test Cases with Pytest Parameterized Tests

What is Setup and Teardown in Pytest? (Importance of a Clean Test Environment)

What Are Pytest Fixture Scopes? (How To Choose The Best Scope For Your Test)

Save Money On Your CI/CD Pipelines Using Pytest Parallel (with Example)

Python REST API Unit Testing for External APIs

Introduction to Pytest Mocking - What It Is and Why You Need It

What Is pytest.ini And How To Save Time Using Pytest Config


Ultimate Guide To Pytest Markers And Good Test Management

How to Auto-Request Pytest Fixtures Using “Autouse”

How To Debug Failing Tests Like A Pro (Use Pytest Verbosity Options)

What Is Pytest Caplog? (Everything You Need To Know)

The Ultimate Guide To Capturing Stdout/Stderr Output In Pytest

A Beginner’s Guide To pytest_generate_tests (Explained With 2 Examples)

How To Manage Temporary Files with Pytest tmp_path