Save Money On Your CI/CD Pipelines Using Pytest Parallel (with Example)

So you’re a backend developer or data engineer, and you probably stumbled across this article while looking to speed up your Unit Tests.

Test-driven development (TDD) is the practice of writing unit tests before, or alongside, the actual source code.

Why? Because it makes you think of edge cases and functionality you generally don’t think of when writing source code.

That’s great. But what about running these tests, possibly hundreds of them?

It could be that you’re running tests against large or varied sample data and they’re taking too long.

One way to speed up your Unit Tests is by using pytest monkeypatch or mocking.
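As a minimal sketch of the mocking approach, the snippet below uses the standard library’s unittest.mock.patch.object to swap a slow dependency for a stub during a test. SampleDataClient and count_even are hypothetical names made up for illustration, and time.sleep stands in for slow I/O such as a database or API call. Inside pytest tests, the monkeypatch fixture (monkeypatch.setattr) can do the same job.

```python
import time
from unittest.mock import patch


class SampleDataClient:
    """Hypothetical client whose fetch() is slow (e.g. a database or API call)."""

    def fetch(self) -> list[int]:
        time.sleep(5)  # stands in for slow I/O
        return list(range(1_000_000))


def count_even(client: SampleDataClient) -> int:
    """Hypothetical code under test: counts the even values returned by the client."""
    return sum(1 for value in client.fetch() if value % 2 == 0)


def test_count_even_with_mock() -> None:
    # Replace the slow fetch() with a stub returning a tiny, known dataset,
    # so the test checks count_even() without waiting on the slow call.
    with patch.object(SampleDataClient, "fetch", return_value=[1, 2, 3, 4]):
        assert count_even(SampleDataClient()) == 2
```

Run under pytest, this test finishes almost instantly instead of sleeping for five seconds, and the original fetch() is restored when the with block exits.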

While that’s good for some use cases, the biggest performance gains often come from running tests in parallel. And that’s probably why you’re here.

In this article, we’ll look at why parallelization is a good idea, how to measure execution time, and how to parallelize unit tests in pytest using the pytest-xdist plugin.

All with a Real Example.

- Problems With Huge # Of Unit Tests

- Longer Development Cycle

- Higher Computation Time/Costs, Slower CI/CD Pipeline

- How To Measure Unit Test Execution Times In Pytest

- Parallelize Your Tests — Real Example

- Conclusion

Let’s begin

Problems With Huge # Of Unit Tests

A mono-repo shouldn’t be a thing.

But in reality, the world is not perfect, and source code isn’t always nicely split into tiny repositories.

Even with Domain-Driven Design, there are often a lot of interdependencies, which leads to a lot of source code in one place.

Source code requires Unit Tests.

Hence, running large numbers of Unit Tests locally or in a CI/CD Pipeline is often both a reality and a challenge.

Why is it a challenge? Let’s see.

Longer Development Cycle

With Pytest and most Unit Testing Frameworks, you can conveniently run only the tests you need.

For example, to run only test_odd_numbers from the example later in this article:

```bash
pytest ./tests/unit/test_parallel_examples.py::test_odd_numbers -v -s
```

So that’s good. But what if you need to run all the tests, for example as part of a pre-commit hook or a CI/CD Pipeline?

If you have hundreds of tests and run them sequentially, this can take ages and rack up the bill.

We’ll take a look at an example below and see the difference in execution time when running tests sequentially and in parallel.

Higher Computation Time/Costs, Slower CI/CD Pipeline

A lot of the heavy lifting in running Unit Tests falls on your CI/CD Pipeline.

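Whether you use GitHub Actions, Jenkins or AWS CodeBuild, your compute cost generally scales with execution time: billed build minutes on hosted services, or machine time on self-hosted runners.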

It’s not rocket science: execution time grows with the number of tests, especially when you run them sequentially.
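To make this concrete, here’s a back-of-the-envelope model. It is an assumption for illustration, not how pytest-xdist or any CI provider actually schedules work: sequential wall-clock time is the sum of all test durations, while with N workers it’s roughly the load of the busiest worker. The sketch assigns tests greedily, longest first, to the least-loaded worker, and the 200 three-second tests are made-up numbers.

```python
import heapq


def sequential_time(durations: list[float]) -> float:
    """Tests run one after another: total time is the sum of durations."""
    return sum(durations)


def parallel_time(durations: list[float], workers: int) -> float:
    """Estimate wall-clock time with `workers` processes.

    Greedy heuristic: longest test first, onto the least-loaded worker.
    Ignores worker start-up and collection overhead, so real runs will be slower.
    """
    loads = [0.0] * workers  # min-heap of per-worker busy time
    for duration in sorted(durations, reverse=True):
        least_loaded = heapq.heappop(loads)
        heapq.heappush(loads, least_loaded + duration)
    return max(loads)


# Hypothetical suite: 200 tests taking 3 seconds each.
suite = [3.0] * 200
print(sequential_time(suite))           # 600.0
print(parallel_time(suite, workers=4))  # 150.0
```

Sequential time grows linearly with the number of tests, while parallel time grows roughly with the number of tests divided by the number of workers.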

Parallelizing your Unit Tests will save you time and money.

It will also speed up your CI/CD Pipeline, which means faster releases and deployments to Production.

Now that you’re convinced, let’s look at how to measure the execution time followed by an example.

How To Measure Unit Test Execution Times In Pytest

Say you have several Unit Tests and want to know how long each one takes.

Here’s a helpful command you can run to get the execution time per test:

```bash
pytest ./tests/unit/ -v -s --durations=0
```

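You can tweak the --durations flag: --durations=0 reports the duration of every test, while --durations=N (for example, --durations=5) shows only the N slowest tests, as detailed in the pytest documentation. Note that pytest hides very short durations (under 0.005s) unless you run with -vv.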
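If you’d like to keep the timings yourself (for example, to track slow tests across CI runs), a conftest.py can collect them with pytest’s hook functions. This is a minimal sketch, assuming you only care about the test body (the "call" phase) and that a sorted printout is enough; the call_durations dict and the summary format are illustrative choices, not part of pytest.

```python
# conftest.py: collect per-test durations via pytest hooks (illustrative sketch)

# Maps test node IDs (e.g. "tests/unit/test_x.py::test_y") to seconds spent in the test body.
call_durations: dict[str, float] = {}


def pytest_runtest_logreport(report) -> None:
    """Called by pytest for the setup, call and teardown phases of every test."""
    if report.when == "call":
        call_durations[report.nodeid] = report.duration


def pytest_terminal_summary(terminalreporter, exitstatus, config) -> None:
    """Print the collected durations, slowest first, at the end of the run."""
    terminalreporter.write_line("Per-test call durations (slowest first):")
    for nodeid, seconds in sorted(
        call_durations.items(), key=lambda item: item[1], reverse=True
    ):
        terminalreporter.write_line(f"{seconds:8.3f}s  {nodeid}")
```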

Parallelize Your Tests — Real Example

OK now that we’ve reviewed the concepts and are convinced of the benefits, let’s look at how to do this with code.

In the repo’s source code folder /parallel_examples/, you’ll find the file core.py.

In this bit of code, we have 3 functions:

- odd_numbers(): given a size n, add the odd numbers from 0 to n - 1 to a list.

- even_numbers(): given a size n, add the even numbers from 0 to n - 1 to a list.

- all_numbers(): given a size n, add all numbers from 0 to n - 1 to a list.

core.py

```python
def odd_numbers(size: int) -> list:
    """
    Function to add Odd Numbers to a list
    :param size: Upper bound (exclusive), Type - int
    :return: List
    """
    number_list = []
    # Comprehension used only for its side effect: append each odd n in [0, size)
    [number_list.append(n) for n in range(0, size) if n % 2 != 0]
    return number_list


def even_numbers(size: int) -> list:
    """
    Function to add Even Numbers to a list
    :param size: Upper bound (exclusive), Type - int
    :return: List
    """
    number_list = []
    # Comprehension used only for its side effect: append each even n in [0, size)
    [number_list.append(n) for n in range(0, size) if n % 2 == 0]
    return number_list


def all_numbers(size: int) -> list:
    """
    Function to add All Numbers to a list
    :param size: Upper bound (exclusive), Type - int
    :return: List
    """
    number_list = []
    # Comprehension used only for its side effect: append every n in [0, size)
    [number_list.append(n) for n in range(0, size)]
    return number_list
```
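As an aside, a list comprehension used purely for its side effect (calling append) also builds and discards a second list of None values. If you adapt this example, a more idiomatic sketch builds the list directly, and range with a step of 2 avoids the modulo check altogether. These versions are faster than the originals, so your timings would not match the ones reported in this article.

```python
def odd_numbers(size: int) -> list[int]:
    """Odd numbers in the range [0, size)."""
    return list(range(1, size, 2))


def even_numbers(size: int) -> list[int]:
    """Even numbers in the range [0, size)."""
    return list(range(0, size, 2))


def all_numbers(size: int) -> list[int]:
    """All numbers in the range [0, size)."""
    return list(range(size))


print(odd_numbers(10))   # [1, 3, 5, 7, 9]
print(even_numbers(10))  # [0, 2, 4, 6, 8]
```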

The Unit Tests are defined in the /tests folder.

test_parallel_examples.py

```python
from parallel_examples.core import odd_numbers, even_numbers, all_numbers

# Large enough that each test takes seconds rather than microseconds
size = 50000000


def test_odd_numbers() -> None:
    """
    Test to add Odd Numbers to a List
    :return: None
    """
    result = odd_numbers(size=size)


def test_even_numbers() -> None:
    """
    Test to add Even Numbers to a List
    :return: None
    """
    result = even_numbers(size=size)


def test_all_numbers() -> None:
    """
    Test to add All Numbers to a List
    :return: None
    """
    result = all_numbers(size=size)
```

As you can see, the tests don’t assert anything; they simply call each function with size = 50000000. That’s because with a small size, each test finishes in microseconds and we can’t really see a difference between sequential and parallel runs.
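If you want the tests to check something as well as take time, a minimal sketch with assertions might look like this. The expected counts follow from range(0, size): size // 2 odd numbers, (size + 1) // 2 even numbers and size numbers in total. The three functions are equivalent copies so the snippet runs on its own (in the repo you’d import them from parallel_examples.core), and SIZE is a hypothetical, smaller value than the article’s 50000000 to keep memory use modest.

```python
# Equivalent copies of the article's functions so this sketch runs standalone.
def odd_numbers(size: int) -> list:
    return [n for n in range(0, size) if n % 2 != 0]


def even_numbers(size: int) -> list:
    return [n for n in range(0, size) if n % 2 == 0]


def all_numbers(size: int) -> list:
    return list(range(0, size))


# Hypothetical size; odd on purpose so the odd and even counts differ by one.
SIZE = 1_000_001


def test_odd_numbers() -> None:
    result = odd_numbers(size=SIZE)
    assert len(result) == SIZE // 2
    assert all(n % 2 == 1 for n in result)


def test_even_numbers() -> None:
    result = even_numbers(size=SIZE)
    assert len(result) == (SIZE + 1) // 2
    assert result[0] == 0 and result[-1] == SIZE - 1


def test_all_numbers() -> None:
    result = all_numbers(size=SIZE)
    assert len(result) == SIZE
    assert result[0] == 0 and result[-1] == SIZE - 1
```

pytest collects the test_ functions automatically, so the same sequential and parallel commands apply unchanged.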

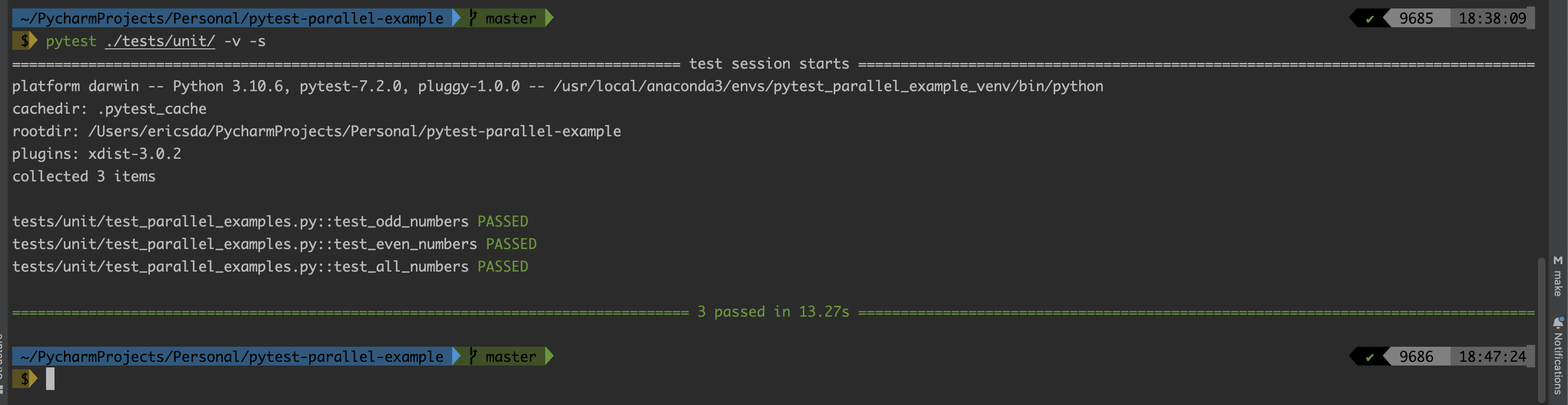

Now, when we run the tests sequentially using:

```bash
pytest ./tests/unit/ -v -s
```

We can see that the tests took 13.27 seconds to pass.

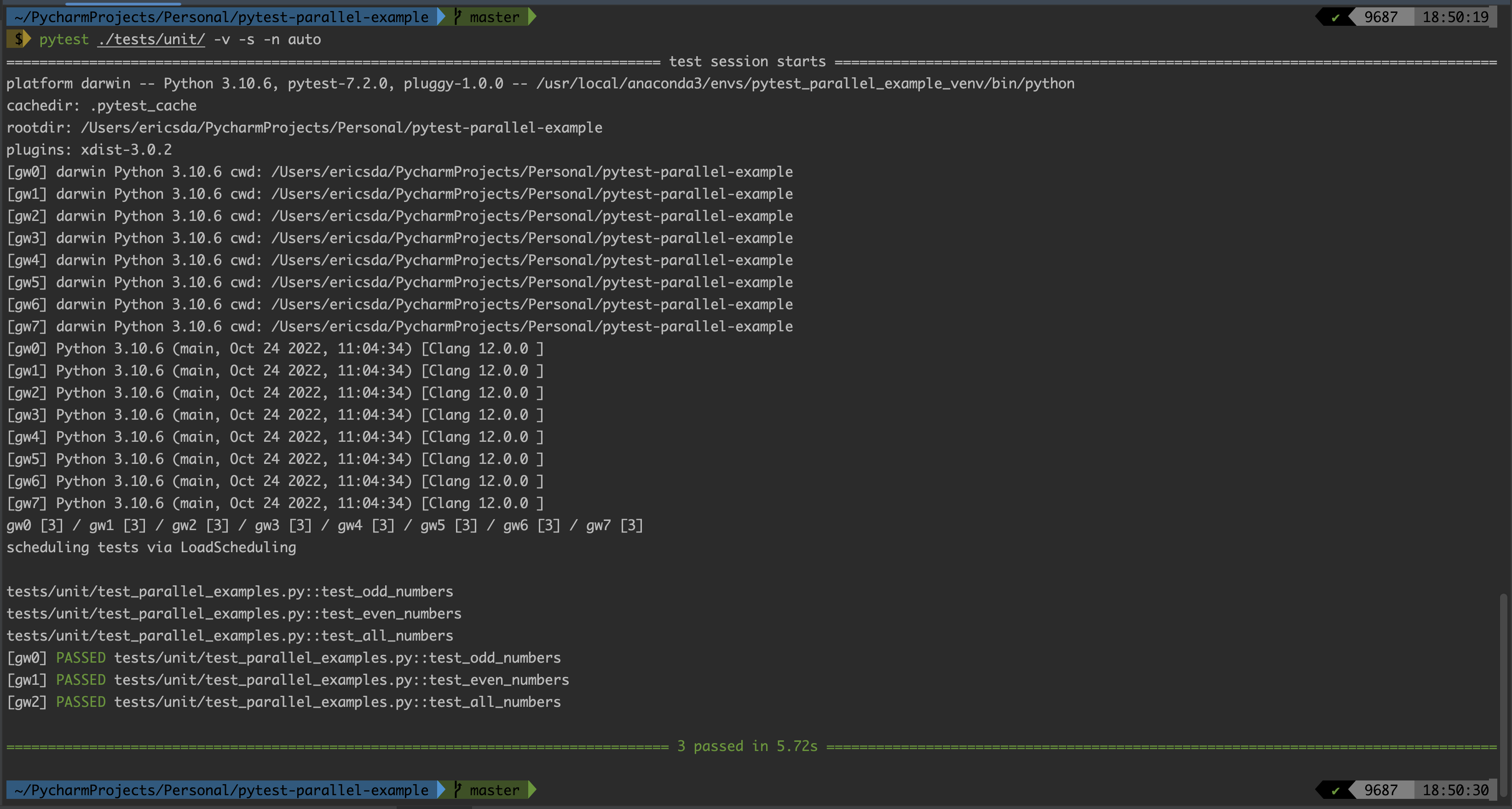

Now let’s run them in parallel using the pytest-xdist plugin (install it with pip install pytest-xdist):

```bash
pytest ./tests/unit/ -v -s -n auto
```

Note: the -n auto flag lets pytest-xdist choose the number of worker processes based on the number of CPU cores on your machine.

The tests took 5.72 seconds. That’s roughly a 57% reduction in wall-clock time, or about 2.3x faster.
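Both figures follow directly from the two measured timings. Here’s the arithmetic as a quick Python check, using the 13.27 s sequential run and the 5.72 s parallel run:

```python
sequential_s = 13.27  # sequential run: pytest ./tests/unit/ -v -s
parallel_s = 5.72     # parallel run: pytest ./tests/unit/ -v -s -n auto

reduction = (sequential_s - parallel_s) / sequential_s  # fraction of time saved
speedup = sequential_s / parallel_s                     # how many times faster

print(f"Time reduction: {reduction:.1%}")  # Time reduction: 56.9%
print(f"Speedup: {speedup:.2f}x")          # Speedup: 2.32x
```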

These results will vary with your hardware, but the difference becomes more noticeable as your test suite grows.

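You can try increasing size (n) further and comparing execution times; you may be surprised by how much time you can save. Keep in mind, though, that pytest-xdist distributes whole tests across workers, so with only three tests the speedup here can’t exceed roughly 3x. Suites with many tests benefit more from additional workers.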

For hundreds of Unit Tests across your CI/CD pipelines, that could add up to a valuable saving.

Conclusion

I hope this article was helpful and that you learnt how to parallelize your Python Unit Tests to save execution time and $$.

We looked at some of the problems faced when running unit tests sequentially, such as higher costs and longer CI/CD execution times.

We looked at a real example and measured the difference in execution time: parallel execution cut the run time by roughly 57%, from 13.27 to 5.72 seconds.

Really excited to see you apply these to your unit tests at work or personal projects.

In subsequent articles, we’ll explore more strategies to further improve Unit Test execution time and simplicity.

Till the next time… Cheers!

Additional Reading

- How To Measure Unit Test Execution Times In Pytest

- Test Parallelization Using Python and Pytest (python.plainenglish.io)