An Ultimate Guide To Using Pytest Skip Test And XFail - With Examples

Have you ever had to ship code without fully functional Unit Tests?

In an ideal world, you have all the time in the world to write thorough Unit and Integration tests.

But the real world wants features and bug fixes, like yesterday. This presents a challenge.

Fortunately, you can “skip” tests related to an upcoming feature or bug fix that isn’t ready yet, or mark them as expected to “fail”.

Or perhaps you don’t want to run some tests every single time (e.g. tests that connect to an external DB or API).

Pytest’s Skip Test and XFail functionality lets you conveniently skip a test, or mark it as expected to fail, on purpose.

We’ll look at several reasons why you’d skip or fail a unit test and how to do it, with a real example.

We’ll explore conditional and unconditional skipping as well as the risks, pros and cons of skipping or failing Unit Tests.

- Why Skip A Unit Test?

- Incompatible Python or Package Version

- Platform Specific (Windows, Mac, Linux)

- External Resource Dependency — Databases or APIs

- Local Dependencies

- Pre-Commit Hooks & Local AWS Profiles

- Pytest Skip Test — Examples

- Quick Repo/Code Explanation

- Unconditional Skipping

- Skip Single Test Function

- Skip Test Class

- Skip Test Function — Using Marker within Test Body

- Conditional Skipping

- Skip If Python Version ≥ X

- Skip All Tests

- Skip If Module Import Fails

- Skip On Platform

- XFail — Basic Usage Example

- XFail vs Skip Test

- Conclusion

- Additional Reading

Let’s get started!

Why Skip A Unit Test?

Perhaps you’re asking yourself why on earth would you want to skip a Unit Test.

If you’re going to skip it, why write it in the first place?

I asked myself the same question until I dived into the use cases where skipping or intentionally failing unit tests made sense.

Let’s take a look.

Incompatible Python or Package Version

A lot of applications may have been built on earlier versions of Python or another package and may not work on newer versions. Or vice versa.

Although MINOR and PATCH releases are usually backward compatible, MAJOR releases often introduce breaking changes that lead to Unit Test failures.

Platform Specific (Windows, Mac, Linux)

Let’s say (as is often the case) that some colleagues use a Windows machine while others develop on macOS or Linux.

There may be instances where your Unit Tests don’t run on Windows.

Or where a test is explicitly designed to run only on Windows.

Either way, skipping tests based on the platform covers both cases.

External Resource Dependency — Databases or APIs

If your Unit Tests require a connection to an external database or API and you don’t want to run them every time, you could skip or fail those tests.

Other ways to avoid hitting the real resource include pytest’s monkeypatch fixture or mocking.

This article on Python Rest API Unit Testing covers various Rest API testing methods in detail.

Or if the external API is unavailable and you need to deploy your application with a patch for the time being, intentionally failing or skipping a test can be a good workaround.
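A minimal sketch of an opt-in external test, using a skipif marker driven by an environment variable. The variable name RUN_EXTERNAL_TESTS, the helper and the test body are hypothetical placeholders rather than part of the example repo:

```python
import os

import pytest


def external_tests_enabled() -> bool:
    # Hypothetical opt-in flag: external tests run only when RUN_EXTERNAL_TESTS=1.
    return os.environ.get("RUN_EXTERNAL_TESTS") == "1"


# Reusable marker; skipif evaluates this condition once, when the module is imported.
requires_external_api = pytest.mark.skipif(
    not external_tests_enabled(),
    reason="set RUN_EXTERNAL_TESTS=1 to run tests that call the external API",
)


@requires_external_api
def test_external_rates_api() -> None:
    # Hypothetical placeholder; a real test would call the external API here.
    response_status = 200
    assert response_status == 200
```

With this in place, running `RUN_EXTERNAL_TESTS=1 pytest -rs` would include the test; without the variable, pytest reports it as skipped with the reason above. Because the condition is evaluated at import time, the variable must be set before pytest starts.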

Local Dependencies

Developers often write and run unit tests against local dependencies, e.g. local files or uncommitted folders.

These may be essential for local development but won’t be available in your CI/CD pipeline or on a colleague’s computer.

This is a good reason to skip a test.

That said, you should always keep your code portable and environment agnostic.
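For local files, one option is to put the skip inside a fixture, so every test that requests it is skipped rather than failed when the file is missing. A sketch, assuming a hypothetical uncommitted file at data/local_rates.csv, resolved relative to the directory you run pytest from:

```python
from pathlib import Path

import pytest

# Hypothetical, uncommitted file that only exists on a developer's machine.
LOCAL_RATES_FILE = Path("data/local_rates.csv")


def require_local_file(path: Path) -> Path:
    """Skip the calling test (or fixture) when `path` does not exist."""
    if not path.exists():
        pytest.skip(f"local file not found: {path}")
    return path


@pytest.fixture
def local_rates_file() -> Path:
    # Every test requesting this fixture is skipped, not failed, in CI.
    return require_local_file(LOCAL_RATES_FILE)


def test_reads_local_rates(local_rates_file: Path) -> None:
    assert local_rates_file.read_text().strip() != ""
```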

Pre-Commit Hooks & Local AWS Profiles

Moto, a popular library for mocking AWS in Unit Tests, uses the default AWS CLI profile, so developers who don’t maintain a default CLI profile may get errors when pushing code to GitHub.

Thankfully, pre-commit hooks like detect-aws-credentials exist to make life easier.

This could be one reason to skip the test (although not recommended).

Pytest Skip Test — Examples

Having looked at various reasons why you might consider skipping a Unit Test, let’s look at HOW to do this with a few examples for Unconditional and Conditional Skipping.

Quick Repo/Code Explanation

In our example repo, we have 2 key functions defined in the interest_calculator module.

The core.py file contains 2 functions: a Simple Interest Calculator and a Compound Interest Calculator.

We’ve deliberately kept this simple so we can focus on the syntax for the pytest skip test and xfail rather than the complexity of the source code.

Several Unit Tests are defined under the /tests/unit directory.

core.py

```python
import logging

# Standard module-level logger (assumed setup; the snippet only uses `logger`).
logger = logging.getLogger(__name__)


def simple_interest(
    principal: int | float, time_: int | float, rate: int | float
) -> int | float:
    """
    Function to calculate Simple Interest
    :param principal: Principal Amount
    :param time_: Time
    :param rate: Rate of Interest
    :return: Simple Interest Value
    """
    logger.debug(f"Principal - {principal}")
    logger.debug(f"Time - {time_}")
    logger.debug(f"Rate - {rate}")
    si = (principal * time_ * rate) / 100
    logger.info(f"The Simple Interest is {si}")
    return si


def compound_interest(
    principal: int | float, time_: int | float, rate: int | float
) -> int | float:
    """
    Function to calculate Compound Interest
    :param principal: Principal Amount
    :param time_: Time
    :param rate: Rate of Interest
    :return: Compound Interest Value
    """
    logger.debug(f"Principal - {principal}")
    logger.debug(f"Time - {time_}")
    logger.debug(f"Rate - {rate}")
    # Note: this raises principal * (1 + rate / 100) as a whole to the power time_,
    # which is what the example tests assert (110250000.0 for 10000, 2, 5). The
    # standard compounded amount is principal * (1 + rate / 100) ** time_.
    ci = (principal * (1 + rate / 100)) ** time_
    logger.info(f"The Compound Interest is {ci}")
    return ci
```

Unconditional Skipping

First let’s look at how we can skip tests unconditionally.

Skipping tests unconditionally uses the @pytest.mark.skip marker. You can (and should) also add a reason argument.

In the file test_interest_calculator.py, the first 3 tests are unconditionally skipped.

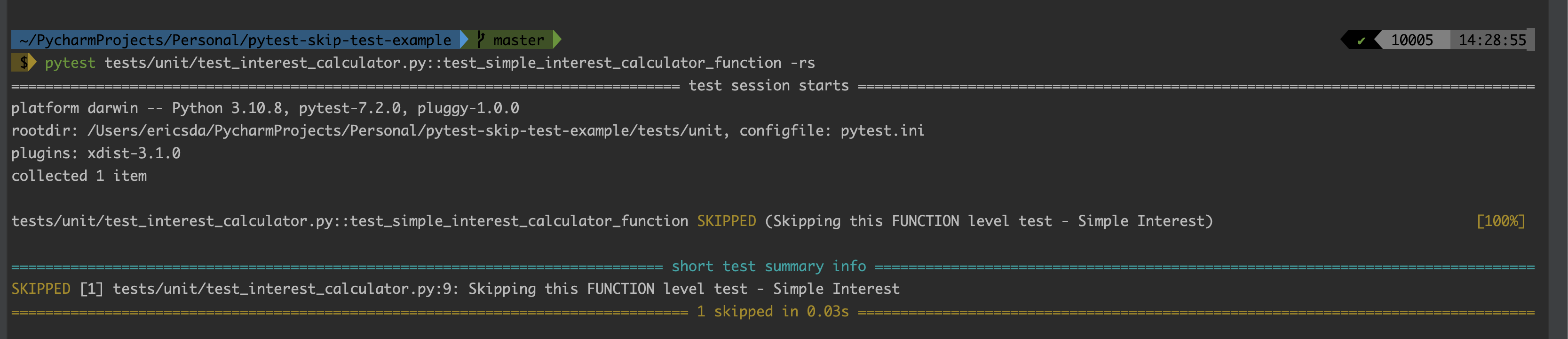

Skip Single Test Function

To skip a single test function, place the @pytest.mark.skip marker directly above it. Note the use of the -rs argument, which prints the skip reason in the test summary.

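test_interest_calculator.py

```python
import pytest
from interest_calculator.core import simple_interest  # assumed import path


@pytest.mark.skip(reason="Simple Interest Calculation Not Needed")  # illustrative reason
def test_simple_interest_calculator_function() -> None:
    value = simple_interest(8, 6, 8)
    assert value == 3.84
```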

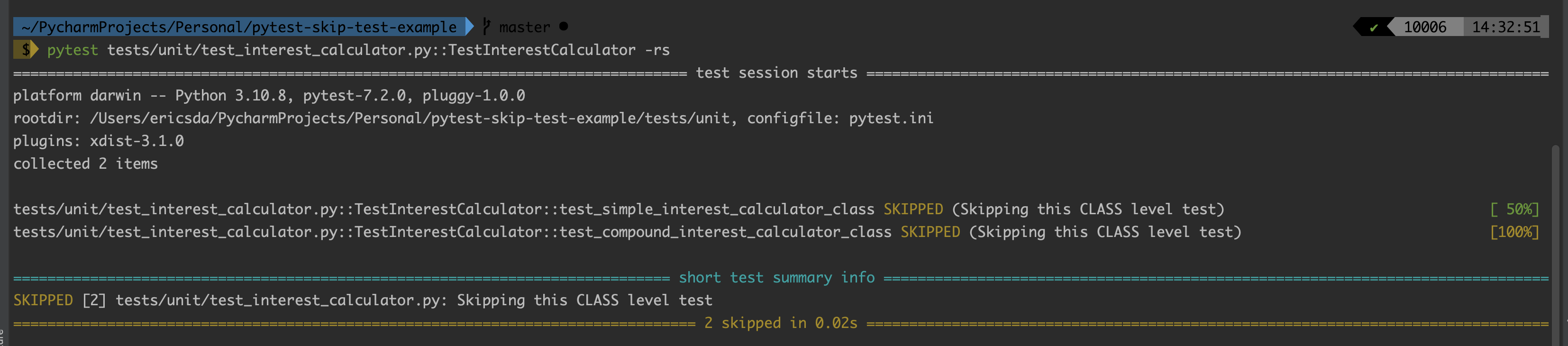
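To see the -rs behaviour end to end, the sketch below writes a throwaway test file into a temporary directory and runs it with pytest.main(), the in-process equivalent of the pytest command. The file name and reason strings are illustrative:

```python
import tempfile
import textwrap
from pathlib import Path

import pytest

# Throwaway test module with one decorated skip and one imperative skip.
DEMO_TESTS = textwrap.dedent(
    """
    import pytest

    @pytest.mark.skip(reason="Simple Interest Calculation Not Needed")
    def test_decorated_skip():
        assert False  # never runs

    def test_imperative_skip():
        pytest.skip("Compound Interest Calculation Not Needed")
    """
)


def run_demo() -> int:
    with tempfile.TemporaryDirectory() as tmp:
        test_file = Path(tmp) / "test_skip_reasons_demo.py"
        test_file.write_text(DEMO_TESTS)
        # Equivalent to `pytest -rs test_skip_reasons_demo.py`; "-p no:cacheprovider"
        # keeps pytest from writing a .pytest_cache folder into the temp directory.
        return int(pytest.main(["-rs", "-p", "no:cacheprovider", str(test_file)]))
```

Because both tests are skipped rather than failed, run_demo() should return exit code 0, and the short test summary lists each skip reason.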

Skip Test Class

You can also skip an entire test class by placing the marker above the class definition:

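test_interest_calculator.py

```python
import pytest
from interest_calculator.core import compound_interest, simple_interest  # assumed import path


@pytest.mark.skip(reason="Class-based tests not needed")  # illustrative reason
class TestInterestCalculator:
    def test_simple_interest_calculator_class(self) -> None:
        value = simple_interest(8, 6, 8)
        assert value == 3.84

    def test_compound_interest_calculator_class(self) -> None:
        value = compound_interest(10000, 2, 5)
        assert value == 110250000.0
```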

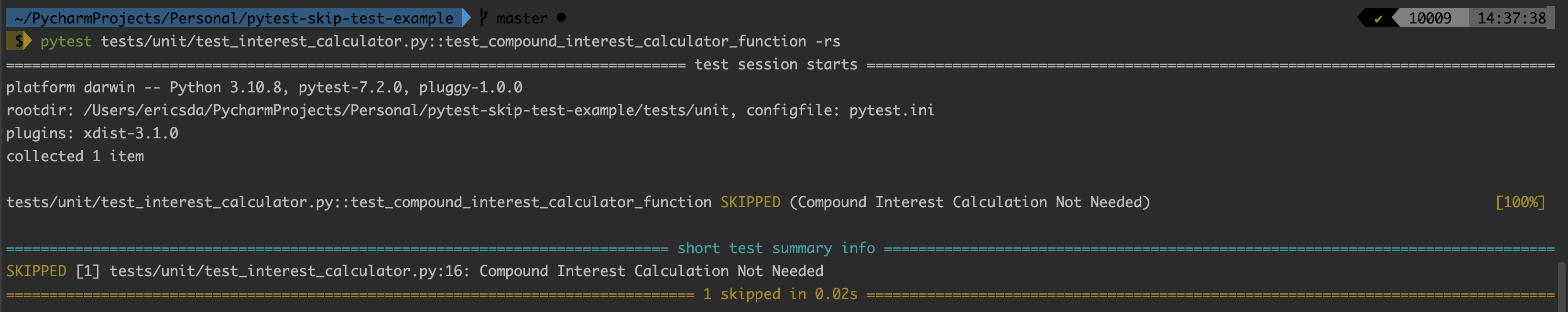

Skip Test Function — Using Marker within Test Body

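You can also skip a test imperatively by calling pytest.skip() inside the test body. Note that pytest.skip() is a function rather than a marker: the test stops at that line, so the code after it never runs.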

test_interest_calculator.py

```python
import pytest
from interest_calculator.core import compound_interest  # assumed import path


def test_compound_interest_calculator_function() -> None:
    pytest.skip("Compound Interest Calculation Not Needed")
    value = compound_interest(10000, 2, 5)
    assert value == 110250000.0
```

If you don’t see the reason in the output, add the -rs flag to your pytest command.

Always include a reason; never assume people will figure out why you chose to skip or fail a Unit Test.
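Calling pytest.skip() inside the test is most useful when the condition is only known at runtime, for example after loading data. A sketch with a hypothetical load_rate_table() helper standing in for that runtime check:

```python
import pytest


def load_rate_table() -> dict[str, float]:
    # Hypothetical helper; imagine this reads rates from a service or file.
    return {"simple": 8.0}


def test_compound_rate_available() -> None:
    rates = load_rate_table()
    if "compound" not in rates:
        # The decision depends on data loaded inside the test, so it is made at runtime.
        pytest.skip("no compound rate configured")
    assert rates["compound"] > 0
```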

Conditional Skipping

Now that we’ve explored unconditional skipping, let’s look at how to implement Pytest skip test based on specific conditions.

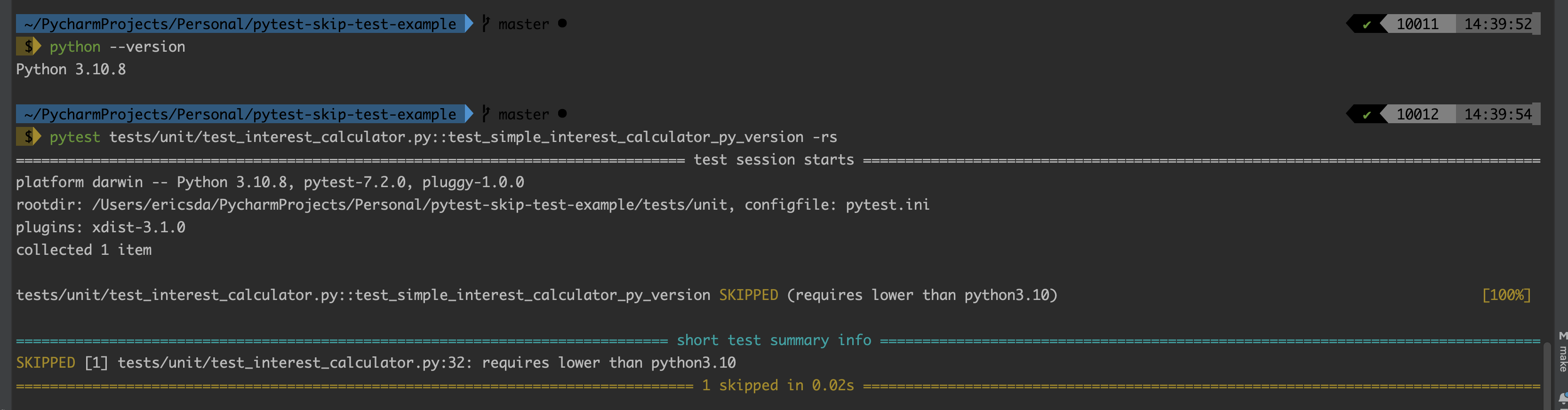

Skip If Python Version ≥ X

As mentioned earlier, some legacy applications may use older versions of Python, or you may have built something new that requires Python 3.11 or later.

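test_interest_calculator.py

```python
import sys
import pytest
from interest_calculator.core import simple_interest  # assumed import path

@pytest.mark.skipif(
    sys.version_info >= (3, 10), reason="requires Python < 3.10"  # illustrative reason
)
def test_simple_interest_calculator_py_version() -> None:
    value = simple_interest(8, 6, 8)
    assert value == 3.84
```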

Here’s an example where we wish to skip the test if we’re running Python ≥ 3.10.

This works both ways: flip the comparison, e.g. sys.version_info < (3, 11), to skip older interpreters instead, which suits newer applications.

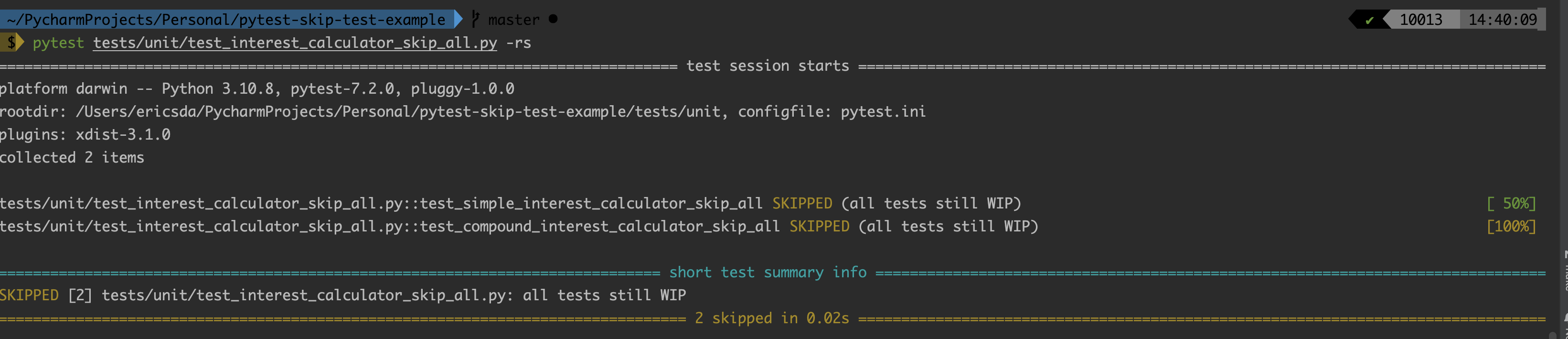
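When several tests share a version requirement, you can define the skipif marker once and reuse it. A sketch; the marker names and the 3.10/3.11 cut-offs are illustrative:

```python
import sys

import pytest

# Reusable markers; each condition is evaluated once, at import time.
requires_py311 = pytest.mark.skipif(
    sys.version_info < (3, 11), reason="requires Python 3.11 or later"
)
legacy_only = pytest.mark.skipif(
    sys.version_info >= (3, 10), reason="legacy code path, Python < 3.10 only"
)


@requires_py311
def test_reads_rate_config() -> None:
    import tomllib  # standard library module added in Python 3.11

    assert tomllib.loads("rate = 5")["rate"] == 5


@legacy_only
def test_legacy_behaviour() -> None:
    assert sys.version_info < (3, 10)
```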

Skip All Tests

If you have a work-in-progress test file and want to skip all of its tests, assign a skip marker to the module-level pytestmark variable at the top of the file, e.g. pytestmark = pytest.mark.skip("all tests still WIP").

test_interest_calculator_skip_all.py

```python
import pytest
from interest_calculator.core import compound_interest, simple_interest  # assumed import path

pytestmark = pytest.mark.skip("all tests still WIP")


def test_simple_interest_calculator_skip_all() -> None:
    value = simple_interest(8, 6, 8)
    assert value == 3.84


def test_compound_interest_calculator_skip_all() -> None:
    value = compound_interest(10000, 2, 5)
    assert value == 110250000.0
```

This will skip ALL unit tests in this test file.

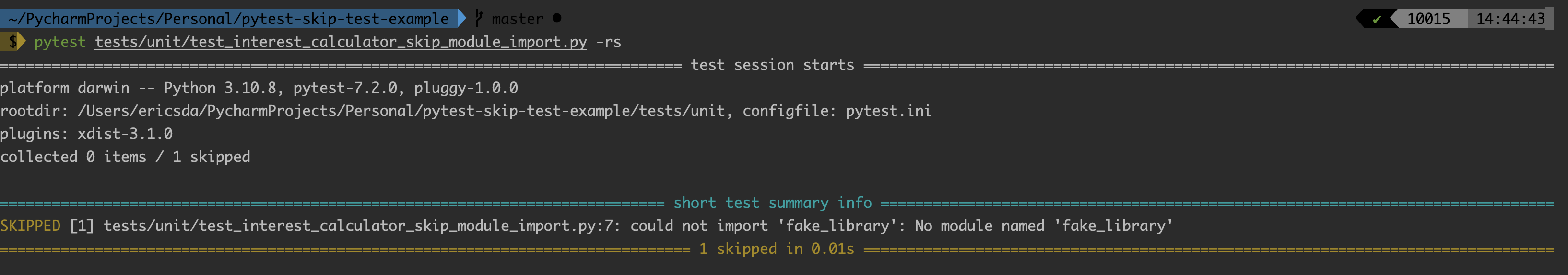
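To skip a whole file only under some condition, you can either assign a skipif marker to pytestmark or call pytest.skip() at import time with allow_module_level=True. A sketch of the second option, assuming a hypothetical SKIP_WIP_TESTS environment variable:

```python
import os

import pytest

# Hypothetical opt-out flag for work-in-progress test files.
SKIP_WIP = os.environ.get("SKIP_WIP_TESTS") == "1"

if SKIP_WIP:
    # Without allow_module_level=True, pytest reports a collection error
    # instead of skipping when pytest.skip() is called outside a test.
    pytest.skip("all tests still WIP", allow_module_level=True)


def test_wip_placeholder() -> None:
    assert 2 + 2 == 4
```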

Skip If Module Import Fails

Another reason to skip a test is when a module import fails.

This is particularly useful when an important external dependency is unavailable for whatever reason.

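test_interest_calculator_skip_module_import.py

```python
import pytest
from interest_calculator.core import compound_interest, simple_interest  # assumed import path

# Module-level call: if fake_library can't be imported, every test in this file is skipped.
fake_library = pytest.importorskip("fake_library")


def test_simple_interest_calculator_module_import() -> None:
    value = simple_interest(8, 6, 8)
    assert value == 3.84


def test_compound_interest_calculator_module_import() -> None:
    value = compound_interest(10000, 2, 5)
    assert value == 110250000.0
```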

As expected, fake_library does not exist, so pytest skips the whole test file rather than failing it.

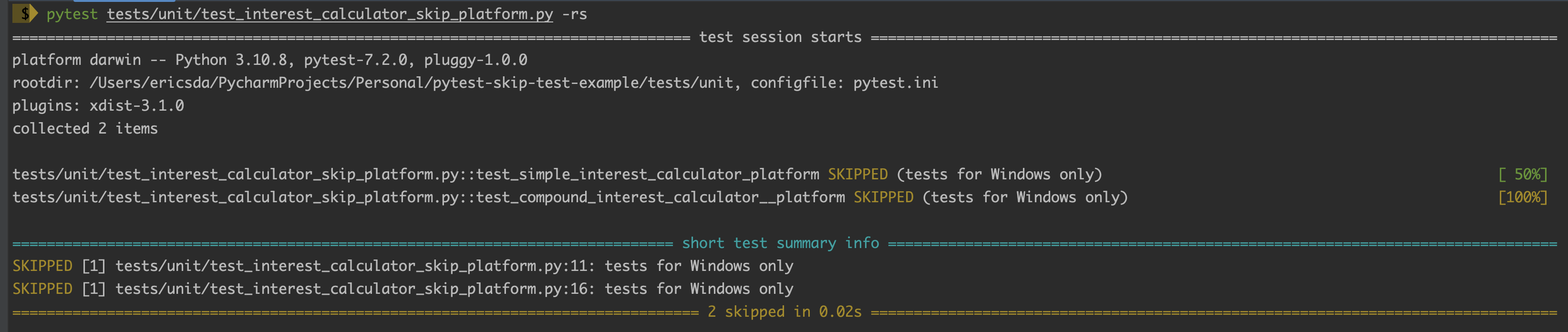
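pytest.importorskip() can also be called inside a single test, in which case only that test is skipped, and it returns the imported module when the import succeeds. Its minversion argument additionally skips the test when the module’s __version__ is too old. A sketch; numpy and the 1.20 cut-off are illustrative choices:

```python
import pytest


def test_optional_dependency() -> None:
    # Skips just this test (not the whole file) when fake_library is missing.
    fake_library = pytest.importorskip("fake_library")
    assert fake_library is not None


def test_stdlib_module() -> None:
    # On success, importorskip returns the imported module object.
    json = pytest.importorskip("json")
    assert json.loads("[1, 2]") == [1, 2]


def test_recent_numpy() -> None:
    # Also skips if numpy.__version__ is older than minversion.
    np = pytest.importorskip("numpy", minversion="1.20")
    assert int(np.array([1, 2]).sum()) == 3
```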

Skip On Platform

As with the other conditions, we can skip a test when running on macOS, or have it run only on macOS and skip on Windows.

Any condition based on sys.platform works the same way.

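test_interest_calculator_skip_platform.py

```python
import sys

import pytest
from interest_calculator.core import compound_interest, simple_interest  # assumed import path

# Skips every test in this file on macOS ("darwin"); they still run on Windows and Linux.
pytestmark = pytest.mark.skipif(sys.platform == "darwin", reason="tests for Windows only")


def test_simple_interest_calculator_platform() -> None:
    value = simple_interest(8, 6, 8)
    assert value == 3.84


def test_compound_interest_calculator__platform() -> None:
    value = compound_interest(10000, 2, 5)
    assert value == 110250000.0
```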

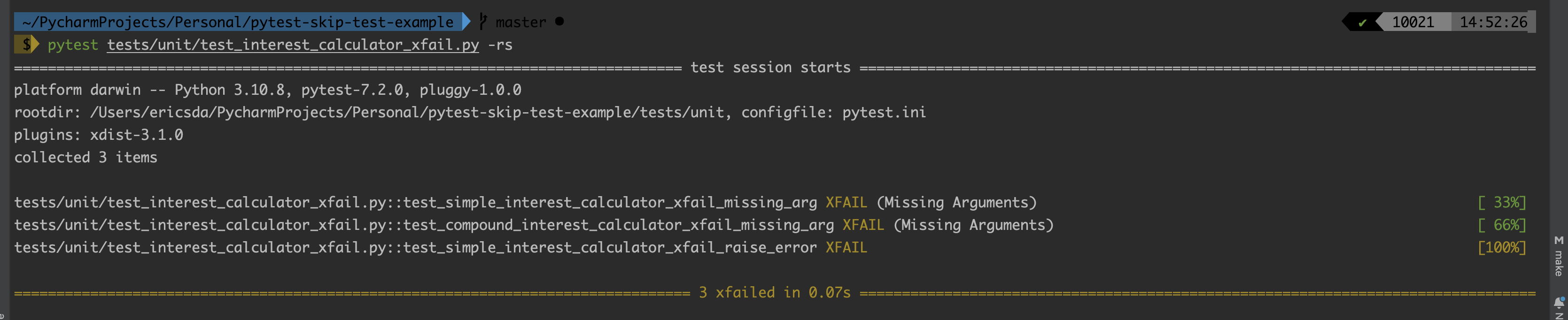
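Running a test on only one platform simply negates the condition. A sketch with illustrative marker names, relying on the standard sys.platform values "win32" (Windows), "darwin" (macOS) and "linux":

```python
import os
import sys

import pytest

IS_WINDOWS = sys.platform == "win32"

# Illustrative reusable markers built from sys.platform.
windows_only = pytest.mark.skipif(not IS_WINDOWS, reason="tests for Windows only")
skip_on_macos = pytest.mark.skipif(sys.platform == "darwin", reason="not supported on macOS")


@windows_only
def test_windows_path_separator() -> None:
    assert os.sep == "\\"


@skip_on_macos
def test_runs_on_windows_and_linux() -> None:
    assert sys.platform != "darwin"
```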

XFail — Basic Usage Example

Now that we’ve looked at skipping tests, let’s take a look at how to mark a test as expected to fail with XFail.

Why would you want to?

A couple of valid reasons include a bug fix that is yet to be implemented or a new feature that is yet to be released.

In our code example, we’ve implemented this for a simple Missing Argument use case.

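Note: This is purely for illustration. In your own source code, you’ll want to catch and handle as many exceptions as possible rather than just expecting a missing argument to fail.

```python
import pytest
from interest_calculator.core import compound_interest, simple_interest  # assumed import path


@pytest.mark.xfail(reason="Missing Argument")  # illustrative reason
def test_simple_interest_calculator_xfail_missing_arg() -> None:
    value = simple_interest(8)  # Missing Argument - FAIL
    assert value == 3.84


@pytest.mark.xfail(reason="Missing Argument")  # illustrative reason
def test_compound_interest_calculator_xfail_missing_arg() -> None:
    value = compound_interest(2, 5)  # Missing Argument - FAIL
    assert value == 110250000.0


# raises=TypeError: only a TypeError counts as the expected failure;
# any other exception is reported as a regular failure.
@pytest.mark.xfail(reason="Missing Argument", raises=TypeError)
def test_simple_interest_calculator_xfail_raise_error() -> None:
    value = simple_interest(8)  # Missing Argument - FAIL - Raise Type Error
    assert value == 3.84
```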
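The xfail marker takes a few more arguments worth knowing. A sketch; the simple_interest() below is a stand-in copy of the core.py function so the snippet runs on its own, and the reason strings are illustrative:

```python
import pytest


def simple_interest(principal: float, time_: float, rate: float) -> float:
    # Minimal stand-in for interest_calculator.core.simple_interest.
    return (principal * time_ * rate) / 100


# strict=True turns an unexpected pass (XPASS) into a failure, so the marker
# can't silently outlive the bug it documents.
@pytest.mark.xfail(reason="Missing Argument", raises=TypeError, strict=True)
def test_simple_interest_missing_arg_strict() -> None:
    simple_interest(8)  # type: ignore[call-arg]


# run=False reports the test as xfailed without executing its body at all,
# e.g. when running it would hang or crash the test session.
@pytest.mark.xfail(run=False, reason="hypothetical: known to hang until fixed")
def test_not_executed() -> None:
    raise RuntimeError("pytest never runs this body")


def test_feature_not_released() -> None:
    # pytest.xfail() stops the test at this line and reports it as xfailed,
    # mirroring what pytest.skip() does for skips.
    pytest.xfail("hypothetical feature not released yet")
```

If you want every xfail marker to be strict by default, pytest also offers the xfail_strict ini option.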

XFail vs Skip Test

So a big question is: should you use XFail or Skip Test for tests that can’t pass yet?

That is entirely up to you, although I personally prefer marking tests as xfail rather than skipping them.

The reason is that, unlike a skipped test, an xfail test still runs by default (unless you pass run=False), so pytest reports it as xfailed, or as XPASS if it unexpectedly passes, and any code it executes counts toward your coverage report.

This can help you achieve a higher % test coverage.

Important — Whatever you choose, make sure you’re only skipping or failing tests when it’s absolutely necessary. This is not an excuse for poor Test Driven Development.

Conclusion

This has been an interesting article where we explored how to use the Pytest Skip and Xfail functionality to skip or fail Unit Tests where necessary.

We looked at how to skip tests using a real example for a simple and compound interest calculator.

We covered several approaches: markers on individual tests and test classes, pytest.skip() inside the test body, and module-level markers that skip entire test files.

We looked at conditional and unconditional skips.

Some reasons to skip include platform or Python version incompatibility, missing module dependencies and more.

Lastly, we looked at how you can use XFail to mark a test as expected to fail instead of skipping it, so that it still runs and contributes to your test coverage %.

If you have any ideas for improvement or would like me to cover any topics, please comment below or send me a message via Twitter, GitHub or Email.

Till the next time… Cheers!

Additional Reading

How to use skip and xfail to deal with tests that cannot succeed - pytest documentation

Pytest skipif: Skip a Test When a Condition is Not Met - Data Science Simplified

Pytest options - how to skip or run specific tests - qavalidation